Introduction

In July 2023 I finally decided to ditch my ADSL connection and upgrade to fiber optics (FTTH). Living in Israel, the whole thing gets a bit complicated, because there’s a separation between the infrastructure provider and the ISP. In other words, the fiber connections is supplied by company A, which brings the connection to the doorsteps of company B, the ISP, which takes care of the connection to the world. A and B can be the same company, but they often aren’t.

In my case, Bezeq is the provider of the fiber connection itself, and I’ve chosen Netvision / Cellcom as the ISP. The technical details below may be significantly different for you, in particular if another company has done the fiber infrastructure. There seems to be a lot of confusion regarding the infrastructure part, possibly because different service providers do things differently. I don’t know. This post is all about my own Internet connection.

There are three reasons why I got so involved in the gory details of this:

- It pays off in the long run. In particular, on that day when the Internet link falls, you call customer support and they are clueless (as always). If I can figure out what the problem is based upon my understanding of the system and the information that my own equipment provides, the link has a chance to get back quickly. Otherwise, good luck. And yes, it’s their job to know what they’re doing, but that’s nice in theory.

- There was some problem with ordering the service. It’s not clear exactly why, but it seems like a new fiber splitter was needed in the floor connection box (more on that below). It took a month from my first phone call to the actual installation.

- This stuff is actually interesting, and somewhat related to things I do for a living. I didn’t become an electrical engineer for nothing.

Reason #1 above implies working with equipment that exposes technical information. Sweet-talking routers that are configured automatically by virtue of a mobile phone app may be quick to set up, but then go figure what’s going on when something goes wrong. The fiber front-end should be as simple as possible and yet provide diagnostic information, at best.

Acronyms

I’m going to use these, so let’s get this over with:

- PON (Passive optical network) is the standard that arranges fiber connections in a one-to-many topology.

- GPON: Gigabit-capable PON. A standard on top of PON, which makes it capable for carrying Gigabit Ethernet.

- ONT: Optical Network Terminal. That is the little box we have in our homes. Sometimes called “fiber modem”, “fiber adapter”. Even a router is some kind of ONT.

- OLT: Optical Line Terminal: That’s the electronics that is connected to the fiber somewhere in the company’s premises.

- FTTH: Fiber to the home. A commercial term with obvious meaning.

The technical details in brief

The fiber connection is PON with an SC/APC connector (used for Gigabit Ethernet, hence GPON). I’m discussing this to painstaking detail below, so for now we’ll do with the fact that only one fiber is used for full-duplex communication. Unlike the SFP+ configuration I’m used to, which carries two fibers with a slinky feel, and usually connects with a dual connector, a GPON fiber looks like a somewhat rigid wire, and has a connector with a square shape.

But the most important technical detail is that pppoe is used. Effectively, the look and feel is exactly like ADSL, only it’s faster. Just like with ADSL, you get a username and a password. If you have a router, the credentials go there. But if you opted that out like I did, and instead went for an simple ONT (a.k.a. “fiber adapter”, “ONT bridge”, “fiber modem”), the computer needs to do the pppoe stuff (or maybe an pppoe-capable router, but what’s the point?).

As it turns out, the only thing I needed to change in my existing pppoe setting was the username and password.

Well, no. Doing that is enough to get a working Internet connection with an impressive data rate, but not even close to the advertised rate. To attain that, I had to switch to using the kernel’s pppoe module, as detailed in a separate post.

As a side note, my original plan was to have ADSL and fiber optics running for a month in parallel, just to be safe. But as soon as I got the fiber account activated (see below), the ADSL stopped working. Even though I asked explicitly to keep both accounts active in parallel, and even though the username for fiber was different from the one I used for ADSL (albeit very similar), ADSL got knocked down. I guess I asked for too much. Because it went so easily with the fiber, I wasn’t in the mood to waste time on ensuring redundancy.

Speed tests

There are two interesting sites for this:

- Netvision’s own speed test. I got 930 Mb/s down and 100 Mb/s up on this test. Quite reasonable.

- Ookla’s speed test allows choosing which server to run against. So picking Bezeq’s server, for example, I got 500 Mb/s down and 100 Mb/s up. This says nothing more than the connections between Cellcom and Bezeq.

Downloading a huge from from ISOC’s mirror server gave me around 400 Mb/s.

So the 1000 Mb/s figure is nice, but theoretical. And that’s before starting with server’s abroad. For example, downloading a huge file from a server in Germany (which is considered relatively close), the rate began at ~80 Mbit/s, and slowly rose towards 320 Mb/s. Which isn’t bad, actually.

GPON in a nutshell

GPON is a one-to-many framework. It means that the service provider (OLT) broadcasts a single 2.4 Gbit/s downstream signal to all subscribers (up to 128). Optical splitters divide this signal among the physical fibers that reach the subscribers. Likewise, all upstream signals are optically combined on their way to the OLT’s optical sensor. A 1.2 Gbit/s stream is TDMA’ed among the subscribers to avoid collisions. Each frame on the fiber is 125 μs, in both directions.

Each subscriber is connected with only one fiber, with a separate light wavelength for each direction: 1490 nm downstream, 1310 nm upstream (both infrared, hence invisible even with my smartphone’s camera).

The handshake that sets up the GPON link usually takes less than a second, but it involves several stages. During this setup, the ONT’s unique serial number is transmitted to the OLT. This means that the provider of the fiber network service can identify each subscriber, regardless of how the fibers are connected. It also means that if the ONT is replaced with another similar box, there will probably be a need to inform the service provider about the updated serial number, or else it will be rejected.

Like any TDMA protocol, the transmission of upstream data must be orchestrated and synchronized. As there might be significant differences between the fibers’ lengths, a calibration process called ranging takes place soon after the fiber is connected to the ONT. This allows compensating for these differences, and ensure an accurate timing of the upstream frames.

The immediate conclusion is that we share bandwidth with our neighbors. How many neighbors and which ones, that depends on how the optical splitting is made, but in most realistic scenarios, the data bottleneck will not be on the fiber: For now, the Internet itself isn’t all that fast, after all.

But if someone has a faulty ONT that transmits data when it shouldn’t (or say, all the time), this can definitely knock down a few neighbor’s connection. Not likely to happen, and still.

Is the pppoe handshake necessary?

I’ve seen talk about users getting a “DHCP connection” over fiber. It’s not clear if they got confused with the fact that their router at home gave them an IP address, or if some people skip the pppoe thing, and talk with a DHCP server across the fiber link.

Given that the bringup of the GPON link involves the ONT’s identification with its serial number, one could argue that the pppoe negotiation is unnecessary: The OLT knows who it is talking with, based upon the serial number. But skipping pppoe requires that OLT does the pairing with the ISP based upon the serial number, rather than the username (which includes the name of the ISP).

So ditching the pppoe thing is surely possible theoretically, and it would surely make things simpler. I don’t know if it has been done in Israel, though.

The fiber/Ethernet “adapter”

If you read my short discussion about GPON above, you’ve realized that there is no such thing as an “fiber to Ethernet adapter” on a GPON system. It’s an ONT, at it’s a very active device. The word “adapter” would have been suitable had the fiber connection been plain Gigabit Ethernet over fiber, with an SFP+ connector, because in that case the adapter indeed just translates the optical signal into an electrical one. But this is definitely not the case with the fibers I had installed.

To my technician’s disappointment, I didn’t want to take Cellcom’s all-in-one router, but requested what is called an “adapter” or “fiber modem” instead. There’s actually a price reduction for going this way, but this wasn’t my motivation: I want the computer to do the pppoe, so I can see what happens.

So the technician gave me a Nokia 7368 ISAM ONT G-010G-Q. It’s a small box with four LEDs. Looked a bit old and used, actually.

The most important thing to know about this box is that it takes about 60 seconds for this thing to boot after powerup.

During this time, the PON LED remains off no matter what, even if the fiber is connected correctly. After these 60 seconds, the “Alarm” LED (red) gets on briefly, after which the PON LED will also go on (if the fiber is connected). A disconnection of the fiber causes the “Alarm” LED to go on, and PON go off. Reconnecting the fiber reverses this (the PON will flash for a second before getting steady). All this is regardless of the LAN LED, which depends on the Ethernet plug, of course.

So a good way to get confused with this box is to turn it on with the fiber connected, see that PON doesn’t go on, and then start doing things randomly, possibly turning the box off and on again. 60 seconds is longer than most of us expect, and the box doesn’t give any indication that it’s starting up.

Another thing to keep in mind is that when the PON LED flashes, it means that a physical connection has been detected, and the box is busy bringing up the data link. When it stays in this state for a long time, it may be because the OLT (the fiber service provider) doesn’t recognize the ONT’s serial number, and therefore refuses to continue the bringup process. During my installation, this was the situation for quite a few minutes, while the technician was on the phone, reading out the ONT’s serial number, among others.

The disadvantage with this Nokia box is that it apparently has no admin interface on the Ethernet side. So it gives me no diagnostic information.

So I got myself a ZTE F601 for $20 instead. It’s listed in Bezeq’s list of approved ONTs, and it has an administration interface that is accessible with a web browser. This interface allows changing the PON serial number, and a whole lot of other things. I’ve written about ZTE’s ONT in a separate post. Bottom line: It’s a nice thing, and I use it instead of Nokia’s ONT. Apparently, the only drawback it has is that the PON LED can be misleading. I elaborate on that on the said post.

But after three weeks with this ONT, I got a phone call from my ISP. They asked me to move back to the Nokia ONT, or Bezeq would disconnect me. I responded by sending the said list of supported ONTs as well as a screenshot of the web interface screen, which shows that the version is correct. However, that didn’t help: Apparently, Bezeq don’t agree that Cellcom users have something different than what’s listed, and they surely didn’t like that I played with the serial number. Or maybe was it Cellcom who didn’t like my games, and they just blamed Bezeq?

I left a brief complaint at Bezeq’s website, and soon enough I got a phone call from their representative. I explained the situation, and a couple of days later, they were back with a clear message: Bezeq has no problem with my ONT, and they had specifically checked my connection and verified that there had been nothing odd reported regarding it.

So it looks like Cellcom doesn’t like my ONT, and they blamed Bezeq for it. Anyhow, I moved back to the Nokia thingy. ZTE will be used as a fallback and for debugging if necessary. It’s not worth my time and effort to fight about this. And the fact that representatives of communication companies make up stories is not really new.

Fun fact: The PPPoE session remained even after replacing the ONT. There was no need to re-initiate it.

Note to self: I left the firewall rules that allow communication with the ZTE ONT.

Choosing ISP

There are several companies in Israel that offer “Internet over Fiber”. One needs to keep in mind that choosing an ISP has an impact on exactly three factors:

- The data rate. The ISP supplies the facilities for routing to the traffic to the world. So except for local services (local TV stations etc. or services that have local servers for better performance), it’s the amount of bandwidth that the ISP has purchased for its connection with Europe and USA that matters.

- The annoyances. Does the ISP block certain types of traffic, for example Bittorrent? Does it “accidentally” disconnect your session in the middle of very long downloads? Does it block certain ports “for your safety”?

- The customer support. Eventually, you’ll have to call someone. How will that work?

I’ve been a Netvision (Cellcom) customer for a very long time, and I’m pretty satisfied with them. So the natural choice is to stay with them.

I did check with Partner, though. The people I got to talk with were exceptionally unprofessional. The person who called me up in response to my interest was very nice, but had no idea what I talked about when I said that I wanted an “adapter” and not a router. I was then handed over to their more technical guy who told me that nobody offers a fiber connection without a router (something Netvision had already approved they’ll do). So Partner was out, because they only offer their router.

I can actually understand that ISPs want to use their own router. All the technical hocus-pocus (activating the ONT’s serial number + setting up the pppoe username and password) is done with a simple mobile app, when using this kind of router. It also makes it a bit more complicated to move from one ISP to another, because I suppose each company locks its routers to its own services (or at least, doesn’t allow this automatic setup to another service). So even though you are legally free to change ISP, it’s still not something you can do with a simple phone call, and boom, you moved. A technician needs to pay a visit. This slows down the migration process, which gives the losing ISP a chance to do the sweet talk. With a neutral ONT, I can change ISP just like that. To an ISP that doesn’t require their own router, of course.

The third alternative that I checked was Bezeq. Their price was high, and my long-time experience with this company is to have as little as possible to do with them.

But I did consider them because of completely different reason: My first call to Cellcom for installing fiber was a month before the actual installation. On this initial call, the sales shark at the other end said that there’s an infrastructure issue that needs to be solved, and promised to come back to me soon. Two weeks passed, and I tried my luck again. Another guy said basically the same, said that he had begun the process of fixing the infrastructure issue, and then disappeared too. I tried again after a week, and this second guy finally opened a call for a technician to install fiber at my place.

A couple of days later, I got a call from Cellcom. This time they wanted to cancel the technician call, because there were missing details, which indicates an infrastructure problem. I refused, and told them to send a technician, and once he arrives, we’ll figure out why there’s a problem.

Eventually, this technician arrived and made the installation.

But why did it take a month? As I’ll detail below, there were already four fiber subscribers in my building. In order to add another one, there was a need to add another fiber splitter in the connection box at the bottom floor. It looks like it was added during this month, not clear when. But it’s clear who did it: Bezeq. It’s quite likely that Bezeq was reluctant to send their technician to allow the connection of a Cellcom subscriber, or maybe their technicians are busy, so they prioritize their own subscribers.

So had I known then what I know today (see below), I would have looked at the connection box and understood the problem. I would then have requested an installation of Bezeq’s fiber service. One day before the arrival of the technician, I would have checked if they had added the splitter, and if so, canceled the installation. If not, I would have let Bezeq’s technician do the fiber installation, and then switched ISP.

The installation process

The rest of this post is dedicated to the art of connecting fibers. In detail, maybe too much detail.

Note to self: See misc-after-moving-here/fiber-internet directory for a few even more specific details.

I’ll start with pointing out that my technician uses the word for “fiber” (“סיב”) to refer to a fiber cable. He uses the word for “leg” (“רגל”) in reference to a single fiber.

And now to what he actually did: The first step is to draw a single fiber from the requested point inside the apartment to the connection box outside the apartment, but on the same floor. In my case, that consisted of pulling the fiber through the existing cable pipes, side-by-side (or instead of) cables for cable TV and good old analog landline telephone.

As it was done in my case, the fiber just pops out from the lower edge of the telephone line jack’s plastic frame, going straight downwards. Not very elegant, but in my specific setting that’s barely visible.

The next step is to make a male optical connector on both sides of the fiber. There are special tools for that.

At this point, a red laser is connected to the fiber inside the apartment, using the connector. This will be used later.

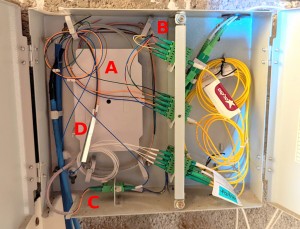

Now to the connection box outside the apartment: It’s manufactured by Appletec (this is a Youtube video showing how to install it + some fiber soldering and stuff). The idea is that a fiber cable is drawn from a box at the bottom floor to the top floor. On each floor, a number of fibers end at that floor’s connection box, one fiber for each apartment. These fibers are connected to female connectors on this box.

So the fiber from my apartment was connected to the floor’s connection box by mating two connectors. Now there’s an optical connection all the way to the connection box at the bottom floor. This is where all fibers from the building arrive, but even more important, this is where Bezeq’s fiber cable (or cables) arrive from their main switch (which can be literally miles away).

This connection box, which I’ll discuss in detail in the next section, is a patch panel for connecting between the fibers from the house to fibers that are connected to Bezeq. All fibers are connected to female connectors, so in order to connect an apartment to Bezeq, a short fiber cable, with two male connectors, is connected between two points.

The tricky part is to tell which of the fibers in this patch panel is connected to my apartment. Well, not really tricky, because of that red laser that was connected to that fiber inside my apartment. So there’s a clear red light showing which one to go on with.

A sticker with details is put on the fiber in both connection boxes. Both stickers containing the same information. It seems like Cellcom’s method is to write the apartment number, the floor, the “tag” number (“מספר תג”) and the Cellcom customer’s number. Partner appear to write the name of the subscriber and nothing else. I have no idea how Bezeq do their marking, because none of my neighbors was stupid enough to choose them. Anyhow, it’s a matter of time before the bottom connection box becomes a snakesnest. Not to mention that it’s practically accessible to anyone.

The floor level connection box

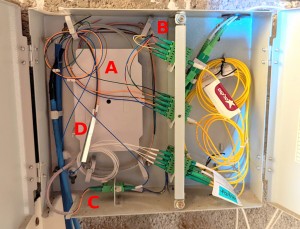

This box is manufactured by Appletec as well. Here’s a picture of its interior: (click to enlarge)

Quite evidently, this box is divided into a left and right compartment, each having a separate door. The intention is most likely that subscription installers keep themselves to the right side, and the infrastructure guys do their stuff to the left.

The first problem that is tackled at the left side of this box is that the fibers that are packed into a cable are too fragile to handle directly. The solution is to insert the cables into a protected enclosure (which I’ve marked A on the photo). In this closure, fusion splice (which is a bit like soldering or welding) is used to make 1:1 connections between the fragile fibers and fibers that have a mechanical protection shield (a thin coating, similar to electrical wire’s isolation). The latter fibers can then be used for the “wiring”. To get an idea what happens inside this enclosure, check out this Youtube video.

There are two types of cables that arrive to this box: Those from the upper floors and those from Bezeq. All these fibers get into this same little enclosure, get their thing done, and then it’s up to the technician who set up the connection box to not get confused about which fibers are which.

I’ve marked a few highlights in this box’ left side in the photo above:

- A: Inside this little plastic enclosure, there are 1:1 connections between fibers from cables and the fibers that are used inside the left side of the connection box. Explained above.

- B: Group of all fibers connected from upper floors (coming from 1:1 connections with cable).

- C: Group of fibers connected to Bezeq’s cable. There are only two of them.

- D: This metal rod is one of two 1:4 splitters in the connection box. The blue fiber at this splitter’s bottom comes from one of the fibers at C (it makes a nice loop on the way there), and the four outputs go to the patch panel’s front. The other 1:4 splitter, which was most likely installed earlier, is lying down on the box’ floor, close to the letter C, and is the output of the four white fibers.

So there are two 1:4 splitters in this connection box. The differences in the colors of the fibers and the way they are laid out in the box indicate that they were installed in different occasions. It seems like the one hanging vertically was installed lately in order to allow for my installation, which is the fifth subscriber on this box.

The right side is supposed to be a patch panel. One could have expected some kind of order, like an upper row of fiber connectors for connections to apartments and a lower row for connections to Bezeq. The reality in this box is not just that there’s no visible distinction, but that the genius who set up this box mixed them up completely. Some kind of note on the box saying which connection is what is of course way too much to expect, even though the slots are numbered.

At the moment there are only 8 live fibers from Bezeq available at the patch panel’s front (i.e. the wall between the left and right parts), so it’s quite likely that one of the fibers will have to be split further at a later stage (as my neighbors upgrade to fibers).

But wait. Why are there two fibers coming in a the box’ top-right corner, and another couple at the bottom right? This is supposed to be a patch-panel part, meaning all connections are internal!

The answer is simple: All four fiber subscriber installations were made with the fiber drawn directly from this connection box to the apartments. They simply ignored the connection boxes on each floor, and went directly down to the bottom floor. Why? Probably because the wiring infrastructure in this specific building makes it easier to draw a fiber all the way through, rather than to use the brain.

References

I have Google Chrome installed on a Linux machine at /opt/google as root, so the browser can’t update itself automatically. Instead, it complains with this pop-up every time the browser is started:

What I really like about this pop-up is the “you’re missing out” part. I get the same thing from the silly image gallery app on my Google Pixel phone. This is Google trying to play on my (not so existent) FOMO.

It has been suggested to add the –simulate-outdated-no-au argument to the command line that executes Chrome. This works indeed. The common suggestion is however to do that on the shortcut that executes the browser. But that won’t cover the case when I run the browser from a shell. Something I do, every now and then. Don’t ask.

So a more sledge hammer solution is to edit the wrapper script:

$ which google-chrome

/usr/bin/google-chrome

So edit this file (as root), and change the last line from

exec -a "$0" "$HERE/chrome" "$@"

to

exec -a "$0" "$HERE/chrome" --simulate-outdated-no-au='Tue, 31 Dec 2099' "$@"

What does this mean, then? Well, according to the list of Google Chrome switches, this switch “simulates that current version is outdated and auto-update is off”. The date is referred to in the source’s upgrade_detector_impl.cc. Look there if you want to figure out why this works (I didn’t bother, actually).

Introduction

These are notes I made while trying to make my Sony WH-CH510 bluetooth headphones work properly with my Linux Mint 19 machine. It’s evident that an upgrade of the whole OS would have fixed the problem, but I have my opinion on upgrades.

The problem: It takes a long time for the headphones to connect (around 20-30 seconds), and once that happens, the headphones are not automatically chosen by Pulseaudio as the output device. There are hacky solutions to the second part of the problems, but I have this thing about wanting to solve a problem properly.

The twist is that there’s no problem at all with Sony’s WH-CH500 headphones, neither with a pair of junky earbuds, which are labeled Y30.

So after trying quite a few quick fixes, I decided to get to the bottom of the problem. The good news is that I found out the reason for the problem. The bad news is that I didn’t find any direct solution for it.

So my solution was to upgrade the bluetooth daemon, and I did it in a rather exotic way: I basically installed a Linux Mint 21.1 (“Victoria”) pretty much in the same way as explained in this post. Now bluetoothd version 5.64 runs instead of Linux Mint’s 19 version 5.48 of this daemon, and everything works like a charm. But it’s a rather tangled solution if you don’t have this parallel Linux distribution installed for other reasons.

The rest of this post is a collection of things I tried before upgrading the daemon.

Bluetooth is handled by the kernel, which presents an hci device (typically hci0). The heavy lifting of implementing the protocol is done by bluetoothd ( /usr/lib/bluetooth/bluetoothd on my machine, started by the bluetooth systemd service). Try, for example,

$ hciconfig

Sniffing

To capture bluetooth communication, there are two primary option: The bluetooth0 interface in Wireshark or the btmon command line utility. Wireshark is more comprehensive as always, however btmon is actually easier to work with, because all crucial information is concentrated in a text file. The eternal tradeoff between GUI and text. Possibly, use both.

I’m going to show excerpts from btmon dumps below, obtained with e.g.

$ sudo stdbuf -oL btmon | tee btmon.txt

This could have been just “sudo btmon”, but using stdbuf and tee, there’s also data printed out to console in real time (stdbuf removes unnecessary buffering).

I skip a lot of entries, because there are, well, a lot of them. Hopefully I didn’t throw away anything relevant.

With btmon, “>” means incoming to host, and “<” means outgoing from host (the device is at the left side, which is a bit odd).

I’ve never carried out a bluetooth-related project, so all my comments on btmon’s output below are no more than hopefully intelligent guesses. I don’t pretend to be acquainted with any of the related protocols.

The connection

This is WH-CH510′s connection request (the earbud’s request was exactly the same):

> HCI Event: Connect Request (0x04) plen 10 #11 [hci0] 10.725859

Address: 30:53:C1:11:40:2D (OUI 30-53-C1)

Class: 0x240404

Major class: Audio/Video (headset, speaker, stereo, video, vcr)

Minor class: Wearable Headset Device

Rendering (Printing, Speaker)

Audio (Speaker, Microphone, Headset)

Link type: ACL (0x01)

< HCI Command: Accept Connection R.. (0x01|0x0009) plen 7 #12 [hci0] 10.725914

Address: 30:53:C1:11:40:2D (OUI 30-53-C1)

Role: Master (0x00)

And after a few packet exchanges, this appears in the dump output (three times, not clear why):

@ MGMT Event: Device Connected (0x000b) plen 28 {0x0001} [hci0] 11.256871

BR/EDR Address: 30:53:C1:11:40:2D (OUI 30-53-C1)

Flags: 0x00000000

Data length: 15

Name (complete): WH-CH510

Class: 0x240404

Major class: Audio/Video (headset, speaker, stereo, video, vcr)

Minor class: Wearable Headset Device

Rendering (Printing, Speaker)

Audio (Speaker, Microphone, Headset)

Cheap earbud’s service negotiation

Unlike USB, the device is in control: The requests information, and the host responds. The device chooses how to set up the connection, and the host follows suit. And it’s also the device that possibly messes up.

For the Y30 earbuds, the attribute request / response session went as follows.

First, the device checks if the host supports Handsfree Audio Gateway (0x111f), by asking for attributes, but the host doesn’t have any of it:

> ACL Data RX: Handle 17 flags 0x02 dlen 24 #42 [hci0] 14.317596

Channel: 64 len 20 [PSM 1 mode 0] {chan 0}

SDP: Service Search Attribute Request (0x06) tid 1 len 15

Search pattern: [len 6]

Sequence (6) with 3 bytes [16 extra bits] len 6

UUID (3) with 2 bytes [0 extra bits] len 3

Handsfree Audio Gateway (0x111f)

Max record count: 512

Attribute list: [len 6]

Sequence (6) with 3 bytes [16 extra bits] len 6

Unsigned Integer (1) with 2 bytes [0 extra bits] len 3

0x0004

Continuation state: 0

< ACL Data TX: Handle 17 flags 0x00 dlen 14 #43 [hci0] 14.317758

Channel: 64 len 10 [PSM 1 mode 0] {chan 0}

SDP: Service Search Attribute Response (0x07) tid 1 len 5

Attribute bytes: 2

Continuation state: 0

So the device tries AVDTP (0x0019) instead:

> ACL Data RX: Handle 17 flags 0x02 dlen 24 #45 [hci0] 14.322578

Channel: 64 len 20 [PSM 1 mode 0] {chan 0}

SDP: Service Search Attribute Request (0x06) tid 2 len 15

Search pattern: [len 6]

Sequence (6) with 3 bytes [16 extra bits] len 6

UUID (3) with 2 bytes [0 extra bits] len 3

AVDTP (0x0019)

Max record count: 512

Attribute list: [len 6]

Sequence (6) with 3 bytes [16 extra bits] len 6

Unsigned Integer (1) with 2 bytes [0 extra bits] len 3

0x0004

Continuation state: 0

< ACL Data TX: Handle 17 flags 0x00 dlen 60 #46 [hci0] 14.322737

Channel: 64 len 56 [PSM 1 mode 0] {chan 0}

SDP: Service Search Attribute Response (0x07) tid 2 len 51

Attribute bytes: 48

Attribute list: [len 21] {position 0}

Attribute: Protocol Descriptor List (0x0004) [len 2]

Sequence (6) with 6 bytes [8 extra bits] len 8

UUID (3) with 2 bytes [0 extra bits] len 3

L2CAP (0x0100)

Unsigned Integer (1) with 2 bytes [0 extra bits] len 3

0x0019

Sequence (6) with 6 bytes [8 extra bits] len 8

UUID (3) with 2 bytes [0 extra bits] len 3

AVDTP (0x0019)

Unsigned Integer (1) with 2 bytes [0 extra bits] len 3

0x0103

Attribute list: [len 21] {position 1}

Attribute: Protocol Descriptor List (0x0004) [len 2]

Sequence (6) with 6 bytes [8 extra bits] len 8

UUID (3) with 2 bytes [0 extra bits] len 3

L2CAP (0x0100)

Unsigned Integer (1) with 2 bytes [0 extra bits] len 3

0x0019

Sequence (6) with 6 bytes [8 extra bits] len 8

UUID (3) with 2 bytes [0 extra bits] len 3

AVDTP (0x0019)

Unsigned Integer (1) with 2 bytes [0 extra bits] len 3

0x0103

Continuation state: 0

And yes, the host supports it.

AVDTP is (according to Wikipedia) used by the advanced audio distribution (A2DP) profile to stream music to stereo headsets over an L2CAP channel intended for video distribution profile in the Bluetooth transmission.

So it goes on with connecting channel 65 to service number 0x0019 (PSM stands for Protocol Service Multiplexor).

> ACL Data RX: Handle 17 flags 0x02 dlen 12 #51 [hci0] 14.358888

L2CAP: Connection Request (0x02) ident 3 len 4

PSM: 25 (0x0019)

Source CID: 65

< ACL Data TX: Handle 17 flags 0x00 dlen 16 #52 [hci0] 14.358945

L2CAP: Connection Response (0x03) ident 3 len 8

Destination CID: 65

Source CID: 65

Result: Connection pending (0x0001)

Status: Authorization pending (0x0002)

< ACL Data TX: Handle 17 flags 0x00 dlen 16 #53 [hci0] 14.359202

L2CAP: Connection Response (0x03) ident 3 len 8

Destination CID: 65

Source CID: 65

Result: Connection successful (0x0000)

Status: No further information available (0x0000)

< ACL Data TX: Handle 17 flags 0x00 dlen 12 #54 [hci0] 14.359220

L2CAP: Configure Request (0x04) ident 3 len 4

Destination CID: 65

Flags: 0x0000

AVDTP (0x0019)> HCI Event: Number of Completed Packets (0x13) plen 5 #55 [hci0] 14.361709

Num handles: 1

Handle: 17

Count: 1

This is followed by a discovery phase:

@ MGMT Command: Start Discovery (0x0023) plen 1 {0x0001} [hci0] 14.362330

Address type: 0x07

BR/EDR

LE Public

LE Random

In the packet exchange that follows, the device obtains the capabilities of the host, and the audio connection is set up.

Sony WH-CH510′s service negotiation

The WH-CH510 takes another approach: It issues a Service Search Request for the Headset AG (0x1112). AG means Audio Gateway.

> ACL Data RX: Handle 20 flags 0x02 dlen 17 #48 [hci0] 11.736388

Channel: 64 len 13 [PSM 1 mode 0] {chan 0}

SDP: Service Search Request (0x02) tid 1 len 8

Search pattern: [len 5]

Sequence (6) with 3 bytes [8 extra bits] len 5

UUID (3) with 2 bytes [0 extra bits] len 3

Headset AG (0x1112)

Max record count: 68

Continuation state: 0

< ACL Data TX: Handle 20 flags 0x00 dlen 18 #49 [hci0] 11.736611

Channel: 64 len 14 [PSM 1 mode 0] {chan 0}

SDP: Service Search Response (0x03) tid 1 len 9

Total record count: 1

Current record count: 1

Record handle: 0x1000c

Continuation state: 0

And yes, it’s supported and given the handle 0x1000c. Using this handle, the device asks for this service’s attributes:

> ACL Data RX: Handle 20 flags 0x02 dlen 23 #51 [hci0] 11.741392

Channel: 64 len 19 [PSM 1 mode 0] {chan 0}

SDP: Service Attribute Request (0x04) tid 2 len 14

Record handle: 0x1000c

Max attribute bytes: 277

Attribute list: [len 7]

Sequence (6) with 5 bytes [8 extra bits] len 7

Unsigned Integer (1) with 4 bytes [0 extra bits] len 5

0x0000ffff

Continuation state: 0

< ACL Data TX: Handle 20 flags 0x00 dlen 97 #52 [hci0] 11.741610

Channel: 64 len 93 [PSM 1 mode 0] {chan 0}

SDP: Service Attribute Response (0x05) tid 2 len 88

Attribute bytes: 85

Attribute list: [len 83] {position 0}

Attribute: Service Record Handle (0x0000) [len 2]

0x0001000c

Attribute: Service Class ID List (0x0001) [len 2]

UUID (3) with 2 bytes [0 extra bits] len 3

Headset AG (0x1112)

UUID (3) with 2 bytes [0 extra bits] len 3

Generic Audio (0x1203)

Attribute: Protocol Descriptor List (0x0004) [len 2]

Sequence (6) with 3 bytes [8 extra bits] len 5

UUID (3) with 2 bytes [0 extra bits] len 3

L2CAP (0x0100)

Sequence (6) with 5 bytes [8 extra bits] len 7

UUID (3) with 2 bytes [0 extra bits] len 3

RFCOMM (0x0003)

Unsigned Integer (1) with 1 byte [0 extra bits] len 2

0x0c

Attribute: Browse Group List (0x0005) [len 2]

UUID (3) with 2 bytes [0 extra bits] len 3

Public Browse Root (0x1002)

Attribute: Bluetooth Profile Descriptor List (0x0009) [len 2]

Sequence (6) with 6 bytes [8 extra bits] len 8

UUID (3) with 2 bytes [0 extra bits] len 3

Headset (0x1108)

Unsigned Integer (1) with 2 bytes [0 extra bits] len 3

0x0102

Attribute: Unknown (0x0100) [len 2]

Headset Voice gateway [len 21]

Continuation state: 0

The device chooses to connect channel 65 to the RFCOMM service:

> ACL Data RX: Handle 20 flags 0x02 dlen 12 #62 [hci0] 12.337691

L2CAP: Connection Request (0x02) ident 6 len 4

PSM: 3 (0x0003)

Source CID: 65

< ACL Data TX: Handle 20 flags 0x00 dlen 16 #63 [hci0] 12.337747

L2CAP: Connection Response (0x03) ident 6 len 8

Destination CID: 64

Source CID: 65

Result: Connection successful (0x0000)

Status: No further information available (0x0000)

After some configuration packets, the discovery begins:

@ MGMT Event: Discovering (0x0013) plen 2 {0x0001} [hci0] 14.686897

Address type: 0x07

BR/EDR

LE Public

LE Random

Discovery: Disabled (0x00)

> ACL Data RX: Handle 20 flags 0x02 dlen 18 #91 [hci0] 15.848944

Channel: 64 len 14 [PSM 3 mode 0] {chan 0}

RFCOMM: Unnumbered Info with Header Check (UIH) (0xef)

Address: 0x63 cr 1 dlci 0x18

Control: 0xef poll/final 0

Length: 10

FCS: 0x0e

41 54 2b 43 49 4e 44 3d 3f 0d 0e AT+CIND=?..

> ACL Data RX: Handle 20 flags 0x02 dlen 17 #92 [hci0] 18.849015

Channel: 64 len 13 [PSM 3 mode 0] {chan 0}

RFCOMM: Unnumbered Info with Header Check (UIH) (0xef)

Address: 0x63 cr 1 dlci 0x18

Control: 0xef poll/final 0

Length: 9

FCS: 0x0e

41 54 2b 43 49 4e 44 3f 0d 0e AT+CIND?..

This discovery isn’t all that successful: The device sends AT commands, but the host doesn’t respond to them. 4 seconds of futile attempts.

After some useless back and forth, the device tries again with the same Service Search Request, to which it receives the same answer, and so it goes on.

> ACL Data RX: Handle 20 flags 0x02 dlen 17 #108 [hci0] 20.717682

Channel: 65 len 13 [PSM 1 mode 0] {chan 1}

SDP: Service Search Request (0x02) tid 3 len 8

Search pattern: [len 5]

Sequence (6) with 3 bytes [8 extra bits] len 5

UUID (3) with 2 bytes [0 extra bits] len 3

Headset AG (0x1112)

Max record count: 68

Continuation state: 0

[ ... ]

The device disconnects the futile channel 65:

> ACL Data RX: Handle 20 flags 0x02 dlen 12 #114 [hci0] 20.818859

L2CAP: Disconnection Request (0x06) ident 10 len 4

Destination CID: 65

Source CID: 64

< ACL Data TX: Handle 20 flags 0x00 dlen 12 #115 [hci0] 20.818906

L2CAP: Disconnection Response (0x07) ident 10 len 4

Destination CID: 65

Source CID: 64

And then it tries another few AT commands on this same channel, despite having disconnected it. It didn’t reconnect it, so probably disconnection doesn’t mean what I think it does…?

> ACL Data RX: Handle 20 flags 0x02 dlen 24 #118 [hci0] 22.225305

Channel: 64 len 20 [PSM 3 mode 0] {chan 0}

RFCOMM: Unnumbered Info with Header Check (UIH) (0xef)

Address: 0x63 cr 1 dlci 0x18

Control: 0xef poll/final 0

Length: 16

FCS: 0x0e

41 54 2b 43 4d 45 52 3d 33 2c 30 2c 30 2c 31 0d AT+CMER=3,0,0,1.

0e .

> ACL Data RX: Handle 20 flags 0x02 dlen 18 #119 [hci0] 25.285306

Channel: 64 len 14 [PSM 3 mode 0] {chan 0}

RFCOMM: Unnumbered Info with Header Check (UIH) (0xef)

Address: 0x63 cr 1 dlci 0x18

Control: 0xef poll/final 0

Length: 10

FCS: 0x0e

41 54 2b 43 43 57 41 3d 31 0d 0e AT+CCWA=1..

Needless to say, this was futile as well.

And then, out of the blue, the device requests to connect to AVDTP by choosing the service at address 0x0019.

> ACL Data RX: Handle 20 flags 0x02 dlen 12 #125 [hci0] 29.875346

L2CAP: Connection Request (0x02) ident 11 len 4

PSM: 25 (0x0019)

Source CID: 64

< ACL Data TX: Handle 20 flags 0x00 dlen 16 #126 [hci0] 29.875431

L2CAP: Connection Response (0x03) ident 11 len 8

Destination CID: 65

Source CID: 64

Result: Connection pending (0x0001)

Status: Authorization pending (0x0002)

< ACL Data TX: Handle 20 flags 0x00 dlen 16 #128 [hci0] 29.875677

L2CAP: Connection Response (0x03) ident 11 len 8

Destination CID: 65

Source CID: 64

Result: Connection successful (0x0000)

Status: No further information available (0x0000)

It’s not clear where the device got the idea to do this: As far as I can tell, the device wasn’t informed by the host about the existence of this service, at least not directly.

From this point, the negotiation goes well, and an audio device is set up. This allows selecting the headphones in the Sound Settings. Something that is done automatically with the old headphones and the junky earbuds.

So what is the problem? Maybe that the host doesn’t answer the AT commands. This is maybe solved in later versions of bluetoothd or Linux distributions in general. It’s quite possible that my distribution is too old for these newer headphones. Upgrade or perish.

Or go the other way: Downgrade. I suppose the solution would be to disable the Headset AG profile (0x1112), so that bluetoothd refuses to play ball when this is requested. This would speed up the fallback to A2DP, I hope, and possibly solve the problem.

I’ve tried hard to find a way to make bluetoothd refuse to the Headset AG profile, but in vain (so far?). sdptool has the option to disable a service, however it’s deprecated, and bluez 5 won’t talk with it. The updated method to tickle bluetoothd is through Dbus. Not sure if it has an API for turning off a service.

Unfortunately, I have no idea how to do this except for compiling bluetoothd from its sources and remove that option altogether. Actually, changing the UUID is enough to make it unusable.

I tried that, but it didn’t work all that well. More on that below.

Now to some extra stuff I randomly found out while working on this.

bluetootctl

This utility talks with bluetoothd through Dbus.

Doesn’t require root (when run from a terminal window on the computer’s desktop):

$ bluetoothctl

[NEW] Controller 9C:BB:CC:DD:EE:FF compname [default]

[NEW] Device 78:2E:D4:D9:62:C1 Y30

[NEW] Device 00:18:09:76:27:29 WH-CH500

[NEW] Device 30:53:C1:11:40:2D WH-CH510

Agent registered

[bluetooth]# show

Controller 9C:BB:CC:DD:EE:FF (public)

Name: compname

Alias: compname

Class: 0x001c0104

Powered: yes

Discoverable: yes

Pairable: yes

UUID: Headset AG (00001112-0000-1000-8000-00805f9b34fb)

UUID: Generic Attribute Profile (00001801-0000-1000-8000-00805f9b34fb)

UUID: A/V Remote Control (0000110e-0000-1000-8000-00805f9b34fb)

UUID: OBEX File Transfer (00001106-0000-1000-8000-00805f9b34fb)

UUID: Generic Access Profile (00001800-0000-1000-8000-00805f9b34fb)

UUID: OBEX Object Push (00001105-0000-1000-8000-00805f9b34fb)

UUID: PnP Information (00001200-0000-1000-8000-00805f9b34fb)

UUID: IrMC Sync (00001104-0000-1000-8000-00805f9b34fb)

UUID: A/V Remote Control Target (0000110c-0000-1000-8000-00805f9b34fb)

UUID: Audio Source (0000110a-0000-1000-8000-00805f9b34fb)

UUID: Audio Sink (0000110b-0000-1000-8000-00805f9b34fb)

UUID: Vendor specific (00005005-0000-1000-8000-0002ee000001)

UUID: Message Notification Se.. (00001133-0000-1000-8000-00805f9b34fb)

UUID: Phonebook Access Server (0000112f-0000-1000-8000-00805f9b34fb)

UUID: Message Access Server (00001132-0000-1000-8000-00805f9b34fb)

UUID: Headset (00001108-0000-1000-8000-00805f9b34fb)

Modalias: usb:v1D6Bp0246d0530

Discovering: no

This utility spits out a lot of information by itself when the daemon is restarted with e.g. “systemctl restart bluetooth”. There’s also output when a device is connected and disconnected.

An interesting feature of bluetootctl is the submenus, in particular “gatt” and “advertise”. Maybe the former allows deregistering UUIDs.

Try

[bluetooth]# menu gatt

and when done, go back to original menu:

[bluetooth]# back

btmgmt

btmgmt talks with the kernel directly through an AF_BLUETOOTH raw network socket. I considered this tool because it has the rm-uuid command, which is supposed to remove a UUID.

$ sudo btmgmt

[mgmt]# rm-uuid 00001112-0000-1000-8000-00805f9b34fb

Remove UUID succeeded. Class 0x1c0104

This is reverted when the bluetooth service is restarted. But it doesn’t seem to have any effect on the interface anyhow. The UUID keeps appearing in bluetoothctl’s “show” and the service is advertised and used. “clr-uuids” apparently removes all UUIDs, but this has no real effect.

It seems like the effective UUIDs are kept in bluetoothd. btmgmt changes the UUIDs in the kernel. See “Remove UUID Command” in mgmt-api.txt.

btmgmt also gets very active when bluetoothd is restarted and other events occur.

Talking with bluetoothd directly through DBus

See my notes on DBus on this post. Getting a property:

$ dbus-send --system --dest=org.bluez --print-reply /org/bluez/hci0 org.freedesktop.DBus.Properties.Get string:org.bluez.Adapter1 string:Address

method return time=1685778877.065819 sender=:1.4 -> destination=:1.4934 serial=82 reply_serial=2

variant string "9C:BB:CC:DD:EE:FF"

Let’s break this down. Bluetoothd’ Dbus API is published in its source’s doc/ subdirectory. The Address property of the adapter is documented in adapter-api.txt. So:

- –print-reply: We want the reply to this query, of course.

- –system: Bluetoothd is connected to the system DBus.

- –dest=org.bluez: Taken from “Service” in adapter-api.txt.

- /org/bluez/hci0: Taken from “Object path” in adapter-api.txt.

- org.freedesktop.DBus.Properties.Get: Every dbus-send command needs a method. In this case fetching a property’s value. So use the generic method for fetching a property.

- string:org.bluez.Adapter1: Taken from “Interface” in adapter-api.txt.

- string:Address: This is the name of the property, as listed in adapter-api.txt.

Likewise, I can fetch the UUIDs:

$ dbus-send --print-reply --system --dest=org.bluez /org/bluez/hci0 org.freedesktop.DBus.Properties.Get string:org.bluez.Adapter1 string:UUIDs

method return time=1685779625.693856 sender=:1.4 -> destination=:1.5017 serial=89 reply_serial=2

variant array [

string "00001112-0000-1000-8000-00805f9b34fb"

string "00001801-0000-1000-8000-00805f9b34fb"

string "0000110e-0000-1000-8000-00805f9b34fb"

string "00001106-0000-1000-8000-00805f9b34fb"

string "00001800-0000-1000-8000-00805f9b34fb"

string "00001105-0000-1000-8000-00805f9b34fb"

string "00001200-0000-1000-8000-00805f9b34fb"

string "0000110c-0000-1000-8000-00805f9b34fb"

string "00001104-0000-1000-8000-00805f9b34fb"

string "0000110a-0000-1000-8000-00805f9b34fb"

string "0000110b-0000-1000-8000-00805f9b34fb"

string "00005005-0000-1000-8000-0002ee000001"

string "00001133-0000-1000-8000-00805f9b34fb"

string "0000112f-0000-1000-8000-00805f9b34fb"

string "00001132-0000-1000-8000-00805f9b34fb"

string "00001108-0000-1000-8000-00805f9b34fb"

]

So this is how bluetoothctl got these values. Unfortunately, this property is read-only according to adapter-api.txt, so it can’t be manipulated with a Set method.

It’s of course possible to run methods that are published in bluetoothd’s DBus API, but I didn’t find anything related to disabling services.

Hacking the source

Download the sources for bluez 5.48-0ubuntu3.1 as bluez_5.48.orig.tar.xz (which is the version running on Mint 19).

In lib/sdp.h, change 0x1112 to 0xeb12. Same in lib/uuid.h and in src/profile.c.

Then in the source’s root directory, go:

$ ./configure && echo Success

and then just

$ make && echo Success

On my machine, there was a need to install libical, to make configure work, i.e.

$ sudo apt-get install libical-dev

And then replace /usr/lib/bluetooth/bluetoothd with the compiled version in src/. Keep a copy of the old executable, of course.

That didn’t work at all. The old UUID kept appearing in bluetoothctl’s output for “show”. There was a change, however: The WH-CH510 headphones refused to connect to the host, and reverted to pairing. At least I did something, I thought. But as it turned out, these headphones refused to connect to the host even after going back to the original bluetooth daemon (but had no problem with my cellphone). Y30 had no problems, as usual.

Resetting the headphone by pressing the power button and “-” button for 7 seconds didn’t help either. What eventually did the trick was to remove /usr/lib/bluetooth/bluetoothd, go “systemctl restart bluetooth”, which failed of course. Then return bluetoothd to its place, which worked, as expected. And then everything was back to normal again.

Extra reading

- A Debian wiki page with several interesting examples (in particular how to use dbus-send to talk with bluetoothd)

- This page contains a lot of information about using command line

- The list of tools that come with the bluez package

- Some information about configuration files.

Random jots

- The bluetooth service is defined in /lib/systemd/system/bluetooth.service, which runs /usr/lib/bluetooth/bluetoothd.

- It’s also possible to run the daemon directly, by typing “/usr/lib/bluetooth/bluetoothd” at shell prompt. The daemon runs in the foreground in this case. Add the “-n” flag to get the logging information on the console as well.

- bluetoothd stores persistent information (the list and information about already discovered devices in particular) in /var/lib/bluetooth. This directory can be removed

It’s possible to stop the Bluetooth daemon with

# systemctl stop bluetooth

however clicking on desktop’s bluetooth applet restarts it automatically, unless it is running by other means (e.g. started manually with a command).

The “bluetoothctl” command won’t do this, but rather wait for a connection. It’s actually OK to turn off the bluetooth deamon while this utility is running.

After upgrading bluetoothd as mentioned above, I noticed a lot of log messages of this sort every 16 seconds or so:

kernel: Bluetooth: hci0: last event is not cmd complete (0x0f)

But looking at old logs, it’s evident that these existed in the past as well. So they’re not related to my manipulations with the Bluetooth daemon. In fact, these messages appear while the Cinnamon Bluetooth applet’s utility window is displayed. Close this window, and the messages stop. This is a kernel bug that was fixed in kernel v5.0.

The nuisance

After trying to fix another problem with Pulseaudio, and messing up a bit with its configuration files, the option to control the volume by virtue of keyboard buttons suddenly stopped to work. The cursor would blink briefly when pressing the button, but nothing else happened.

I tried all the fairly normal things, including to restart the Pulseaudio daemon, to restart Cinnamon (with Alt-F2 + “r”), restart the session by changing the user (to the same user), disconnect and reconnect the (USB) keyboard, and a whole lot of other things.

It took me quite some time to fix this, so here’s what worked for me this time, along with some debug info.

The machine was a Linux Mint 19 with Cinnamon.

At an earlier stage, even the sound applet was gone from its desktop panel. To fix that, I right-clicked the panel, picked Troubleshoot > Looking Glass, and picked the Extension tab. There’s an applet listed called “Sound”. So I right-clicked it, and chose “Reload Code”. That got the applet back to the panel, and it worked flawlessly. But then I was stuck with the volume button problem.

The fix

What I actually did:

- Kill the process named csd-keyboard (probably irrelevant)

- Kill the process named csd-media-keys

- Restart pulseaudio with “pulseaudio -k”

This was the output of journalctl as a result of these:

May 31 12:17:03 cinnamon-session[2115]: WARNING: t+1132491.55749s: Application 'cinnamon-settings-daemon-keyboard.desktop' killed by signal 15

May 31 12:17:17 cinnamon-session[2115]: WARNING: t+1132505.78238s: Application 'cinnamon-settings-daemon-media-keys.desktop' killed by signal 15

May 31 12:17:17 rtkit-daemon[2284]: Successfully made thread 4304 of process 4304 (n/a) owned by '1010' high priority at nice level -11.

May 31 12:17:17 rtkit-daemon[2284]: Supervising 8 threads of 4 processes of 1 users.

May 31 12:17:17 pulseaudio[4304]: [pulseaudio] pid.c: Daemon already running.

May 31 12:18:04 bluetoothd[12009]: Endpoint unregistered: sender=:1.120210 path=/MediaEndpoint/A2DPSource

May 31 12:18:04 bluetoothd[12009]: Endpoint unregistered: sender=:1.120210 path=/MediaEndpoint/A2DPSink

May 31 12:18:04 rtkit-daemon[2284]: Successfully made thread 4345 of process 4345 (n/a) owned by '1010' high priority at nice level -11.

May 31 12:18:04 rtkit-daemon[2284]: Supervising 4 threads of 4 processes of 1 users.

May 31 12:18:04 pulseaudio[4345]: [pulseaudio] sink.c: Default and alternate sample rates are the same.

May 31 12:18:04 rtkit-daemon[2284]: Supervising 3 threads of 3 processes of 1 users.

May 31 12:18:04 rtkit-daemon[2284]: Successfully made thread 4347 of process 4345 (n/a) owned by '1010' RT at priority 5.

May 31 12:18:04 rtkit-daemon[2284]: Supervising 4 threads of 3 processes of 1 users.

May 31 12:18:04 rtkit-daemon[2284]: Supervising 4 threads of 3 processes of 1 users.

May 31 12:18:04 rtkit-daemon[2284]: Successfully made thread 4348 of process 4345 (n/a) owned by '1010' RT at priority 5.

May 31 12:18:04 rtkit-daemon[2284]: Supervising 5 threads of 3 processes of 1 users.

May 31 12:18:04 rtkit-daemon[2284]: Supervising 5 threads of 3 processes of 1 users.

May 31 12:18:04 rtkit-daemon[2284]: Successfully made thread 4349 of process 4345 (n/a) owned by '1010' RT at priority 5.

May 31 12:18:04 rtkit-daemon[2284]: Supervising 6 threads of 3 processes of 1 users.

May 31 12:18:05 rtkit-daemon[2284]: Supervising 6 threads of 3 processes of 1 users.

May 31 12:18:05 rtkit-daemon[2284]: Successfully made thread 4350 of process 4345 (n/a) owned by '1010' RT at priority 5.

May 31 12:18:05 rtkit-daemon[2284]: Supervising 7 threads of 3 processes of 1 users.

May 31 12:18:05 bluetoothd[12009]: Endpoint registered: sender=:1.120482 path=/MediaEndpoint/A2DPSource

May 31 12:18:05 bluetoothd[12009]: Endpoint registered: sender=:1.120482 path=/MediaEndpoint/A2DPSink

May 31 12:18:05 pulseaudio[4345]: [pulseaudio] backend-ofono.c: Failed to register as a handsfree audio agent with ofono: org.freedesktop.DBus.Error.ServiceUnknown: The name org.ofono was not provided by any .service files

May 31 12:18:05 rtkit-daemon[2284]: Successfully made thread 4352 of process 4352 (n/a) owned by '1010' high priority at nice level -11.

May 31 12:18:05 rtkit-daemon[2284]: Supervising 8 threads of 4 processes of 1 users.

May 31 12:18:05 pulseaudio[4352]: [pulseaudio] pid.c: Daemon already running.

May 31 12:18:58 gnome-keyring-daemon[2204]: asked to register item /org/freedesktop/secrets/collection/login/9, but it's already registered

I suppose that killing csd-media-keys was the part that really did the trick, because that caused a request to start Pulseaudio.

Why it worked (presumably)

The cinnamon-settings-daemon is the component responsible for passing the keyboard request to Pulseaudio. Apparently, messing up with Pulseaudio caused one of its components, csd-media-keys, to mess up as well. Killing it caused cinnamon-settings-daemon to restart it automatically. From fresh.

It’s fine to kill the csd-* processes. Nothing dramatic happens. The killed process is just restarted. But I haven’t tried to kill cinnamon-settings-daemon itself. I believe it will restart Cinnamon completely, something I wanted to avoid.

Dbus activity

I’ve already written a post mentioning how to monitor the Dbus. So here’s a different take on the same topic.

The difference is quite evident when running dbus-monitor (not as root) as follows in order to monitor the activity for the current session:

$ dbus-monitor

This is the output as a result of pressing a volume up button, when it works correctly (i.e. after fixing the problem):

method call time=1685525392.173838 sender=:1.6531 -> destination=:1.6616 serial=1431 path=/org/cinnamon/SettingsDaemon/KeybindingHandler; interface=org.cinnamon.SettingsDaemon.KeybindingHandler; member=HandleKeybinding

uint32 2

method call time=1685525392.174967 sender=:1.6616 -> destination=org.freedesktop.DBus serial=566 path=/org/freedesktop/DBus; interface=org.freedesktop.DBus; member=AddMatch

string "type='signal',interface='ca.desrt.dconf.Writer',path='/ca/desrt/dconf/Writer/user',arg0path='/org/cinnamon/desktop/sound/'"

method return time=1685525392.175012 sender=org.freedesktop.DBus -> destination=:1.6616 serial=382 reply_serial=566

method call time=1685525392.175026 sender=:1.6616 -> destination=:1.6531 serial=567 path=/org/Cinnamon; interface=org.Cinnamon; member=ShowOSD

array [

dict entry(

string "icon"

variant string "audio-volume-medium-symbolic"

)

dict entry(

string "level"

variant int32 34

)

]

method call time=1685525392.175170 sender=:1.6616 -> destination=org.freedesktop.DBus serial=568 path=/org/freedesktop/DBus; interface=org.freedesktop.DBus; member=RemoveMatch

string "type='signal',interface='ca.desrt.dconf.Writer',path='/ca/desrt/dconf/Writer/user',arg0path='/org/cinnamon/desktop/sound/'"

method return time=1685525392.175189 sender=org.freedesktop.DBus -> destination=:1.6616 serial=383 reply_serial=568

method call time=1685525392.175198 sender=:1.6616 -> destination=org.freedesktop.DBus serial=569 path=/org/freedesktop/DBus; interface=org.freedesktop.DBus; member=AddMatch

string "type='signal',interface='ca.desrt.dconf.Writer',path='/ca/desrt/dconf/Writer/user',arg0path='/org/cinnamon/desktop/sound/'"

method return time=1685525392.175216 sender=org.freedesktop.DBus -> destination=:1.6616 serial=384 reply_serial=569

method call time=1685525392.176330 sender=:1.6618 -> destination=org.freedesktop.DBus serial=26 path=/org/freedesktop/DBus; interface=org.freedesktop.DBus; member=RequestName

string "org.freedesktop.ReserveDevice1.Audio2"

uint32 5

signal time=1685525392.176383 sender=org.freedesktop.DBus -> destination=(null destination) serial=50 path=/org/freedesktop/DBus; interface=org.freedesktop.DBus; member=NameOwnerChanged

string "org.freedesktop.ReserveDevice1.Audio2"

string ""

string ":1.6618"

signal time=1685525392.176411 sender=org.freedesktop.DBus -> destination=:1.6618 serial=51 path=/org/freedesktop/DBus; interface=org.freedesktop.DBus; member=NameAcquired

string "org.freedesktop.ReserveDevice1.Audio2"

method return time=1685525392.176427 sender=org.freedesktop.DBus -> destination=:1.6618 serial=52 reply_serial=26

uint32 1

method return time=1685525392.184853 sender=:1.6616 -> destination=:1.6531 serial=570 reply_serial=1431

method call time=1685525392.184915 sender=:1.6616 -> destination=org.freedesktop.DBus serial=571 path=/org/freedesktop/DBus; interface=org.freedesktop.DBus; member=RemoveMatch

string "type='signal',interface='ca.desrt.dconf.Writer',path='/ca/desrt/dconf/Writer/user',arg0path='/org/cinnamon/desktop/sound/'"

method return time=1685525392.184937 sender=org.freedesktop.DBus -> destination=:1.6616 serial=385 reply_serial=571

method return time=1685525392.190757 sender=:1.6531 -> destination=:1.6616 serial=1432 reply_serial=567

Unfortunately, I didn’t save the output before the fix. But it was exactly two rows of output. As far as I recall, the first and last row from this dump were present when it didn’t work (i.e. the row involving KeybindingHandler’s request, and the return method that closed it).

The bus addresses can be listed with the following command:

$ busctl --user

In this specific session the output was:

NAME PID PROCESS USER CONNECTION UNIT SESSION DESCRIPTION

:1.0 2103 systemd eli :1.0 user@1010.service - -

:1.1014 45251 pxgsettings eli :1.1014 session-c1.scope c1 -

:1.1015 45277 scp-dbus-servic eli :1.1015 user@1010.service - -

:1.1016 45277 scp-dbus-servic eli :1.1016 user@1010.service - -

:1.12 2213 csd-a11y-keyboa eli :1.12 session-c1.scope c1 -

:1.1234 53331 nemo eli :1.1234 session-c1.scope c1 -

:1.13 2221 csd-a11y-settin eli :1.13 session-c1.scope c1 -

:1.131 16564 klauncher eli :1.131 session-c1.scope c1 -

:1.16 2232 csd-cursor eli :1.16 session-c1.scope c1 -

:1.17 2225 csd-color eli :1.17 session-c1.scope c1 -

:1.18 2238 csd-clipboard eli :1.18 session-c1.scope c1 -

:1.19 2242 csd-orientation eli :1.19 session-c1.scope c1 -

:1.20 2233 csd-wacom eli :1.20 session-c1.scope c1 -

:1.21 2245 csd-xsettings eli :1.21 session-c1.scope c1 -

:1.22 2248 csd-mouse eli :1.22 session-c1.scope c1 -

:1.24 2256 csd-print-notif eli :1.24 session-c1.scope c1 -

:1.26 2258 csd-background eli :1.26 session-c1.scope c1 -

:1.27 2260 gvfsd eli :1.27 user@1010.service - -

:1.28 2267 csd-housekeepin eli :1.28 session-c1.scope c1 -

:1.29 2274 csd-screensaver eli :1.29 session-c1.scope c1 -

:1.30 2270 csd-power eli :1.30 session-c1.scope c1 -

:1.31 2299 dconf-service eli :1.31 user@1010.service - -

:1.32 2285 csd-automount eli :1.32 session-c1.scope c1 -

:1.329 22590 xreader eli :1.329 session-c1.scope c1 -

:1.33 2306 gvfsd-fuse eli :1.33 user@1010.service - -

:1.330 22596 xreaderd eli :1.330 user@1010.service - -

:1.332 22606 WebKitNetworkPr eli :1.332 session-c1.scope c1 -

:1.35 2313 csd-printer eli :1.35 session-c1.scope c1 -

:1.37 2349 gvfs-udisks2-vo eli :1.37 user@1010.service - -

:1.38 2384 gvfs-gphoto2-vo eli :1.38 user@1010.service - -

:1.39 2277 csd-xrandr eli :1.39 session-c1.scope c1 -

:1.40 2410 gvfs-goa-volume eli :1.40 user@1010.service - -

:1.41 2414 goa-daemon eli :1.41 user@1010.service - -

:1.42 2427 goa-identity-se eli :1.42 user@1010.service - -

:1.43 2432 gvfs-mtp-volume eli :1.43 user@1010.service - -

:1.44 2436 gvfs-afc-volume eli :1.44 user@1010.service - -

:1.448 31116 gvfsd-http eli :1.448 user@1010.service - -

:1.457 31367 gvfsd-network eli :1.457 user@1010.service - -

:1.46 2472 polkit-gnome-au eli :1.46 session-c1.scope c1 -

:1.463 31401 gvfsd-dnssd eli :1.463 user@1010.service - -

:1.47 2481 cinnamon-killer eli :1.47 session-c1.scope c1 -

:1.48 2478 nemo-desktop eli :1.48 session-c1.scope c1 -

:1.49 2480 nm-applet eli :1.49 session-c1.scope c1 -

:1.5 2115 cinnamon-sessio eli :1.5 session-c1.scope c1 -

:1.50 2474 blueberry-obex- eli :1.50 session-c1.scope c1 -

:1.51 2474 blueberry-obex- eli :1.51 session-c1.scope c1 -

:1.52 2499 obexd eli :1.52 user@1010.service - -

:1.54 2506 gvfsd-trash eli :1.54 user@1010.service - -

:1.55 2512 gvfsd-metadata eli :1.55 user@1010.service - -

:1.56 2535 python2 eli :1.56 session-c1.scope c1 -

:1.5653 62813 chrome eli :1.5653 session-c1.scope c1 -

:1.5654 62813 chrome eli :1.5654 session-c1.scope c1 -

:1.5655 62813 chrome eli :1.5655 session-c1.scope c1 -

:1.5656 62813 chrome eli :1.5656 session-c1.scope c1 -

:1.5661 62813 chrome eli :1.5661 session-c1.scope c1 -

:1.57 2534 cinnamon-screen eli :1.57 session-c1.scope c1 -

:1.58 2574 gnome-terminal eli :1.58 session-c1.scope c1 -

:1.5834 52878 xreader eli :1.5834 session-c1.scope c1 -

:1.5836 52891 WebKitNetworkPr eli :1.5836 session-c1.scope c1 -

:1.5842 54509 xreader eli :1.5842 session-c1.scope c1 -

:1.5843 54522 WebKitNetworkPr eli :1.5843 session-c1.scope c1 -

:1.59 2574 gnome-terminal eli :1.59 session-c1.scope c1 -

:1.6 2115 cinnamon-sessio eli :1.6 session-c1.scope c1 -

:1.60 2579 gconfd-2 eli :1.60 user@1010.service - -

:1.61 2574 gnome-terminal eli :1.61 session-c1.scope c1 -

:1.6105 47745 xreader eli :1.6105 session-c1.scope c1 -

:1.6107 47757 WebKitNetworkPr eli :1.6107 session-c1.scope c1 -

:1.6477 31538 thunderbird-bin eli :1.6477 session-c1.scope c1 -

:1.6478 31538 thunderbird-bin eli :1.6478 session-c1.scope c1 -

:1.6531 2451 cinnamon eli :1.6531 session-c1.scope c1 -

:1.6562 69100 csd-sound eli :1.6562 session-c1.scope c1 -

:1.6592 1641 firefox-bin eli :1.6592 session-c1.scope c1 -

:1.6593 1641 firefox-bin eli :1.6593 session-c1.scope c1 -

:1.6594 1700 Web Content eli :1.6594 session-c1.scope c1 -

:1.6595 1760 WebExtensions eli :1.6595 session-c1.scope c1 -

:1.6596 1824 Web Content eli :1.6596 session-c1.scope c1 -

:1.6597 1876 Web Content eli :1.6597 session-c1.scope c1 -

:1.6598 1975 cinnamon-settin eli :1.6598 session-c1.scope c1 -

:1.6599 1975 cinnamon-settin eli :1.6599 session-c1.scope c1 -

:1.6608 2640 Web Content eli :1.6608 session-c1.scope c1 -

:1.6609 3399 Web Content eli :1.6609 session-c1.scope c1 -

:1.6615 4286 csd-keyboard eli :1.6615 session-c1.scope c1 -

:1.6616 4296 csd-media-keys eli :1.6616 session-c1.scope c1 -

:1.6617 4296 csd-media-keys eli :1.6617 session-c1.scope c1 -

:1.6618 4345 pulseaudio eli :1.6618 session-c1.scope c1 -

:1.6621 4692 xed eli :1.6621 session-c1.scope c1 -

:1.6625 5225 busctl eli :1.6625 session-c1.scope c1 -

:1.67 2860 applet.py eli :1.67 session-c1.scope c1 -

:1.7 2189 at-spi-bus-laun eli :1.7 user@1010.service - -

:1.73 3537 gnome-system-mo eli :1.73 session-c1.scope c1 -

:1.8 2196 at-spi2-registr eli :1.8 user@1010.service - -

:1.9 2204 gnome-keyring-d eli :1.9 session-c1.scope c1 -

:1.92 8884 xdg-desktop-por eli :1.92 user@1010.service - -

:1.93 8888 xdg-document-po eli :1.93 user@1010.service - -

:1.94 8891 xdg-permission- eli :1.94 user@1010.service - -

:1.95 8902 pxgsettings eli :1.95 user@1010.service - -

:1.96 8906 xdg-desktop-por eli :1.96 user@1010.service - -

ca.desrt.dconf 2299 dconf-service eli :1.31 user@1010.service - -

ca.desrt.dconf-editor - - - (activatable) - -

org.Cinnamon 2451 cinnamon eli :1.6531 session-c1.scope c1 -

org.Cinnamon.HotplugSniffer - - - (activatable) - -

org.Cinnamon.LookingGlass 2451 cinnamon eli :1.6531 session-c1.scope c1 -

org.Cinnamon.Melange - - - (activatable) - -

org.Cinnamon.Slideshow - - - (activatable) - -

org.Nemo 53331 nemo eli :1.1234 session-c1.scope c1 -

org.NemoDesktop 2478 nemo-desktop eli :1.48 session-c1.scope c1 -

org.PulseAudio1 4345 pulseaudio eli :1.6618 session-c1.scope c1 -

org.a11y.Bus 2189 at-spi-bus-laun eli :1.7 user@1010.service - -

org.bluez.obex 2499 obexd eli :1.52 user@1010.service - -

org.cinnamon.ScreenSaver 2534 cinnamon-screen eli :1.57 session-c1.scope c1 -

org.cinnamon.SettingsDaemon.KeybindingHandler 4296 csd-media-keys eli :1.6616 session-c1.scope c1 -

So it’s quite clear that the players here were cinnamon’s main socket, csd-media-keys and pulseaudio.

Overview

This is a collection of insights regarding lead-acid batteries, in particular those sealed batteries that are used in UPSes. Had it not been for my failed attempts to get a decent battery for my UPS, none of this would have been written. It was only when I asked myself why the UPS doesn’t maintain power nearly as long as I expected that I started to learn more about these batteries.

Batteries is far away from anything I do for a living. This is definitely not my expertise. Please keep that in mind. But as it turns out, there’s a lot to know about them.

Well, I think I’ll take that back: After a lot of playing with these batteries, my conclusion is that knowledge doesn’t help much. Run a test, see how long the battery holds up your computer, and that’s it. There doesn’t seem to be anything smarter to do.

There’s probably only one important thing to know about them: They should be fresh. That’s hardly new, and yet, that’s probably the bottom line. Lead-acid batteries discharge by themselves over time, and when they reach a certain level of discharge, they get damaged. Also, the discharge rate depends a lot on temperature. So a battery that has been stored in a hot place somewhere in a distant country for over a year is probably no good candidate for your UPS. The manufacturer’s timestamp is everything: It starts to seem to me that most batteries were more or less the same on the day that they left the factory. The difference is how much damage they caught during storage.

For a concise technical background, I recommend reading Power Sonic’s Technical Manual.

So without further ado, let’s go through my findings, in more or less random order.

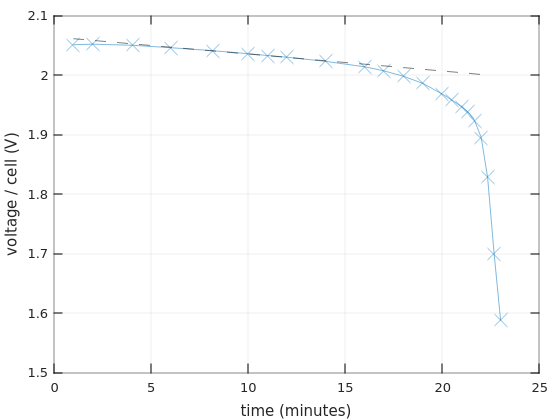

A 7AH battery doesn’t really give 7AH

With lead-acid batteries, 7AH doesn’t meas 7A for 1 hour. Or 3.5A for 2 hours. Or any other combination, except for 0.35A for 20 hours. More about that below.

The amount of charge (and energy) that a lead-acid battery supplies until it’s discharged depends dramatically on the discharging current. The capacity printed on the battery is given for a 20-hours discharge, or using the jargon, 0.05C. That “C” is 7 taken from the 7AH figure, so 7AH are obtained if the discharge current is 0.35A. For larger currents, expect much less energy out of the battery.

This phenomenon is approximated by Peukert’s Law.

For example, my specific case: The load is 70W (at 142VA) according to the UPS itself. I’ll assume that the low power factor thing can be ignored, i.e. that the fact that the VA figure is twice the consumed power makes no difference. This low power factor is natural to switching power supplies, as they draw more current when the voltage is low, so their behavior is far from a plain resistor (unless specifically compensated to mitigate this effect). I’ll also assume that the UPS is 100% efficient on its voltage conversion, which is complete rubbish, but for the heck of it.

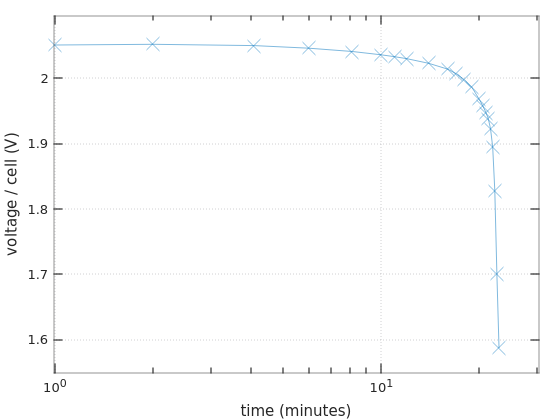

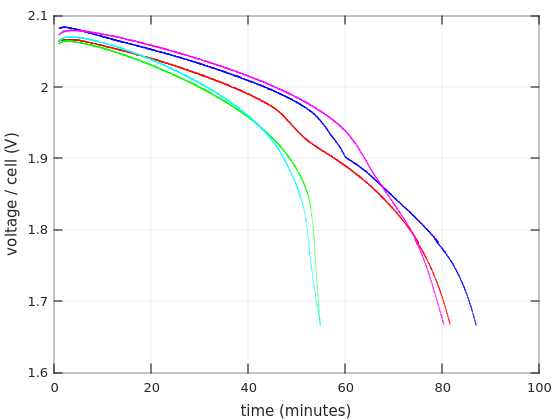

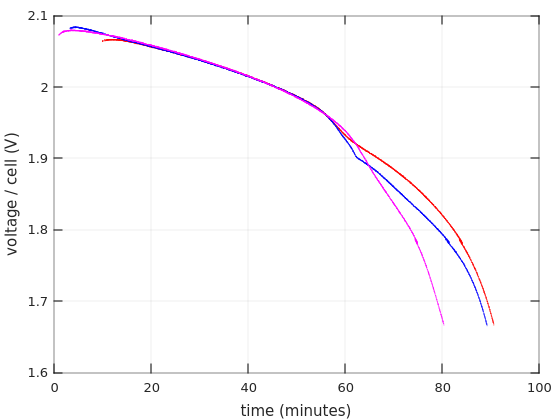

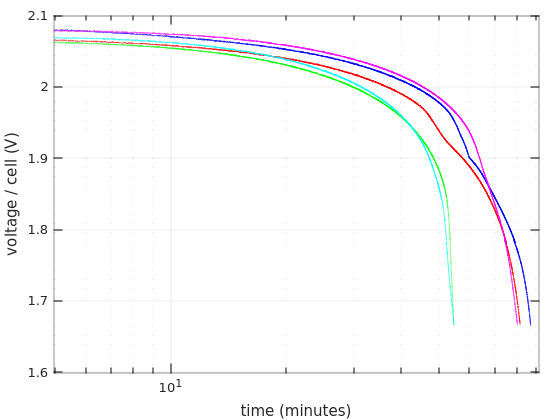

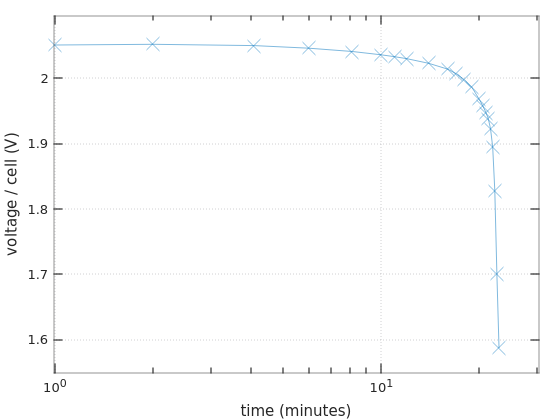

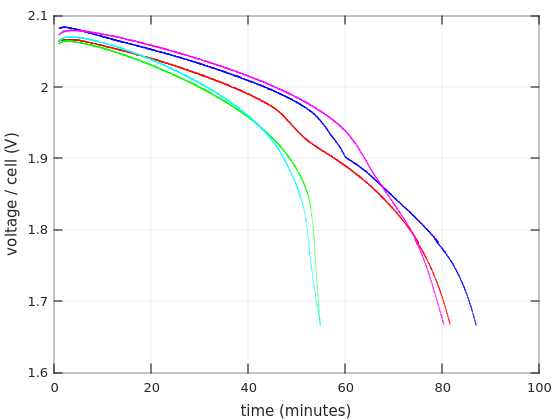

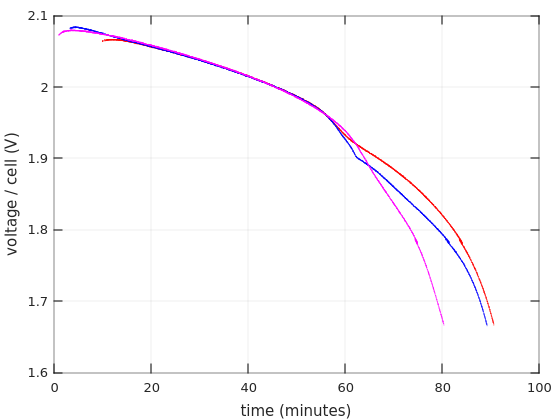

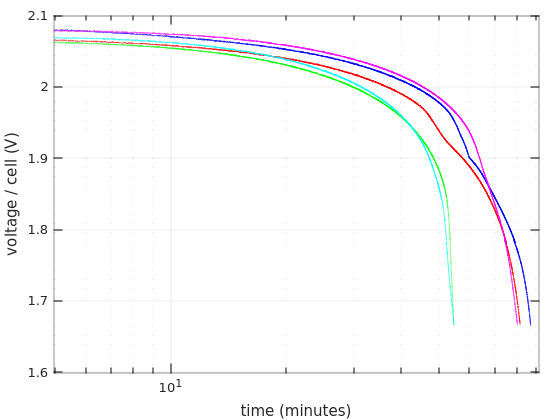

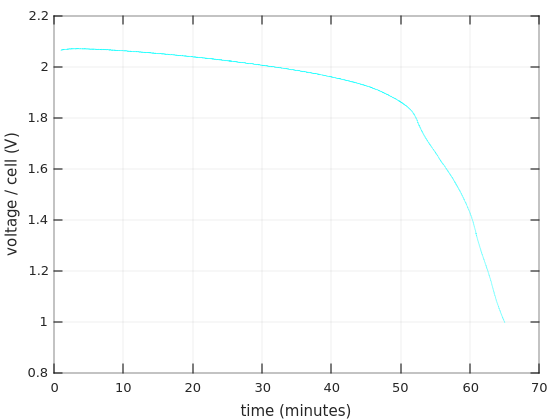

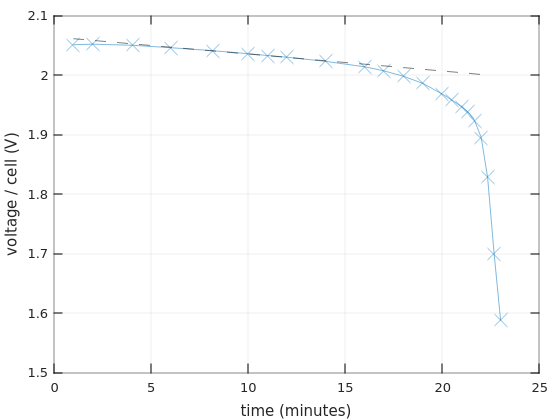

So for two 12V batteries in series it goes 70W/24V =~ 2.9A, which is about 0.4C (2.9 / 7 =~ 0.4). A ballpark figure can be taken from Figure 4 in Power Sonic’s Technical Manual, showing that the voltage starts to drop after about an hour, and reaches the critical value somewhere after an hour and a half. Note that I have different batteries.

Also from Table 2 of the same Manual, we have that the actual capacity of a 7AH battery, when drained with a 4.34A current, is 4.34AH (one hour). The current is higher than 2.9A, but given that the UPS isn’t really 100% efficient, it’s likely that the real discharge current is closer to 4A than to 2.9A. So that explains why the UPS said 1:12 hours when I updated the battery replacement date.

Many battery manufacturers supply datasheets with Discharge Characteristics Curves. Even better, if there are tables with Constant Current Discharge Characteristics (also referred to as F.V / Time specification). See Ritar’s datasheet for RT1270, for example. The numbers inside this table are the constant currents for each discharging scenario. Each row is the final voltage (F.V) per cell that is reached in this scenario, and each column represents the time it takes to reach this voltage.

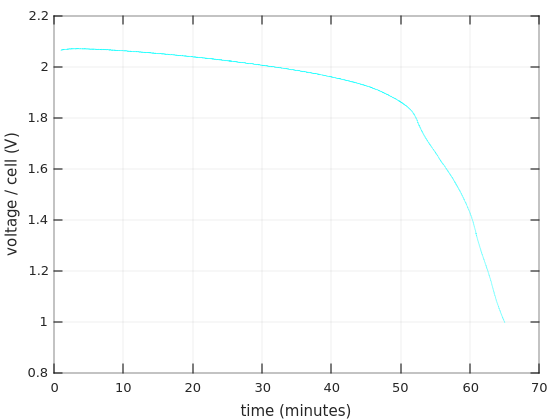

It’s not clear at which voltage the UPS decides to stop discharging. Even though 1.60V per cell seems to be a recurring number in datasheets for the game over. And on the other hand, I’ve repeatedly seen 0.35A (i.e. 0.05C) paired with 1.75V and 20 hours discharge in those F.V / Time tables. So it’s not really clear. Anyhow, finding a number that is similar to the intended current in the table can give an idea on how long the battery is supposed to last.

Obviously, different batteries behave differently on higher currents. I couldn’t find data on my “Bulls Power” batteries. So maybe they could meet the 7AH specification for a 20-hours discharge, and then perform really poorly with higher, real-life currents. No way to know for sure.

So what is the state of the battery after it has been discharged with less than 7AH? It’s still charged, as a matter of fact. A rapid discharge, which yields less than the full capacity is healthy for the battery. It’s when the full capacity is utilized with a slow discharge pattern that the battery can be damaged if it stays in that state for a long while.

Charging a lead-acid battery

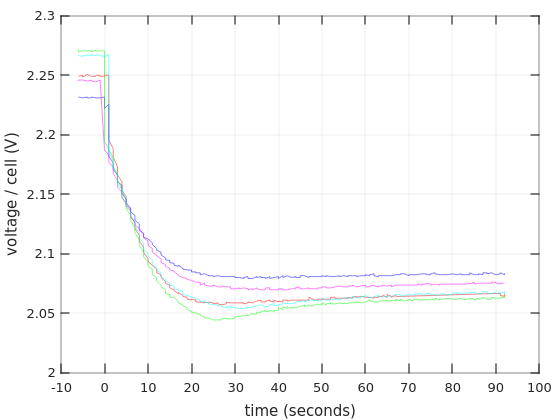

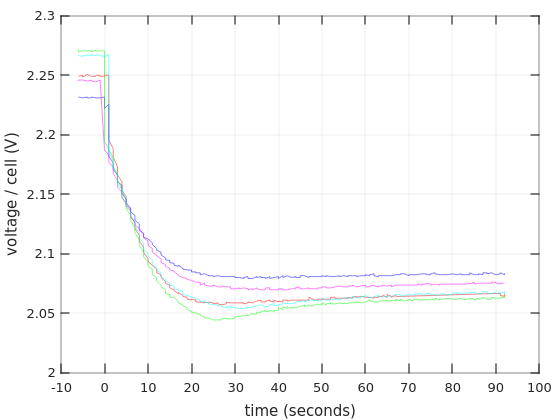

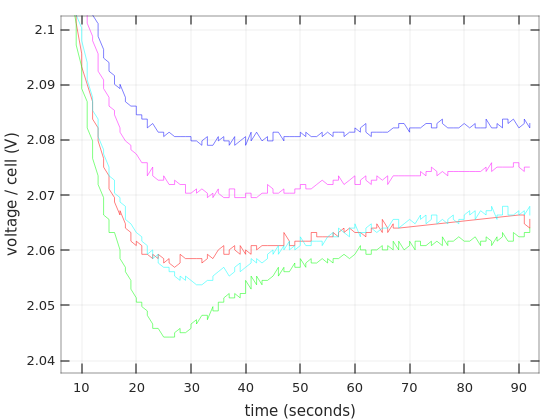

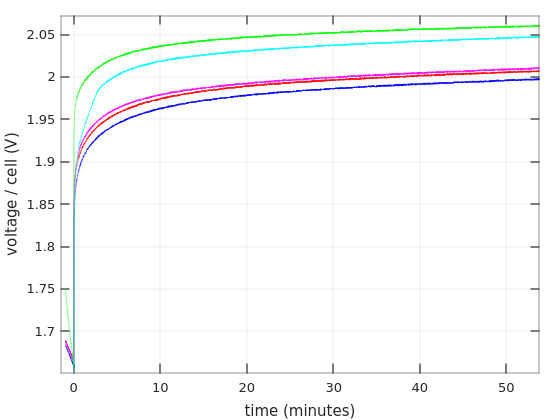

There are in fact several ways to charge a lead acid battery (for example, this), but the common way to charge a battery is to feed it with a current of 0.3C (2.1A for a 7AH battery) until the voltage rises to above a threshold. Or to set a constant voltage (with a current limit) and charge until the current goes below a certain level. Then comes the second phase, which is the slow charging to 100%.

When the battery is full, a floating voltage should be applied to the battery. Each battery specifies its own preferred floating voltage, but they all land at around 13.5-13.8V. This is the correct way to maintain a fully charged battery: A small charging current compensates for the battery’s inherent discharge (typically around 0.001C, i.e. 7 mA for a 7AH battery), and the battery remains fully charged in a way that is best for its health.

Battery manufacturers usually state something like 10% discharge for 90 days or so. This corresponds to about 0.000046C, or ~0.3 mA for a 7AH battery. But in this scenario, the battery discharges against its own voltage, and not the elevated floating voltage (does it matter?).

Knowing the battery’s charge level

How does one estimate how much energy a lead-acid battery has? The answer is unpleasant, yet simple: There is really no way to measure it from the battery electronically. After reading quite some material on the subject, that became evident to me: There are plenty of papers describing exotic algorithms for estimating a battery’s health and charge level, and their abundance and variety proves that there’s really no way to tell, except for draining it.

Maybe the first question should have been: What is consumed when the battery gets discharged? For one, sulfuric acid reacts with the lead plates and become lead sulfate. When there’s no acid left, the battery is done. On the other hand, the lead plates themselves might deteriorate in the process. This can happen relatively early if the lead plates are partly damaged because of previous use (or abuse).

There is one way to measure the charge level that is considered reliable, which is measuring the open circuit voltage (OCV) after the battery has been disconnected for a while (some say a few hours, battery manufacturers typically require 24 hours). Letting the battery rest allows it to reach a chemical equilibrium, at which point the voltage reflects its charge level. This is surely true for a fresh battery, because the OCV reflects the specific gravity of the electrolyte, that is the concentration of the sulfuric acid.

As for batteries with some history, the picture is less clear, and I haven’t managed to figure out if the OCV voltages remain the same, and if the voltage vs. charge percentage relate to the original charge capacity, or the one that is available after the battery is worn out. The OCV measurementt covers the charge level in the sense of how much acid there’s left to consume, but will the lead plates hold that long?

For example, Power Sonic claims that the OVC goes from 1.94V/cell to 2.16V/cell for 0% to 100% charge respectively. As a 12-volt battery has 6 cells, this corresponds to 11.64V to 12.96V. These figures are quite similar to those presented by another manufacturer.

But what does 100% charge mean? 7AH or as much as is left when the battery has worked for some time? My anecdotal measurement of the batteries I took out from the UPS was 12.99V after letting them rest. In other words, they presented a OCV voltage corresponding to 100% charge, even though they had much less than 7AH.

So how does a UPS estimate the remaining runtime? Well, the simple way is to let the battery run out once, and there you have a number. Clearly, Smart UPS uses this method.

Are there any alternatives? In theory, the UPS could let the battery rest for 24 hours, and measure its OCV. This is possible, because most of the time the UPS doesn’t need the battery. But even my anecdotal measurement shows that a 100% charge-like reading doesn’t mean much.

For other types of batteries (Li-ion in particular), measuring the current on the battery, in and out (Coulomb Counting), gives an idea on how much charge it contains. This doesn’t work with lead acid batteries, because the recommended way to maintain a standby battery, is to continuously float charge it. That means holding a constant voltage (say, 2.25V per cell, that is 13.5V for a 12V battery, or 27V on a battery pair, as in SmartUPS 750).

As this voltage is higher than the OCV at rest, this causes a small trickle current (said to be about 0.001C), which compensates for the battery’s self discharge. Even if it overcharges the battery slightly, the gases that are released are recycled internally in a sealed battery, so there’s no damage.