I had a set of SRT files with pretty good subtitles, but with one annoying problem: When there was a song in the background, the translation of the song would pop up and interrupt the dialogue’s subtitles, so it became impossible to understand what was going on.

Luckily, those song-translating subtitles all had a “{\a6}” string, which is an ASS tag meaning that the text should be shown at the top of the picture. mplayer ignores these tags, so subtitles that make sense with a player that honors the tag mess things up for me. So the simple solution is to remove these entries.

Why don’t I use VLC instead? Mainly because I’m used to mplayer, and I’m under the impression that mplayer gives much better and easier control of low-level issues such as adjusting the subtitles’ timing. But also because of the ability to run it with a lot of parameters from the command line, and to jump back and forth in the displayed video, in particular with a keyboard remote control. But maybe it’s just a matter of habit.

Here’s a Perl script that reads an SRT file and removes all entries containing this string. It also fixes the numbering of the entries to make up for those that have been removed. Fun fact: The entries don’t need to appear in chronological order. In fact, most of the annoying subtitles appeared at the end of the file, even though they messed up things everywhere.

This can be a boilerplate for other needs as well, of course.

use warnings;
use strict;

my $fname = shift;

die("Usage: $0 subtitles.srt\n")
  unless (defined $fname);

my $data = readfile($fname);

my ($name, $ext) = ($fname =~ /^(.*)\.(.*)$/);

die("No extension in file name \"$fname\"\n")
  unless (defined $name);

# A line break, tolerating CR characters around it (DOS line endings)
my $nl = qr/\r*\n\r*/;

# A single SRT entry: its index number, followed by everything up to
# an empty line (or the end of the data)
my $tregex = qr/(?:\d+$nl.*?(?:$nl$nl|$))/s;

my ($pre, $chunk, $post) = ($data =~ /^(.*?)($tregex*)(.*)$/);

die("Input file doesn't look like an SRT file\n")
  unless (defined $chunk);

my $lpre = length($pre);
my $lpost = length($post);

print "Warning: Passing through $lpre bytes at beginning of file untouched\n"
  if ($lpre);

print "Warning: Passing through $lpost bytes at end of file untouched\n"
  if ($lpost);

my @items = ($chunk =~ /($tregex)/g);

my @outitems;
my $removed = 0;
my $counter = 1;

foreach my $i (@items) {
  if ($i =~ /\\a6/) {
    # Entry with the {\a6} tag (top of picture): drop it
    $removed++;
  } else {
    # Renumber the entry: its first number is the index
    $i =~ s/\d+/$counter/;
    $counter++;
    push @outitems, $i;
  }
}

print "Removed $removed subtitle entries from $fname\n";

writefile("$name-clean.$ext", join("", $pre, @outitems, $post));

exit(0);

sub writefile {
  my ($fname, $data) = @_;

  open(my $out, ">:utf8", $fname)
    or die "Can't open \"$fname\" for write: $!\n";

  print $out $data;
  close $out;
}

sub readfile {
  my ($fname) = @_;
  local $/;

  open(my $in, "<:utf8", $fname)
    or die "Can't open $fname for read: $!\n";

  my $input = <$in>;
  close $in;

  return $input;
}

Introduction

This post is a spin-off from another post of mine. It’s the result of my wish to limit the battery’s charge level, so it doesn’t turn into a balloon again.

I’ve written this post in chronological order (i.e. in the order that I found out things). If you’re here for the “what do I do”, just jump to “The Google pixel way”.

I assume that you’re fine with adb and that your Pixel phone is rooted.

Failed attempts to use an app

I installed Charge Control (version 3.5), which appears to be the only app of this sort available for my phone on Google Play. It’s a bit scary to install an app with root access, but without root it can’t possibly work. It’s a nice app, with only one drawback: It doesn’t work, at least not on my phone. The phone nevertheless went on charging up to 100%, despite the (annoying) notification that charging is disabled. I also tried turning off the “Adaptive Battery” and “Adaptive Charging” features, but that made no difference. So I gave this app the boot.

I first wanted the Battery Charge Limit app from Google Play. This app is announced at XDA developers (which is a good sign, if 2.9k reviews isn’t good enough), and has a long trace of versions. Even better, this app’s source is published on Github, which is how I found out that it hasn’t been updated since 2020. It was kicked off in 2017, according to the same repo. Unfortunately, as this app isn’t maintained, it doesn’t support recent phones. Not mine, for sure.

The Google pixel way

Based upon this post in the XDA forums, I found the way to actually limit the charging level. It relies on this kernel commit on a driver that is specific to Google devices. This driver, google_charger.c, is not part of the kernel itself, but is included as an external kernel module. I’m therefore not sure exactly which version of this driver is used on my phone. However, as I’ve identified the kernel as slightly before “12.0.0 r0.36”, I suppose the driver is from more or less the same time. It can be found in the msm git repo as drivers/power/supply/google/google_charger.c:

git clone https://android.googlesource.com/kernel/msm

Now to some hands-on: I do this directly with adb, as root (more about adb on my other post):

# cd /sys/devices/platform/google,charger
# ls -F
bd_clear             bd_resume_temp   bd_trigger_voltage  of_node@
bd_drainto_soc       bd_resume_time   charge_start_level  power/
bd_recharge_soc      bd_temp_dry_run  charge_stop_level   subsystem@
bd_recharge_voltage  bd_temp_enable   driver@             uevent
bd_resume_abs_temp   bd_trigger_temp  driver_override
bd_resume_soc        bd_trigger_time  modalias
# cat charge_start_level
0
# cat charge_stop_level
100

This sysfs directory belongs to the google_charger kernel module (i.e. it’s listed by lsmod).

As one would expect, charging is disabled when the battery’s level equals or is above charge_stop_level, and resumes when the battery level equals or is below charge_start_level. The driver ignores both values if they are 0 and 100 respectively (the defaults), or else a full battery would drop to 0% before charging resumed.

The important point is: If you change charge_stop_level, don’t leave charge_start_level at zero, or the battery will be emptied.
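To make the start/stop behavior concrete, here is a minimal C model of it, as I understand it from watching the phone. This is a hypothetical sketch, not code from google_charger.c: struct charge_limits, custom_limits_enabled() and update_charging() are made-up names, and the real driver does a lot more than this.

```c
#include <stdbool.h>

/* Hypothetical model of charge_start_level / charge_stop_level, based on
   the observed behavior. NOT the actual google_charger.c code. */
struct charge_limits {
    int start_level;  /* charge_start_level, percent */
    int stop_level;   /* charge_stop_level, percent */
    bool charging;    /* charging state, kept between updates */
};

/* The defaults (0 and 100) mean that no custom limits are set */
bool custom_limits_enabled(const struct charge_limits *l)
{
    return !(l->start_level == 0 && l->stop_level == 100);
}

/* Updates and returns the charging state for the given capacity (%) */
bool update_charging(struct charge_limits *l, int capacity)
{
    if (!custom_limits_enabled(l))
        l->charging = true;    /* this mechanism doesn't block charging */
    else if (capacity >= l->stop_level)
        l->charging = false;   /* at or above the stop level */
    else if (capacity <= l->start_level)
        l->charging = true;    /* at or below the start level */
    /* Otherwise (between the levels), keep the previous state */
    return l->charging;
}
```

With 70 and 80, a phone that is charging at 75% goes on up to 80%, and then stays off until the level drops to 70%. With charge_start_level left at 0 and charge_stop_level set to 80, the model never turns charging back on above 0%, which is the trap mentioned above.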

For my own purposes, I went for charge levels between 70% and 80%:

# echo 70 > charge_start_level
# echo 80 > charge_stop_level

When charging is disabled this way, the phone clearly gets confused about the situation, and the information on the screen is misleading.

What actually happens is that if the charging level is above the stop level (80% in my case), the phone will discharge the battery, as if the power supply isn’t connected at all.

When the level reaches the stop level from above, the phone behaves as if the power supply was just plugged in (with a graphic animation and sound), and then alternates between showing “charging slowly/rapidly” and just showing the percentage. But it doesn’t charge the battery. Judging by the very slow discharge rate, the external power runs the phone, and the battery is just left on its own. Which is ideal: It’s equivalent to keeping the battery in storage at its ideal charge level.

So the charging level will not oscillate all the time. Rather, it will more or less dwell at a random level between 70% and 80% each time, with a very slow descent.

When disconnecting the phone from external power, the phone works on battery of course, and will discharge. If it reaches below 70%, it will go up to 80% on the next opportunity to charge. If it doesn’t reach that level, it sometimes remains where it was after connection to external power, and sometimes it goes up to 80% anyhow. Go figure.

The “Ampere” app that I use to get info about the battery gets confused as well, saying “Not charging” most of the time, but often contradicts itself. I don’t blame it.

As for the regular Android settings in relation to the battery: Battery saver off, Adaptive Battery off and Adaptive Charging off. Not that I think these matter.

The relevant driver emits messages to the kernel log, so it looked like this when the charging level was 66% and I set charge_stop_level to 67:

# dmesg | grep google_charger
[ ... ]
[14866.762578] google_charger: MSC_CHG lowerbd=0, upperbd=67, capacity=66, charging on
[14866.771714] google_charger: usbchg=USB typec=null usbv=4725 usbc=882 usbMv=5000 usbMc=900
[14896.794038] google_charger: MSC_CHG lowerbd=0, upperbd=67, capacity=66, charging on
[14896.799065] google_charger: usbchg=USB typec=null usbv=4725 usbc=872 usbMv=5000 usbMc=900
[14923.236443] google_charger: MSC_CHG lowerbd=0, upperbd=67, capacity=67, lowerdb_reached=1->0, charging off
[14923.236543] google_charger: MSC_CHG disable_charging 0 -> 1
[14923.247057] google_charger: usbchg=USB typec=null usbv=4725 usbc=880 usbMv=5000 usbMc=900
[14923.286307] google_charger: MSC_CHG fv_uv=4200000->4200000 cc_max=4910000->0 rc=0
[14926.809992] google_charger: MSC_CHG lowerbd=0, upperbd=67, capacity=67, charging off
[14926.811376] google_charger: usbchg=USB typec=null usbv=4975 usbc=0 usbMv=5000 usbMc=900
[14953.952837] google_charger: MSC_CHG lowerbd=0, upperbd=67, capacity=67, charging off
[14953.954431] google_charger: usbchg=USB typec=null usbv=4725 usbc=0 usbMv=5000 usbMc=900
[15046.115042] google_charger: MSC_CHG lowerbd=0, upperbd=67, capacity=67, charging off
[15046.117563] google_charger: usbchg=USB typec=null usbv=4975 usbc=0 usbMv=5000 usbMc=900
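When following the driver’s decisions over a longer period, it may help to pick the MSC_CHG status lines apart mechanically. The following C sketch parses one such line with sscanf(), assuming the format shown above (which may well change between driver versions); struct msc_chg and parse_msc_chg() are hypothetical names.

```c
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

struct msc_chg {
    int lowerbd, upperbd, capacity;
    bool charging;
};

/* Parses a "google_charger: MSC_CHG lowerbd=..., upperbd=...,
   capacity=..." kernel log line. Returns false for other lines. */
bool parse_msc_chg(const char *line, struct msc_chg *out)
{
    const char *p = strstr(line, "MSC_CHG lowerbd=");

    if (!p)
        return false;
    if (sscanf(p, "MSC_CHG lowerbd=%d, upperbd=%d, capacity=%d",
               &out->lowerbd, &out->upperbd, &out->capacity) != 3)
        return false;
    /* The line ends with "charging on" or "charging off" */
    out->charging = strstr(p, "charging on") != NULL;
    return true;
}
```

Lines such as the “disable_charging” and “usbchg” ones are rejected, so running the dmesg output through this function line by line keeps only the lowerbd/upperbd/capacity snapshots.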

As with all settings in /sys/, this is temporary until next boot. A short script that runs on boot is necessary to make this permanent. I haven’t delved into this yet, and I’m not sure I really want it to be permanent. It’s actually a good idea to be able to restore normal behavior just by rebooting. Say, if I realize that I’m about to have a long day out with the phone, just reboot and charge to 100% in the car.

The Tasker app and a Magisk module have been mentioned as possible solutions. Haven’t looked into that. To me, it would be more natural to add a script that runs on system start, but I am yet to figure out how to do that.

And by the way, there’s also a google,battery directory, but with nothing interesting there.

And then I read the source

Wanting to be sure I’m not messing up something, I read through google_charger.c as of tag android-12.0.0_r0.35 (commit ID 44d65f8296b034061d76efb5409c3bf4d7dc1272, to be accurate). I’m not 100% sure this is what is running on my phone, however the code that I related to has no changes for at least a year in either direction (according to the git repo).

I should mention that google_charger.c is a bit of a mess. The signs of hacking without cleaning up afterwards are there.

Anyhow, I noted that setting charge_start_level and/or charge_stop_level disables another mechanism, which is referred to as Battery Defender. This mechanism stops charging in response to a hot battery.

The thing is that if a battery becomes defective, the only thing that prevents it from burning is that charging stops in response to a high temperature reading. So can turning off Battery Defender have really unpleasant consequences?

After looking closely at chg_run_defender(), my conclusion is that Battery Defender only halts charging when the battery’s voltage exceeds bd_trigger_voltage and the average temperature is above bd_trigger_temp. According to the values in my sysfs, these are 4.27V (i.e. 100% full and beyond) and 35.0°C, respectively.

All other bd_* parameters set the condition for resumption of charging.

In other words, this mechanism wouldn’t kick in anyhow, because the battery’s voltage stays below this threshold. So limiting the battery’s charge this way poses no additional risk.
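Put as code, my reading of the trigger condition (which is evaluated in bd_update_stats()) amounts to something like the following C sketch. The units are an assumption based on the sysfs values: microvolts for voltages and tenths of a degree Celsius for temperatures (so 350 means 35.0°C). The names are hypothetical, and the driver’s averaging of the temperature over time isn’t modeled: the average is simply passed in.

```c
#include <stdbool.h>

/* Thresholds as read from /sys/devices/platform/google,charger/
   (assumed units: microvolts and tenths of a degree Celsius) */
struct bd_thresholds {
    long trigger_voltage_uv;  /* bd_trigger_voltage, e.g. 4270000 */
    int trigger_temp_dc;      /* bd_trigger_temp, e.g. 350 */
};

/* Hypothetical sketch: Battery Defender triggers only when BOTH the
   voltage and the (already averaged) temperature exceed the thresholds */
bool bd_would_trigger(const struct bd_thresholds *t,
                      long voltage_uv, int avg_temp_dc)
{
    return voltage_uv > t->trigger_voltage_uv &&
           avg_temp_dc > t->trigger_temp_dc;
}
```

At 80% charge, the Ampere app reports about 4.156V (see the notes at the end of this post), so bd_would_trigger() returns false at that voltage regardless of the temperature.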

I surely hope there’s another mechanism that stops charging if the battery gets really hot. I didn’t find such (but didn’t really look much for one).

The rest of this post consists of really messy jots.

Dissection notes

These are random notes that I took as I read through the code. Written as I went, so not necessarily fully accurate.

- “bd” stands for Battery Defender.

- chg_work() is the battery charging work item. It calls chg_run_defender() for updating chg_drv->disable_pwrsrc and chg_drv->disable_charging, in other words, to implement the Battery Defender.

- chg_is_custom_enabled() returns true when charge_stop_level and/or charge_start_level have non-default values that are legal.

- bd_state->triggered becomes one when the temperature (of the battery, I presume) exceeds a trigger temperature (bd_trigger_temp) AND when the battery’s voltage exceeds the trigger voltage (bd_trigger_voltage). This is done in bd_update_stats().

- In chg_run_defender(), if chg_is_custom_enabled() returns true, bd_reset() is called if bd_state->triggered is true, hence zeroing bd_state->triggered. In fact, this resets the entire trigger mechanism.

- bd_work() is a work item that merely runs in the background after disconnect for the purpose of resetting the trigger (according to the comment). No more than this.

In conclusion, setting the charging levels disables the mechanism that disables charging based upon temperature. chg_run_defender() uses the charging levels instead of the mechanism that is based upon temperature.

More dissection of chg_run_defender():

As bd_reset() has a crucial role, here it is. Note that the parameters are the same as published in /sys/devices/platform/google,charger/.

static void bd_reset(struct bd_data *bd_state)
{
    bool can_resume = bd_state->bd_resume_abs_temp ||
                      bd_state->bd_resume_time ||
                      (bd_state->bd_resume_time == 0 &&
                      bd_state->bd_resume_soc == 0);

    bd_state->time_sum = 0;
    bd_state->temp_sum = 0;
    bd_state->last_update = 0;
    bd_state->last_voltage = 0;
    bd_state->last_temp = 0;
    bd_state->triggered = 0;
    bd_state->enabled = ((bd_state->bd_trigger_voltage &&
                          bd_state->bd_recharge_voltage) ||
                         (bd_state->bd_drainto_soc &&
                          bd_state->bd_recharge_soc)) &&
                        bd_state->bd_trigger_time &&
                        bd_state->bd_trigger_temp &&
                        bd_state->bd_temp_enable &&
                        can_resume;
}

Current content of parameters

As read from /sys/devices/platform/google,charger/:

bd_drainto_soc:80
bd_recharge_soc:79
bd_recharge_voltage:4250000
bd_resume_abs_temp:280
bd_resume_soc:50
bd_resume_temp:290
bd_resume_time:14400
bd_temp_dry_run:1
bd_temp_enable:1
bd_trigger_temp:350
bd_trigger_time:21600
bd_trigger_voltage:4270000
charge_start_level:70
charge_stop_level:80
driver_override:(null)
modalias:of:Ngoogle,chargerT(null)Cgoogle,charger
uevent:DRIVER=google,charger
OF_NAME=google,charger
OF_FULLNAME=/google,charger
OF_COMPATIBLE_0=google,charger
OF_COMPATIBLE_N=1
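Plugging these values into the enabled expression of bd_reset() shows that Battery Defender is enabled with these settings (as long as the custom charge levels don’t override it). Here is that expression as a standalone C function. struct bd_params is a hypothetical stand-in for the driver’s struct bd_data: it holds only the fields that bd_reset() looks at, named after the sysfs entries.

```c
#include <stdbool.h>

/* Hypothetical subset of struct bd_data, named after the sysfs entries */
struct bd_params {
    long bd_trigger_voltage, bd_recharge_voltage;
    int bd_drainto_soc, bd_recharge_soc;
    int bd_trigger_time, bd_trigger_temp, bd_temp_enable;
    int bd_resume_abs_temp, bd_resume_time, bd_resume_soc;
};

/* Same logic as the can_resume and enabled expressions in bd_reset() */
bool bd_enabled(const struct bd_params *p)
{
    bool can_resume = p->bd_resume_abs_temp ||
                      p->bd_resume_time ||
                      (p->bd_resume_time == 0 && p->bd_resume_soc == 0);

    return ((p->bd_trigger_voltage && p->bd_recharge_voltage) ||
            (p->bd_drainto_soc && p->bd_recharge_soc)) &&
           p->bd_trigger_time &&
           p->bd_trigger_temp &&
           p->bd_temp_enable &&
           can_resume;
}
```

With the values listed above, can_resume is true (bd_resume_abs_temp is nonzero) and every other term is nonzero, so bd_enabled() returns true. Setting bd_temp_enable to 0 would be enough to disable it.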

Other notes

- The Battery Defender was apparently added in commit ID 2859390b7e66fc2e1dc097f475a16423182585e2 (the commit is however titled “google_battery: sync battery defend feature”).

- At 100%, the voltage is 4.395V while connected to the charger but not charging (?), and 4.32V after disconnecting the charger. The voltage while charging is higher.

- For 80% it’s 4.156V. For 79% it’s 4.145V. For 70% it’s 4.048V.

- All voltage measurements are according to the Ampere app, but they can be read from /sys/class/power_supply/battery/voltage_now (given in μV).
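Reading the value with cat in an adb shell is easiest, but for completeness, here is a minimal C sketch that reads such a file and converts microvolts to volts. It assumes that voltage_now contains a single decimal integer, as mentioned above; microvolts_text_to_volts() and read_voltage() are hypothetical helpers, and the program must of course be built for and run on the phone.

```c
#include <stdio.h>
#include <stdlib.h>

/* Converts the text of a sysfs voltage file (microvolts) to volts.
   Returns a negative value if the text doesn't start with a number. */
double microvolts_text_to_volts(const char *text)
{
    char *end;
    long uv = strtol(text, &end, 10);

    if (end == text)
        return -1.0;
    return uv / 1e6;
}

/* Reads a file such as /sys/class/power_supply/battery/voltage_now and
   returns the voltage in volts, or a negative value on failure */
double read_voltage(const char *path)
{
    char buf[32];
    FILE *f = fopen(path, "r");

    if (!f)
        return -1.0;
    if (!fgets(buf, sizeof(buf), f)) {
        fclose(f);
        return -1.0;
    }
    fclose(f);
    return microvolts_text_to_volts(buf);
}
```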

Credit card abuse, episode #2

ssl.com presents the lowest price for an EV code signing certificate, however it’s a bit like going into a flea market with a lot of pickpockets around: Pay attention to your wallet, or things happen.

This is a follow-up post to one that I wrote three years ago, after ssl.com suddenly charged my credit card in relation to the eSigner service. It turns out that this is a working pattern that persists even three years later. Actually, it was $200 last time, and $747 now, so one could say they’re improving.

The autorenew fraud

Three years ago, I got an EV code signing certificate from ssl.com that expired more or less at the time of writing this. I got a reminder email from ssl.com, urging me to renew this certificate, and indeed, I ordered a one-year certificate so I could continue to sign drivers. I paid for the one-year certificate, went through a brief process of authenticating my identity, and got an approval soon enough.

I’ll say a few words below about the technicalities around getting the certificate, but all in all the process was finished after a few days, and I thought that was the end of it.

And then, I randomly checked my credit card bill and noticed that it had been charged with 747 USD by ssl.com. So I contacted them to figure out what happened. The answer I got was:

{order number} is an auto renewal for the expiring order. But, I do see that you already manually renewed and renewal cert issued.

I can cancel {order number} then credit the amount to your SSL.com account. Would that be good with you?

Indeed, the automatic renewal order was issued after I had completed the process with the new certificate, so there was surely no excuse for an automatic renewal. And the offer to add the funds to my ssl.com account for future use was of course a joke (even though they were serious about it).

It’s worth mentioning that the reminder email said nothing about what would happen if I didn’t renew the certificate. And surely, there was no hint about any automatic mechanism for a renewal.

On top of that, I got no notification whatsoever about the automatic renewal or that my credit card had been charged. Needless to say, I didn’t approve this renewal. In fact, I made the order for the one-year certificate on a different and temporary credit card, because I learned the lesson from three years ago. Or so I thought.

So I asked them to cancel the order and refund my credit card. Basically, the answer I got was:

I have forwarded to the billing team about the refund request. They will email you once they have an update.

Sounds like a fairly happy end, doesn’t it? Only they didn’t cancel the order, let alone refund the credit card. Over two weeks I sent three reminders, and the answer was repeatedly that my requests and reminders had been forwarded to “the team”, and that’s where it ended. Who knows, maybe I’ll just forget about it.

I sent the fourth reminder to billing@ssl.com (and not support@ssl.com), and this time I got some kind of response. I was once again offered to fill up my wallet on ssl.com with the money instead of a refund, which I declined, of course. In fact, I switched to slightly harsher language, saying that ssl.com’s conduct makes them no better than a street pickpocket.

And interestingly enough, the response was that my refund request “had been approved”. A day later, I got a confirmation that a refund had taken place. The relevant order remained in ssl.com’s Dashboard as “pending validation”, but was at the same time also marked as refunded. And indeed, the refund was visible in my credit card bill the day after that.

So the method is to fetch money silently from the credit card, hoping that I won’t pay attention or won’t bother to do anything about it. Is there another definition for stealing? And I guess this method works fine with companies that have a lot of transactions of this sort with their credit cards. A few hundred dollars can easily slip away.

It appears that the counter-tactic is to use angry and ugly language right away. As long as the request for a refund is polite and sounds like a calm person has written it, there’s still hope that the person writing it will give up or maybe forget about it.

And by the way, this post was published after receiving the refund, so unlike last time, it didn’t play a role in getting the issue resolved.

Avoiding unexpected withdrawals

The best way to avoid situations like this is of course to use a credit card with a short life span. This is the kind I used this time, but not three years ago.

Specifically with ssl.com, there are two things to do when ordering a certificate from them:

- After purchasing, be sure that autorenewal is off. Click the “settings” link on the Dashboard, and uncheck “Automated Certificate Renewal”.

- Also, delete the credit card details: Click on “deposit funds” on the Dashboard, and delete the credit card details.

Pushing eSigner, again

And now to a more subtle issue.

The approval for my one-year certificate came quickly enough, and it came with two suggestions for continuing: To start off immediately with eSigner, or to order a Yubikey from them with the certificate already loaded on it. The latter option costs $279 (even though it was included for free three years ago). Makes the eSigner option sound attractive, doesn’t it?

They didn’t mention using the Yubikey dongle that I already had and that I used for signing drivers. It was only when I asked about this option that they responded that there’s a procedure for loading a new certificate into the existing dongle.

And so I did: I filled in the online form on their website, as required for obtaining a Yubikey-based certificate. And waited. And waited. Nothing happened. So I sent a reminder, got apologies for the delay, and finally got the certificate I had ordered.

Was this an innocent mishap, or a deliberate attempt to make me try out eSigner instead? As I’ve already had my fingers burnt with eSigner, no chance I would do that, but I can definitely imagine people losing their patience.

The Yubikey dongle costs $25

You can get your Yubikey dongle from ssl.com at $279, or buy it directly from Yubico at $25. This is the device that I got from ssl.com three years ago, and which I use with the renewed certificate after completing the attestation procedure.

The idea behind this procedure is that the secret key that is used for digital signatures is created inside the dongle, and is never revealed to the computer (or in any other way), so it can’t be stolen. The dongle generates a certificate (the “attestation certificate”) ensuring that the public key and secret key pair was indeed created this way, and is therefore safe. This certificate is signed with an attestation key that Yubico installed inside the dongle.

So the procedure consists of creating the key pair, obtaining the attestation certificate from the dongle and sending it to ssl.com by filling in a web form. They generate a certificate for signing code (or whatever is needed) in response.

So if you’re about to obtain your first certificate from ssl.com, I suggest checking the option of purchasing the Yubikey separately from Yubico. They have no reason to refuse from a security point of view, because the attestation certificate ensures that the cryptographic key is safe inside the dongle.

Summary

Exactly like three years ago, it seems like ssl.com uses fraudulent methods along with dirty tactics to cover up for their relatively low prices. So if you want to work with this company, be sure to keep a close eye on your credit card bill, and be ready for lengthy delays when requesting something that apparently goes against their interests. Plus misleading messages.

Also, be ready for a long exchange of emails with their support and billing department. It’s probably best to escalate to rude and aggressive language pretty soon, as their support team is probably instructed not to be cooperative as long as the person complaining appears to be calm.

And this comes from a company whose core business is generating trust.

This is a simple utility C macro for selecting a bit range from an integer:

#define bits(x, y, z) (((x) >> (z)) & ((((long int) 2) << ((y) - (z))) - 1))

This picks the part that is equivalent to the expression x[y:z] in Verilog.

The cast to long int may need adjustment to the type of the variable that is manipulated.

And yes, it’s possible this could have been done with fewer parentheses. But with macros, I’m always compelled to avoid any ambiguity that I may not think about right now.
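For example, with x = 0xABCD, bits(x, 11, 4) yields 0xBC, just like x[11:4] would in Verilog. One caveat: The mask is computed by shifting a signed long, so a field as wide as long itself overflows, which is undefined behavior in C. A variant that works on unsigned long avoids that; bits_u is a hypothetical name, offered as a sketch.

```c
/* The macro from above: x[y:z] in Verilog terms */
#define bits(x, y, z) (((x) >> (z)) & ((((long int) 2) << ((y) - (z))) - 1))

/* Hypothetical unsigned variant. Shifting an unsigned long is well defined
   even when the field is as wide as the type itself: (unsigned long) 2
   shifted by width-1 wraps to 0, and 0 - 1 is then an all-ones mask. */
#define bits_u(x, y, z) \
    ((((unsigned long) (x)) >> (z)) & ((((unsigned long) 2) << ((y) - (z))) - 1))
```

Both macros still evaluate their arguments more than once, so arguments with side effects (such as i++) should be avoided.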

In case this helps someone out there:

I’ve had an LG OLED65B9 TV screen for four years. Lately, I began having trouble turning it on. Instead of powering on (from standby mode), the red LED under the screen would blink three times, and then nothing.

On the other hand, if I disconnected the screen from the wall power (220V), and waited for a few minutes, and then attempted to turn on the screen as soon as possible after connecting back to power, the screen would go on and work without any problems and for as long as needed.

When I tried to initiate Pixel Refreshing manually, the screen went to standby as expected, with the red LED on to indicate that. Two hours later, I found the LED off and the screen wouldn’t turn on. After I went through the wall power routine to turn it on, it did go on, and complained that it had failed to complete Pixel Refreshing before it was turned on.

I tried playing with several options regarding power consumption, and I upgraded webOS to the latest version as of April 2024 (05.40.20). Nothing helped.

I called service, and they replaced the power supply (which costs ~200 USD) and that fixed the problem. That’s the board to the upper left on the image below. Its part number is EAY65170412, in case you wonder.

And by the way, the board to the upper right is the motherboard, and the thing in the middle is the screen panel controller.


May 19 update: A bit more than a month later, the screen works with no issues. So the problem is fixed for real. The LED blinks three or four times when I turn it on with the button on the screen itself. So the blinking LED is not an indication of a problem.

The problem I wanted to solve

On an embedded ARM system running Lubuntu 16.04 LTS (systemd 229), I wanted to add a mount point to the /etc/fstab file, which originally looked like this:

# UNCONFIGURED FSTAB FOR BASE SYSTEM

Empty, except for this message. Is that a bad omen or what? This is the time to mention that the system was set up with debootstrap.

So I added this row to /etc/fstab:

/dev/mmcblk0p1 /mnt/tfcard vfat defaults 0 0

The result: Boot failed, and systemd asked me for the root password for the sake of getting a rescue shell (the overall systemd status was “maintenance”, cutely enough).

Journalctl was kind enough to offer some not-so-helpful hints:

systemd[1]: dev-mmcblk0p1.device: Job dev-mmcblk0p1.device/start timed out.
systemd[1]: Timed out waiting for device dev-mmcblk0p1.device.
systemd[1]: Dependency failed for /mnt/tfcard.
systemd[1]: Dependency failed for Local File Systems.
systemd[1]: local-fs.target: Job local-fs.target/start failed with result 'dependency'.
systemd[1]: local-fs.target: Triggering OnFailure= dependencies.
systemd[1]: mnt-tfcard.mount: Job mnt-tfcard.mount/start failed with result 'dependency'.
systemd[1]: dev-mmcblk0p1.device: Job dev-mmcblk0p1.device/start failed with result 'timeout'.

Changing the “defaults” option to “nofail” got the system up and running, but without the required mount. That said, running “mount -a” did successfully mount the requested partition.

Spoiler: I didn’t really find a way to solve this, but I offer a fairly digestible workaround at the end of this post.

This is nevertheless an opportunity to discuss systemd dependencies and how systemd relates to /etc/fstab.

The main takeaway: Use “nofail”

This is only slightly related to the main topic, but nevertheless the most important conclusion: Open your /etc/fstab, and add “nofail” as an option to all mount points, except those that really are crucial for booting the system. That includes “/home” if it’s on a separate file system, as well as those huge file systems for storing a lot of large files. The rationale is simple: If any of these file systems are corrupt during boot, you really don’t want to be stuck on that basic textual screen (possibly with very small fonts), not having an idea what happened, and fsck asking you if you want to fix this and that. It’s much easier to figure out the problem when the failing mount points simply don’t mount, and take it from there. Not to mention that it’s much less stressful when the system is up and running. Even if not fully so.

Citing “man systemd.mount”: “With nofail, this mount will be only wanted, not required, by local-fs.target or remote-fs.target. This means that the boot will continue even if this mount point is not mounted successfully”.

To do this properly, make a tarball of /run/systemd/generator. Keep an eye on what’s in local-fs.target.requires/ vs. local-fs.target.wants/ in this directory. Only the absolutely necessary mounts should be in local-fs.target.requires/. In my system there’s only one symlink for “-.mount”, which is the root filesystem. That’s it.

Regardless, it’s a good idea that the root filesystem has the errors=remount-ro option, so if it’s mountable albeit with errors, the system still goes up.

This brief discussion will be clearer after reading through this post. Also see “nofail and dependencies” below for an elaboration on how systemd treats the “nofail” option.
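To illustrate the rule quoted above, here’s a C sketch that classifies an fstab options field the way I understand systemd-fstab-generator does it for a local file system: with “noauto” the mount isn’t pulled in by local-fs.target at all, with “nofail” it ends up in local-fs.target.wants/, and otherwise in local-fs.target.requires/. It’s a simplification (remote file systems, x-systemd.* options and other special cases are ignored), and has_option() and local_fs_dependency() are made-up names.

```c
#include <stdbool.h>
#include <string.h>

enum fstab_dep { DEP_NONE, DEP_WANTS, DEP_REQUIRES };

/* Returns true if the comma-separated option list contains opt as a
   complete option (so "nofail" doesn't match "nofailure") */
bool has_option(const char *options, const char *opt)
{
    size_t len = strlen(opt);
    const char *p = options;

    while (*p) {
        const char *comma = strchr(p, ',');
        size_t toklen = comma ? (size_t) (comma - p) : strlen(p);

        if (toklen == len && strncmp(p, opt, len) == 0)
            return true;
        if (!comma)
            break;
        p = comma + 1;
    }
    return false;
}

/* Simplified: how a local mount relates to local-fs.target */
enum fstab_dep local_fs_dependency(const char *options)
{
    if (has_option(options, "noauto"))
        return DEP_NONE;      /* not pulled in during boot */
    if (has_option(options, "nofail"))
        return DEP_WANTS;     /* local-fs.target.wants/ */
    return DEP_REQUIRES;      /* local-fs.target.requires/ */
}
```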

/etc/fstab and systemd

At an early stage of the boot process, systemd-fstab-generator reads through /etc/fstab, and generates *.mount systemd units in /run/systemd/generator/. Inside this directory, it also generates and populates a local-fs.target.requires/ directory as well as local-fs.target.wants/ in order to reflect the necessity of these units for the boot process (more about dependencies below).

So for example, in response to this row in /etc/fstab,

/dev/mmcblk0p1 /mnt/tfcard vfat nofail 0 0

the automatically generated file can be found as /run/systemd/generator/mnt-tfcard.mount as follows:

# Automatically generated by systemd-fstab-generator

[Unit]
SourcePath=/etc/fstab
Documentation=man:fstab(5) man:systemd-fstab-generator(8)

[Mount]
What=/dev/mmcblk0p1
Where=/mnt/tfcard
Type=vfat
Options=nofail

Note that the file name of a mount unit must match the mount point (as it does in this case). This is only relevant when writing mount units manually, of course.
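The mapping from mount point to unit name is systemd’s path escaping (the same as “systemd-escape --path”): leading and trailing slashes are dropped, the remaining slashes become dashes, and characters that would be ambiguous, such as “-” itself, are written as \xNN. Below is a simplified C sketch of this rule. It assumes an already normalized absolute path (no “//”, “.” or “..” components), and escape_unit_path() is a hypothetical name, not systemd’s own function.

```c
#include <ctype.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical, simplified version of systemd's path escaping for unit
   names. Assumes a normalized absolute path. Writes the escaped name to
   out (outsize bytes); returns 0 on success, -1 if it doesn't fit. */
int escape_unit_path(const char *path, char *out, size_t outsize)
{
    size_t len, n = 0;

    while (*path == '/')                      /* drop leading slashes */
        path++;
    len = strlen(path);
    while (len > 0 && path[len - 1] == '/')   /* and trailing ones */
        len--;

    if (len == 0) {                           /* the root directory */
        if (outsize < 2)
            return -1;
        strcpy(out, "-");
        return 0;
    }

    for (size_t i = 0; i < len; i++) {
        unsigned char c = (unsigned char) path[i];
        char tmp[5];
        size_t tlen;

        if (c == '/')
            strcpy(tmp, "-");
        else if (isalnum(c) || c == '_' || c == ':' || (c == '.' && i > 0))
            snprintf(tmp, sizeof(tmp), "%c", c);
        else                                  /* e.g. '-' becomes \x2d */
            snprintf(tmp, sizeof(tmp), "\\x%02x", c);

        tlen = strlen(tmp);
        if (n + tlen + 1 > outsize)           /* +1 for the final '\0' */
            return -1;
        memcpy(out + n, tmp, tlen);
        n += tlen;
    }
    out[n] = '\0';
    return 0;
}
```

So /mnt/tfcard becomes mnt-tfcard.mount, and /dev/mmcblk0p1 becomes dev-mmcblk0p1.device, the device unit that timed out in the journal quoted above.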

Which brings me to my first attempt to solve the problem: Namely, to copy the automatically generated file into /etc/systemd/system/, and enable it as if I wrote it myself. And then remove the row in /etc/fstab.

More precisely, I created this file as /etc/systemd/system/mnt-tfcard.mount:

[Unit]
Description=Mount /dev/mmcblk0p1 as /mnt/tfcard

[Mount]
What=/dev/mmcblk0p1
Where=/mnt/tfcard
Type=vfat
Options=nofail

[Install]
WantedBy=multi-user.target

Note that the WantedBy is necessary for enabling the unit. multi-user.target is a bit inaccurate for this purpose, but it failed for a different reason anyhow, so who cares. It should have been “WantedBy=local-fs.target”, I believe.

Anyhow, I then went:

# systemctl daemon-reload
# systemctl enable mnt-tfcard.mount
Created symlink from /etc/systemd/system/multi-user.target.wants/mnt-tfcard.mount to /etc/systemd/system/mnt-tfcard.mount.

And then ran

# systemctl start mnt-tfcard.mount

but it just hung for a few seconds and then failed with an error message. There was no problem booting (because of “nofail”), but the mount wasn’t performed.

To investigate further, I attached strace to the systemd process (PID 1) while running the “systemctl start” command, and there was no fork. In other words, I already had good reason at this point to suspect that the problem wasn’t a failed mount attempt, but that systemd didn’t even get as far as trying. Which isn’t so surprising, given that the original complaint was a dependency problem.

Also, the command

# systemctl start dev-mmcblk0p1.device

didn’t finish. Checking with “systemctl”, it just said:

UNIT                 LOAD   ACTIVE   SUB  JOB   DESCRIPTION
dev-mmcblk0p1.device loaded inactive dead start dev-mmcblk0p1.device
[ ... ]

So what have we just learned? That it’s possible to create .mount units instead of populating /etc/fstab, and that the result is exactly the same. Why that would be useful, I don’t know.

See “man systemd-fstab-generator” about how the content of fstab is translated automatically to systemd units. Also see “man systemd-fsck@.service” regarding the service that runs fsck, and refer to “man systemd.mount” regarding mount units.

A quick recap on systemd dependencies

It’s important to make a distinction between dependencies that express requirements and dependencies that express the order of launching units (“temporal dependencies”).

I’ll start with the first sort: Those that express that if one unit is activated, other units need to be activated too (“pulled in”, as it’s often referred to). Note that requirement-type dependencies don’t say anything about one unit waiting for another to complete its activation or anything of that sort. The whole point is to start a lot of units in parallel.

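So first, we have Requires=. When it appears in a unit file, it means that the listed unit must be started if the current unit is started, and that if that listed unit fails, the current unit will fail as well. Strictly speaking, “man systemd.unit” says that the current unit is held back only if it also has an After= ordering on the failing unit (more on After= below).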

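In the opposite direction, there’s RequiredBy=. It’s exactly like putting a Requires= directive in the unit that is listed. Note that RequiredBy= (like WantedBy=) belongs in the [Install] section, so it takes effect only when the unit is enabled.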

Then we have another famous couple, Wants= and its opposite, WantedBy=. These are the merciful counterparts of Requires= and RequiredBy=. The difference: If the listed unit fails, the other unit may activate successfully nevertheless. The failure shows up as “systemctl status” declaring the system “degraded”.

So the fact that basically every service unit ends with “WantedBy=multi-user.target” means that the unit file says “if multi-user.target is a requested target (i.e. practically always), you need to activate me”, but at the same time it says “if I fail, don’t fail the entire boot process”. In other words, had RequiredBy= been used all over the place instead, it would have worked just the same, as long as all units started successfully. But if one of them didn’t, one would get a textual rescue shell instead of an almost fully functional (“degraded”) system.

There are a whole lot of other directives for defining dependencies. In particular, there are also Requisite=, ConsistsOf=, BindsTo=, PartOf=, Conflicts=, plus tons of possible directives for fine-tuning the behavior of the unit, including conditions for starting and whatnot. See “man systemd.unit”. There’s also a nice summary table on that man page.

It’s important to note that even if unit X requires unit Y by virtue of the directives mentioned above, systemd may (and often will) activate them at the same time. That’s true for both “require” and “want” kinds of directives. In order to control the order of activation, we have After= and Before=. After= and Before= also ensure that units are stopped in the reverse order.

After= and Before= only define when the units are started, and are often used in conjunction with the directives that define requirements. But by themselves, After= and Before= don’t define a relation of requirement between units.

It’s therefore common to use Requires= and After= on the same listed unit, meaning that the listed unit must complete successfully before the unit with these directives is activated. Same with RequiredBy= and Before=, meaning that this unit must complete before the listed unit can start.

Once again, there are more directives, and more to say about those I just mentioned. See “man systemd.unit” for detailed info on this. Really, do. It’s a good man page. There are a lot of “but if”s to be aware of.

“nofail” and dependencies

One somewhat scary side-effect of adding “nofail” is that the line saying “Before=local-fs.target” doesn’t appear in the *.mount unit files that are automatically generated. Does it mean that the services might be started before these mounts have taken place?

I can’t say I’m 100% sure about this, but it would be really poor design if it were that way, as it would have added a very unexpected side-effect to “nofail”. Looking at the system log of a boot with almost all mounts marked “nofail”, it’s evident that they were mounted after “Reached target Local File Systems” was announced, but before “Reached target System Initialization”. Looking at a system log from before I added these “nofail” options, all mounts were made before “Reached target Local File Systems”.

So what happens here? It’s worth mentioning that in local-fs.target, it only says After=local-fs-pre.target in relation to temporal dependencies. So that can explain why “Reached target Local File Systems” is announced before all mounts have been completed.

But then, local-fs.target is listed in sysinit.target’s After= as well as Wants= directives. I haven’t found any clear-cut definition of whether “After=” waits until all “wanted” units have been fully started (or officially failed). The term used in “man systemd.unit” in relation to waiting is “finished starting up”, but what does that mean exactly? This man page also says “Most importantly, for service units start-up is considered completed for the purpose of Before=/After= when all its configured start-up commands have been invoked and they either failed or reported start-up success”. But what about “want” relations?

So I guess systemd interprets the “After” directive on local-fs.target in sysinit.target as “wait for local-fs.target’s temporal dependencies as well, even if local-fs.target doesn’t wait for them”.

Querying dependencies

So how can we know which unit depends on which? In order to get the system’s tree of dependencies, go

$ systemctl list-dependencies

This recursively shows the dependency tree that is created by Requires, RequiredBy, Wants, WantedBy and similar relations. Usually, only target units are followed recursively. In order to display the entire tree, add the --all flag.

The units that are listed are those that are required (or “wanted”) in order to reach the target that is being queried (“default.target” if no target specified).

As can be seen from the output, the filesystem mounts are made in order to reach the local-fs.target target. To get the tree of only this target:

$ systemctl list-dependencies local-fs.target

The output of this command lists the units that are activated for the sake of reaching local-fs.target. “-.mount” is the root mount, by the way.

It’s also possible to obtain the reverse dependencies with the --reverse flag. In other words, in order to see which targets rely on local-fs.target, go

$ systemctl list-dependencies --reverse local-fs.target

list-dependencies doesn’t make a distinction between “required” and “wanted”, and neither does it show the nuances of the various other possibilities for creating dependencies. For this, use “systemctl show”, e.g.

$ systemctl show local-fs.target

This lists all of the unit’s properties, explicit and implicit. A lot of information to go through, but that’s the full picture.

If the --after flag is added, the tree of targets with an After= directive (and other implicit temporal dependencies, e.g. mirrored Before= directives) is printed out. Recall that this only tells us something about the order of execution, and nothing about which unit requires which. For example:

$ systemctl list-dependencies --after local-fs.target

Likewise, there’s --before, which does the opposite.

Note that the names of the flags are confusing: --after tells us about the targets that are started before local-fs.target, and --before tells us about the targets started after local-fs.target. The rationale: The spirit of list-dependencies (without --reverse) is that it tells us what is needed to reach the target. Hence following the After= directives tells us what had to come before. And vice versa.
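The flag naming is easier to keep straight with a toy model. Below is a minimal Python sketch that merges After= and Before= into a single ordering graph and collects what each flag would show. The dictionaries are hypothetical stand-ins for parsed unit files. The real systemctl also adds implicit ordering from default dependencies, which this model ignores.

```python
from collections import defaultdict


def ordering_edges(after, before):
    """Merge After= and Before= into two maps: unit -> units ordered before/after it.

    `after` and `before` are hypothetical dicts (unit -> listed units) standing
    in for directives parsed from unit files; implicit dependencies are ignored.
    """
    earlier = defaultdict(set)  # what `list-dependencies --after` follows
    later = defaultdict(set)    # what `list-dependencies --before` follows
    for unit, listed in after.items():
        for other in listed:  # "unit: After=other"
            earlier[unit].add(other)
            later[other].add(unit)
    for unit, listed in before.items():
        for other in listed:  # "unit: Before=other" mirrors "other: After=unit"
            earlier[other].add(unit)
            later[unit].add(other)
    return earlier, later


def reachable(unit, edges):
    """All units reachable from `unit` through `edges` (iterative, cycle-safe)."""
    found, stack = set(), [unit]
    while stack:
        for nxt in edges.get(stack.pop(), ()):
            if nxt not in found:
                found.add(nxt)
                stack.append(nxt)
    found.discard(unit)
    return found
```

Feed it the relations discussed above: local-fs.target After=local-fs-pre.target, sysinit.target After=local-fs.target, and a generated mount unit with Before=local-fs.target. Following the “earlier” map from local-fs.target then yields the pre-target and the mount, which is the --after view. Following the “later” map yields sysinit.target, which is the --before view.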

Red herring #1

After this long general discussion about dependencies, let’s go back to the original problem: The mnt-tfcard.mount unit failed with status “dependency” and dev-mmcblk0p1.device had the status inactive / dead.

Could this be a dependency thing?

# systemctl list-dependencies dev-mmcblk0p1.device

dev-mmcblk0p1.device

● └─mnt-tfcard.mount

# systemctl list-dependencies mnt-tfcard.mount

mnt-tfcard.mount

● ├─dev-mmcblk0p1.device

● └─system.slice

Say what? dev-mmcblk0p1.device depends on mnt-tfcard.mount? And even more intriguing, mnt-tfcard.mount depends on dev-mmcblk0p1.device, so it’s a circular dependency! This must be the problem! (not)

This was the result regardless of whether I used /etc/fstab or my own mount unit file instead. I also tried adding DefaultDependencies=no on mnt-tfcard.mount’s unit file, but that made no difference.

Neither did this dependency go away when the last field in /etc/fstab was changed from 0 to 2, indicating that fsck should be run on the block device prior to mounting. What did change was mnt-tfcard.mount’s dependencies, but that’s not the problem:

# systemctl list-dependencies mnt-tfcard.mount

mnt-tfcard.mount

● ├─dev-mmcblk0p1.device

● ├─system.slice

● └─systemd-fsck@dev-mmcblk0p1.service

# systemctl list-dependencies systemd-fsck@dev-mmcblk0p1.service

systemd-fsck@dev-mmcblk0p1.service

● ├─dev-mmcblk0p1.device

● ├─system-systemd\x2dfsck.slice

● └─systemd-fsckd.socket

But the thing is that the cyclic dependency isn’t an error. These are the same queries on the /boot mount (/dev/sda1, ext4) of another computer:

$ systemctl list-dependencies boot.mount

boot.mount

● ├─-.mount

● ├─dev-disk-by\x2duuid-063f1689\x2d3729\x2d425e\x2d9319\x2dc815ccd8ecaf.device

● ├─system.slice

● └─systemd-fsck@dev-disk-by\x2duuid-063f1689\x2d3729\x2d425e\x2d9319\x2dc815ccd8ecaf.service

And the back dependency:

$ systemctl list-dependencies 'dev-disk-by\x2duuid-063f1689\x2d3729\x2d425e\x2d9319\x2dc815ccd8ecaf.device'

dev-disk-by\x2duuid-063f1689\x2d3729\x2d425e\x2d9319\x2dc815ccd8ecaf.device

● └─boot.mount

The mount unit depends on the device unit and vice versa. Same thing, but this time it works.

Why does the device unit depend on the mount unit, one may ask. Not clear.

Red herring #2

At some point, I thought that the reason for the problem was that the filesystem’s “dirty bit” was set:

# fsck /dev/mmcblk0p1

fsck from util-linux 2.27.1

fsck.fat 3.0.28 (2015-05-16)

0x25: Dirty bit is set. Fs was not properly unmounted and some data may be corrupt.

1) Remove dirty bit

2) No action

By the way, using “fsck.vfat” instead of just “fsck” did exactly the same. The former is what the systemd-fsck service uses, according to the man page.

But this wasn’t the problem. Even after removing the dirty bit, and with fsck reporting success, dev-mmcblk0p1.device would not start.

The really stinking fish

What is really fishy on the system is this output of “systemctl --all”:

dev-mmcblk0p2.device loaded activating tentative /dev/mmcblk0p2

/dev/mmcblk0p2 is the partition that is mounted as root! It should be “loaded active plugged”! All devices have that status, except for this one.

Lennart Poettering (i.e. the horse’s mouth) mentions that “If the device stays around in “tentative” state, then this indicates that a device appears in /proc/self/mountinfo with some name, and systemd can’t find a matching device in /sys for it, probably because for some reason it has a different name”.

And indeed, this is the relevant row in /proc/self/mountinfo:

16 0 179:2 / / rw,relatime shared:1 - ext4 /dev/root rw,data=ordered

So the root partition appears as /dev/root. This device file doesn’t even exist. The real one is /dev/mmcblk0p2, and it’s mentioned in the kernel’s command line. On this platform, Linux boots without any initrd image. The kernel mounts root by itself, and takes it from there.

As Lennart points out in a different place, /dev/root is a special creature that is made up in relation to mounting a root filesystem by the kernel.
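To see the mismatch Lennart describes, one can compare the mount source listed in /proc/self/mountinfo with the kernel’s own name for the same device number. Sysfs exposes that name through the symlink /sys/dev/block/MAJOR:MINOR. Below is a minimal Python sketch, assuming the field layout documented in proc(5). The function names are made up for illustration, and kernel_block_name only works on Linux with sysfs mounted.

```python
import os


def parse_mountinfo_line(line):
    """Split one /proc/self/mountinfo line into named fields (layout per proc(5)).

    Spaces inside fields are octal-escaped (e.g. \\040), so split() is safe.
    """
    fields = line.split()
    # Optional fields (e.g. "shared:1") run from index 6 up to a lone "-"
    sep = fields.index("-", 6)
    return {
        "mount_id": int(fields[0]),
        "major_minor": fields[2],
        "root": fields[3],
        "mount_point": fields[4],
        "mount_options": fields[5],
        "optional": fields[6:sep],
        "fstype": fields[sep + 1],
        "source": fields[sep + 2],
        "super_options": fields[sep + 3] if len(fields) > sep + 3 else "",
    }


def kernel_block_name(major_minor):
    """Kernel name of a block device, e.g. '179:2' -> 'mmcblk0p2' (Linux sysfs)."""
    return os.path.basename(os.path.realpath("/sys/dev/block/" + major_minor))


def source_mismatch(entry):
    """True if the mount source doesn't resolve to /dev/<kernel name> for its device number."""
    return os.path.realpath(entry["source"]) != "/dev/" + kernel_block_name(entry["major_minor"])
```

For the root line quoted above, the parser gives source “/dev/root” for device 179:2. On the board itself, kernel_block_name("179:2") should give mmcblk0p2, and source_mismatch flags the entry. That is the kind of discrepancy that can leave a device unit “tentative”.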

At this point, I realized that I’m up against a quirk that has probably been solved silently during the six years since the relevant distribution was released. In other words, no point wasting time looking for the root cause. So this calls for…

The workaround

With systemd around, writing a service is a piece of cake. So I wrote a trivial service which runs mount when it’s started and umount when it’s stopped. Not a masterpiece in terms of software engineering, but it gets the job done without polluting too much.

The service file, /etc/systemd/system/mount-card.service, is as follows:

[Unit]

Description=TF card automatic mount

[Service]

ExecStart=/bin/mount /dev/mmcblk0p1 /mnt/tfcard/

ExecStop=/bin/umount /mnt/tfcard/

Type=simple

RemainAfterExit=yes

[Install]

WantedBy=local-fs.target

Activating the service:

# systemctl daemon-reload

# systemctl enable mount-card

Created symlink from /etc/systemd/system/local-fs.target.wants/mount-card.service to /etc/systemd/system/mount-card.service.

And reboot.

The purpose of “RemainAfterExit=yes” is to make systemd consider the service active even after the command exits. Without this row, the command for ExecStop runs immediately after ExecStart, so the device is unmounted immediately after it has been mounted. Setting Type to oneshot doesn’t solve this issue, by the way. The only difference between “oneshot” and “simple” is that oneshot delays the execution of other units until it has exited.

There is no need to add an explicit dependency on the existence of the root filesystem, because all services have an implicit Requires= and After= dependency on sysinit.target, which in turn depends on local-fs.target. This also ensures that the service is stopped before an attempt to remount root as read-only during shutdown.

As for choosing local-fs.target for the WantedBy, it’s somewhat questionable, because no service can run until sysinit.target has been reached, and the latter depends on local-fs.target, as just mentioned. More precisely (citing “man systemd.service”), “service units will have dependencies of type Requires= and After= on sysinit.target, a dependency of type After= on basic.target”. So this ensures that the service is started after the Basic Target has been reached and shuts down before this target is shut down.

That said, setting WantedBy this way is a more accurate expression of the purpose of this service. Either way, the result is that the unit is marked for activation on every boot.

I don’t know if this is the place to write “all’s well that ends well”. I usually try to avoid workarounds. But in this case it was really not worth the effort to insist on a clean solution, and I’m not sure one is actually possible.

Motivation

I have an English / Hebrew keyboard, but occasionally I also want to use the Swedish letters å, ä and ö. The idea is to sacrifice three keys on the keyboard for this purpose.

But which ones? I went for the keypad’s /, * and - keys. But hey, I do use these every now and then.

So why not use these keys with Alt to produce the desired letters? Here’s a simple reason: I don’t know how to do that. What I managed to achieve with xmodmap isn’t sensitive to Alt. Only Shift.

And why not use the keys that are commonly used on Swedish keyboards? Because the relevant keys are used for colon and double quotes, and these are useful in Swedish as well. This is normally solved by using the right-Alt key to select the Swedish letters. But as just mentioned, I didn’t find a way to use the Alt keys as a modifier.

And why don’t I just install the Swedish keyboard layout? I might do that later on, but right now I don’t use Swedish often enough to justify another language candidate. It makes language switching more cumbersome.

So my solution was to write a simple bash script that turns the said keys into å, ä and ö, and another bash script that brings them back to their default use. I’m sure there’s a way to fix it nicely with the Alt key, but I won’t bother.

All here relates to Linux Mint 19 running Cinnamon.

Making changes

First things first: Save the current setting, in case you mess up:

$ xmodmap -pke > default-mapping.txt

Now let’s look at the default situation. Start with the mapping of modifiers:

$ xmodmap

xmodmap: up to 4 keys per modifier, (keycodes in parentheses):

shift Shift_L (0x32), Shift_R (0x3e)

lock Caps_Lock (0x42)

control Control_L (0x25), Control_R (0x69)

mod1 Alt_L (0x40), Alt_R (0x6c), Meta_L (0xcd)

mod2 Num_Lock (0x4d)

mod3

mod4 Super_L (0x85), Super_R (0x86), Super_L (0xce), Hyper_L (0xcf)

mod5 ISO_Level3_Shift (0x5c), Mode_switch (0xcb)

To get the current keyboard mapping, try

$ xmodmap -pk | less

$ xmodmap -pke | less

The output is a list of keycode commands. Let’s look at one row:

keycode 42 = g G hebrew_ayin G U05F1

The number is the keycode of the physical key, 42 in this case.

That is followed by a number of keysyms. The first is with no modifier pressed, the second with Shift, the third when Mode_switch is in effect, and the fourth is Shift + Mode_switch.

In my case, Mode_switch is when the language is changed to Hebrew, which is why “hebrew_ayin” is listed.

The fifth code, U05F1, is a Unicode character U+05F1, a Hebrew ligature character (ױ). Not clear what that’s for.

According to the man page, there may be up to 8 keysyms, but most X servers rarely use more than four. However, looking at the output of “xmodmap -pke”, there are 10 keysyms allocated to the function keys (F1, F2, etc.). Once again, not clear what for.
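To make the positional meaning of these keysyms concrete, here is a minimal Python sketch that parses one “xmodmap -pke” row. It labels the first four positions as described above and decodes Unicode keysym names such as U05F1. The helper names are hypothetical. The U-plus-hex rule reflects my understanding of X keysym naming, not anything xmodmap exposes directly.

```python
import string

# Meaning of the first four keysym positions, as found in the experiment below
POSITIONS = ("plain", "Shift", "Mode_switch", "Mode_switch+Shift")


def parse_keycode_line(line):
    """Parse one `xmodmap -pke` row, e.g. 'keycode 42 = g G hebrew_ayin G U05F1'.

    Returns (keycode, {position: keysym}, [keysyms beyond the fourth]).
    """
    lhs, sep, rhs = line.partition("=")
    words = lhs.split()
    if not sep or len(words) != 2 or words[0] != "keycode":
        raise ValueError("not a keycode row: %r" % line)
    keysyms = rhs.split()
    return int(words[1]), dict(zip(POSITIONS, keysyms)), keysyms[len(POSITIONS):]


def unicode_keysym_char(name):
    """'U05F1' -> 'ױ': names of the form U + 4 to 6 hex digits denote code points."""
    digits = name[1:]
    if name[:1] == "U" and 4 <= len(digits) <= 6 and all(c in string.hexdigits for c in digits):
        code = int(digits, 16)
        if code <= 0x10FFFF:
            return chr(code)
    return None  # an ordinary named keysym such as "aring"
```

With the row for keycode 42, the Mode_switch position comes out as hebrew_ayin, and U05F1 lands in the list of extra keysyms beyond the fourth.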

If the numeric codes aren’t clear, use this command to obtain X events. The keycodes are printed out (among all the mumbo-jumbo, it says e.g. “keycode 82”):

$ xev

This utility opens a small window and dumps all X events that it gets, so this window must have focus for the keyboard events to be directed to it.

What do these keysyms mean?

I tried this on keycode 90, which is the keypad’s zero or Ins key. Its assignment is originally

keycode 90 = KP_Insert KP_0 KP_Insert KP_0

So I changed it with

$ xmodmap -e "keycode 90 = a b c d e f g h i j"

This command takes a couple of seconds to execute. I’m discussing why it’s slow below.

After this, pressing this key alone printed “a”. Now I could test a whole lot of combinations to find out what each of these positions means:

- No modifier.

- Shift

- Hebrew mode on

- Hebrew mode on + Shift

No surprise here. As for the rest of the keysyms, I didn’t manage to get any of the letters e-j to appear, no matter what combination I tried. So I guess they’re not relevant in my setting. The man page was correct: Only four of them are actually used.

By the way, when applying Caps Lock, I got the uppercase version of the same letters. It’s really twisted.

Reverting my change from above:

$ xmodmap -e "keycode 90 = KP_Insert KP_0 KP_Insert KP_0"

The scripts

To reiterate, the purpose is to turn the keypad’s /, *, - into å, ä and ö.

Their respective keycodes are 106, 63 and 82 (that was pretty random, wasn’t it?), with the following defaults:

keycode 106 = KP_Divide KP_Divide KP_Divide KP_Divide KP_Divide KP_Divide XF86Ungrab KP_Divide KP_Divide XF86Ungrab

keycode 63 = KP_Multiply KP_Multiply KP_Multiply KP_Multiply KP_Multiply KP_Multiply XF86ClearGrab KP_Multiply KP_Multiply XF86ClearGrab

keycode 82 = KP_Subtract KP_Subtract KP_Subtract KP_Subtract KP_Subtract KP_Subtract XF86Prev_VMode KP_Subtract KP_Subtract XF86Prev_VMode

So this is the script to bring back the buttons to normal (which I named “kbnormal”):

#!/bin/bash

echo "Changing the keymap to normal."

echo "The display will now freeze for 7-8 seconds. Don't worry..."

sleep 0.5

/usr/bin/xmodmap - <<EOF

keycode 106 = KP_Divide KP_Divide KP_Divide KP_Divide KP_Divide KP_Divide XF86Ungrab KP_Divide KP_Divide XF86Ungrab

keycode 63 = KP_Multiply KP_Multiply KP_Multiply KP_Multiply KP_Multiply KP_Multiply XF86ClearGrab KP_Multiply KP_Multiply XF86ClearGrab

keycode 82 = KP_Subtract KP_Subtract KP_Subtract KP_Subtract KP_Subtract KP_Subtract XF86Prev_VMode KP_Subtract KP_Subtract XF86Prev_VMode

EOF

And finally, this is the script that turns these buttons into å, ä and ö:

#!/bin/bash

echo "Changing the keymap to swedish letters on numpad's /, *, -"

echo "The display will now freeze for 7-8 seconds. Don't worry..."

sleep 0.5

/usr/bin/xmodmap - <<EOF

keycode 106 = aring Aring aring Aring

keycode 63 = adiaeresis Adiaeresis adiaeresis Adiaeresis

keycode 82 = odiaeresis Odiaeresis odiaeresis Odiaeresis

EOF

The change is temporary, and that’s fine for my purposes. I know a lot of people have been struggling with making the changes permanent, and I have no idea how to do that, nor do I have any motivation to find out.

See the notification that the screen will freeze for a few seconds? It’s for real. The mouse pointer keeps moving normally, but the desktop, including the clock, freezes completely for a few seconds, sometimes up to twenty seconds.

That rings an old bell. Like, a six-year-old bell. I’ve been wondering why moving the mouse pointer in and out of a VMWare window causes the entire screen to freeze for a minute. The reason is that xmodmap calls XChangeKeyboardMapping() for every “keycode” row, and each such call causes the X server to send a MappingNotify event to all clients. These clients may process such an event by requesting an update of their view of the keymap.

This was probably reasonable in the good old times when people had a few windows on a desktop. But with multiple desktops and a gazillion windows and small apps on each, I suppose this isn’t peanuts anymore. The more virtual desktops I have, and the more windows on each, the longer the freeze. I’m not sure if this issue is related to Linux Mint, Cinnamon, or to the fact that it’s so convenient to have a lot of open windows with Cinnamon. While writing this, I have 40 windows spread over five virtual desktops.

Reducing the freeze time

As just mentioned, the problem is that xmodmap calls XChangeKeyboardMapping() for each key mapping that needs to be modified. But XChangeKeyboardMapping() can also be used to change a range of key mappings. In fact, it’s possible to set the entire map in one call. This was the not-so-elegant workaround that was suggested on a Stackexchange page.

So the strategy is now like this: Read the entire keymap into an array, make the changes in this array, and then write it back in one call. If I had the motivation and patience to modify xmodmap, this is what I would have done. But since I’m in here for solving my own little problem, this is the C program that does the same as the script above. Only with a freeze that is three times shorter: One call to XChangeKeyboardMapping() instead of three.

#include <stdlib.h>

#include <stdio.h>

#include <unistd.h>

#include <X11/XKBlib.h>

#define update_per_code 4

static const struct {

int keycode;

char *names[update_per_code];

} updatelist [] = {

{ 106, { "aring", "Aring", "aring", "Aring" }},

{ 63, { "adiaeresis", "Adiaeresis", "adiaeresis", "Adiaeresis" }},

{ 82, { "odiaeresis", "Odiaeresis", "odiaeresis", "Odiaeresis" }},

{ 0 } /* terminator: the loop below stops at keycode 0 */

};

int main(int argc, char *argv[]) {

int rc = 0;

int minkey, maxkey;

int keysyms_per_keycode;

int i, j;

Display *dpy = NULL;

KeySym *keysyms = NULL;

dpy = XOpenDisplay(NULL);

if (!dpy) {

fprintf(stderr, "Failed to open default display.\n");

rc = 1;

goto end;

}

XDisplayKeycodes(dpy, &minkey, &maxkey);

keysyms = XGetKeyboardMapping(dpy, minkey, 1+maxkey-minkey,

&keysyms_per_keycode);

if (!keysyms) {

fprintf(stderr, "Failed to get current keyboard mapping table\n");

rc = 1;

goto end;

}

if (keysyms_per_keycode < update_per_code) {

fprintf(stderr, "Number of keysyms per key is %d, no room for %d as in internal table.\n", keysyms_per_keycode, update_per_code);

rc = 1;

goto end;

}

for (i=0; updatelist[i].keycode; i++) {

int key = updatelist[i].keycode;

int offset = (key - minkey) * keysyms_per_keycode;

if ((key < minkey) || (key > maxkey)) {

fprintf(stderr, "Requested keycode %d is out of range.\n", key);

rc = 1;

goto end;

}

for (j=0; j < update_per_code; j++, offset++) {

char *name = updatelist[i].names[j];

KeySym k = XStringToKeysym(name);

if (k == NoSymbol) {

fprintf(stderr, "Invalid key symbol name \"%s\" for key %d\n",

name, key);

rc = 1;

goto end;

}

keysyms[offset] = k;

}

}

fprintf(stderr, "The X display may freeze for a few seconds now. "

"Don't worry...\n");

usleep(100000);

rc = XChangeKeyboardMapping(dpy, minkey, keysyms_per_keycode,

keysyms, 1+maxkey-minkey);

if (rc)

fprintf(stderr, "Failed to update keyboard mapping table.\n");

end:

if (keysyms)

XFree(keysyms);

if (dpy)

XCloseDisplay(dpy);

return rc;

}

It might be necessary to install Xlib’s development package, for example

# apt install libx11-dev

Compilation with:

$ gcc keymapper.c -o keymapper -Wall -O3 -lX11

The only obvious change you may want to make is to the entries in updatelist[], so that they match your needs.

Note that this program only changes the first four keysyms of each keycode (or whatever update_per_code is set to). Rationale: I’ve never managed to activate the other entries, so why touch them?
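The heart of the C program above is flat-array indexing. XGetKeyboardMapping() returns keysyms_per_keycode entries per keycode, starting at minkey, so keysym j of keycode k sits at (k - minkey) * keysyms_per_keycode + j. The following minimal Python model shows the same in-memory patch step without needing an X server. patch_keymap is a hypothetical name, and the table is a plain list standing in for the KeySym array.

```python
def patch_keymap(table, minkey, per_keycode, updates):
    """Return a patched copy of a flat keysym table (the XGetKeyboardMapping() layout).

    `table` holds `per_keycode` entries per keycode, starting at `minkey`;
    `updates` maps keycode -> keysyms that replace its leading entries.
    """
    if per_keycode <= 0 or len(table) % per_keycode:
        raise ValueError("table length must be a multiple of per_keycode")
    maxkey = minkey + len(table) // per_keycode - 1
    new = list(table)
    for key, syms in updates.items():
        if not minkey <= key <= maxkey:
            raise ValueError("keycode %d is out of range" % key)
        if len(syms) > per_keycode:
            raise ValueError("no room for %d keysyms on keycode %d" % (len(syms), key))
        offset = (key - minkey) * per_keycode  # same formula as in the C code
        new[offset:offset + len(syms)] = syms  # remaining entries keep their values
    return new
```

The whole patched table then goes back to the server in a single XChangeKeyboardMapping() call, as the C program does. This is why only one MappingNotify storm occurs instead of three.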

Introduction

These are my notes while setting up ZTE’s ONT for GPON on a Linux desktop computer. I bought this thing from AliExpress for 20 USD, and got a cardboard box with the ONT itself, a power supply and a LAN cable.

This is a follow-up from a previous post of mine. I originally got a Nokia ONT when the fiber was installed, but I wanted an ONT that I can talk with. In particular, one that gives some info about the fiber link. Just in case something happens.

The cable of the 12V/0.5A power supply was too short for me, so I remained with the previous one (from Nokia’s ONT).

The software version of the ONT is V6.0.1P1T12 out of the box, which is certified by Bezeq. Couldn’t be better.

By default, this ONT acts as a GPON to Ethernet bridge. However, judging by its menus on the browser interface, it can also act as a router with one Ethernet port: If so requested, it apparently takes care of the PPPoE connection by itself, and is capable of supplying the whole package that comes with a router: NAT, a firewall, a DHCP server, DNS and whatnot. I didn’t try any of this, so I don’t know how well it works. But it’s worth keeping these possibilities in mind.

In order to reset the ONT’s settings to the default values, press the RESET button with a needle for at least five seconds while the device is on (according to the user manual, didn’t try this).

So how come this thing isn’t sold at ten times the price, rebranded by some big shot company? I think the reason is this:

The PON LED is horribly misleading

According to the user guide, the PON LED is off when the registration has failed, blinking when registration is ongoing, and steadily on when registration is successful.

The problem is that registration doesn’t mean authentication. In other words, the fact that the PON LED is steadily on doesn’t mean that the other side (the OLT) is ready to start a PPPoE session. In particular, if the PON serial number is not set up correctly, the PON LED will be steadily on, even though the fiber link provider has rejected the connection.

Nokia’s modem’s PON LED blinks when the serial number is wrong, and that makes sense: The PON is not good to go unless the authentication is successful. I suppose most other ONTs behave this way.

The only way to tell is through the browser interface. More about this below.

Browser interface

The ONT responds to pings and http at port 80 on address 192.168.1.1. A Chinese login screen appears. Switch the language by clicking where it says “English” at the login box’s upper-right corner.

The username and password are both “admin” by default.

As already mentioned, this ONT has a lot of features. For me, there were two important ones: The ability to change the PON serial number, so I can replace ONTs without involving my ISP, and the ability to monitor the fiber link’s status and health. This can be crucial when spotting a problem:

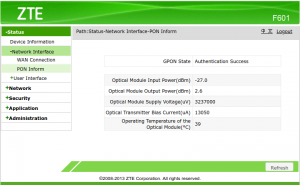

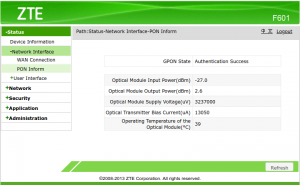


Note that in this screenshot, the GPON State is “Authentication Success”. This is what it should be. If it says “Registration Complete”, it means that the ONT made it through a few stages of the setup process, but the link isn’t up yet: The other side probably rejected the serial number (and/or the password, if one is used). And by the way, when the fiber wasn’t connected at all, it said “Init State”.

Also note the input power, around -27 dBm in my case. It depends on a lot of factors, among others the physical distance to the other fiber transmitter. It can also change if optical splitters are added or removed on the way. All this is normal. But each such change indicates that something has happened on the optical link. So it’s a good way to tell if people are fiddling with the optics, for better and for worse.
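For a sense of scale, dBm is a logarithmic unit relative to 1 mW, so P[mW] = 10^(dBm/10). An ideal, lossless 1:n splitter divides the power by n, which costs 10·log10(n) dB. Real splitters lose somewhat more, so the sketch below gives a lower bound on the drop. The function names are mine.

```python
import math


def dbm_to_microwatts(dbm):
    """Convert optical power in dBm to microwatts: P[mW] = 10 ** (dBm / 10)."""
    return 1000.0 * 10 ** (dbm / 10.0)


def ideal_split_loss_db(n):
    """Loss of an ideal, lossless 1:n splitter in dB (real splitters lose more)."""
    return 10.0 * math.log10(n)
```

So -27 dBm is about 2 µW of light. An extra 1:2 split somewhere along the way would push the reading down by at least about 3 dB, to roughly -30 dBm.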

These are the changes I made on my box, relative to the default:

- I turned the firewall off at Security > Firewall (it was at “Low”). It’s actually possible to define custom rules, most likely based upon iptables. I don’t think the firewall operates when the ONT functions as a bridge, but I turned it off just to be sure it won’t mess things up.

- In Security > Service Control, there’s an option for telnet access from WAN. Removed it.

- In BPDU, disabled BPDU forwarding.

I don’t think any of these changes make any difference when using the ONT as a bridge.

Setting the PON serial number

Note to self: Look for a file named pon-serial-numbers.txt for the previous and new PON serial numbers.

When I first connected the ONT to the fiber, I was surprised to see that the PON LED flashed and then went steady. Say what? The network accepted the ONT’s default serial number without asking any questions?

I then looked at the “PON inform” status page (Status > Network Interface > PON Inform), and it said “Registration Complete”. Wow. That looked really reassuring. However, pppd was less happy with the situation. In fact, it had nobody to talk with:

Aug 06 10:56:21 pppd[36167]: Plugin rp-pppoe.so loaded.

Aug 06 10:56:21 pppd[36167]: RP-PPPoE plugin version 3.8p compiled against pppd 2.4.5

Aug 06 10:56:21 pppd[36168]: pppd 2.4.5 started by root, uid 0

Aug 06 10:56:56 pppd[36168]: Timeout waiting for PADO packets

Aug 06 10:56:56 pppd[36168]: Unable to complete PPPoE Discovery

Aug 06 10:56:56 pppd[36168]: Exit.

Complete silence from the other side. I was being ignored bluntly.

Note that I’m discussing the PPPoE topic in another post of mine.

Solution: I went into the Network > PON > SN menu in the browser interface, and copied the serial number that was printed on my previous ONT in full. It was something like ALCLf8123456. That is, four capital letters, followed by 8 hex digits. There’s also a place to fill in the password. Bezeq’s fiber network apparently doesn’t use a password, so I just wrote “none”. Clicked the “Submit” button, the ONT rebooted (it takes about a minute), and after that the Internet connection was up and running.
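The format described above is easy to check before typing it into the browser interface. As far as I know, ITU-T G.984.3 defines the ONU serial number as a 4-byte vendor ID followed by a 4-byte vendor-specific number. The four letters are therefore just the ASCII form of the first four bytes, and some equipment displays the whole thing as 16 hex digits instead. Below is a minimal Python sketch under that assumption. pon_sn_to_hex is a hypothetical name, and ALCLf8123456 is the made-up example from above, not a real serial number.

```python
import re

# Four capital letters (vendor ID) followed by 8 hex digits, e.g. "ALCLf8123456"
_PON_SN = re.compile(r"([A-Z]{4})([0-9A-Fa-f]{8})")


def pon_sn_to_hex(sn):
    """Return the 16-hex-digit form of a PON serial number (vendor ID as ASCII hex)."""
    m = _PON_SN.fullmatch(sn.strip())
    if m is None:
        raise ValueError("not in the 4-letter + 8-hex-digit format: %r" % sn)
    vendor, serial = m.groups()
    return vendor.encode("ascii").hex().upper() + serial.upper()
```

For example, the vendor ID ALCL becomes 414C434C, so the example above reads 414C434CF8123456 in the all-hex form.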

And of course, the GPON State appeared as “Authentication Success” in the “PON Inform” page.

So don’t trust the PON LED, and don’t get deceived by the words “Registration Complete”. Unless you feed the serial number that the fiber network provider expects, there’s nobody talking with you.

In fact, there’s an option in the browser interface to turn off the LEDs altogether. It seemed like a weird thing to me at first, but maybe this is the Chinese workaround for this issue with the PON LED.

Bottom line

With the Internet link up and running, I ran a speed test. The result was exactly the same as with the Nokia ONT.

So the final verdict is that this is a really good ONT, which provides a lot of features and information. The only problem it apparently has is the confusing information regarding the PON link’s status when the serial number is incorrect. Which is probably the reason why this cute thing remains a Chinese no-name product.

I checked my Apache access logs and noticed that there were no indications of people clicking links between two of my websites. This was extremely odd, because at least a few such clicks should clearly have happened.

At first, I thought it was because of the rel="noopener" attribute in the link. It shouldn’t have anything to do with this, but maybe it did? No, that wasn’t the problem.

The issue was that if the link goes from an https site to a non-https site, the referrer is blank. Why? Not 100% clear, but this is what Mozilla’s guidelines say. It probably has to do with pages with sensitive URLs (e.g. pages for resetting passwords). If the URL leaks through a non-secure http link (say, to a third-party server that supplies images, fonts and other stuff for the page), an eavesdropping attacker might get access to this URL.

And it so happens that this blog is a non-https site as of writing this. Mainly because I’m lazy.

On the other hand, when you read this, the site has been moved to https. Lazy or not, the missing referrer was the motivation I needed to finally do this.

Was it worth the effort? Well, so-so. Both Chrome and Firefox submit a blank referrer if the link points to a non-https address, even if a redirection to an https address follows. In other words, all existing links to a plain http address will remain hidden. But new links are expected to point to https addresses, so at least they will be visible.

Well, partly: My own anecdotal test showed that Firefox indeed submits the full URL of the referrer for an https link, but Chrome gives away only the domain of the linking site. This is more secure, of course: Don’t disclose a sensitive URL to a third party. And besides, if you want to know who links to your page, go to Google’s Search Console. So chopping off the referrer also serves Google to some extent.
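What the browser sends is decided by its referrer policy, as defined in the W3C Referrer Policy specification. The two behaviors above appear to match two of its policies.

The first is no-referrer-when-downgrade, the long-standing default. It sends the full URL, except that nothing is sent on an https-to-http link. The second is strict-origin-when-cross-origin, which current Chrome uses by default. It sends the full URL within the same origin, only the origin across sites, and nothing on a downgrade.

Below is a minimal Python sketch of just those two rules. It assumes plain URLs without user info, and ignores redirects, default-port normalization and per-page Referrer-Policy headers. The function name is made up for illustration.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def referrer_for(policy: str, source: str, target: str) -> Optional[str]:
    """Return the Referer a browser would send from source to target, or None."""
    src, dst = urlsplit(source), urlsplit(target)
    # Full referrer: the linking page's URL without its #fragment.
    full = urlunsplit((src.scheme, src.netloc, src.path or "/", src.query, ""))
    # Origin-only referrer: scheme + host (+ port), with path "/".
    origin = urlunsplit((src.scheme, src.netloc, "/", "", ""))
    # A "downgrade" is following a link from an https page to a non-https URL.
    downgrade = src.scheme == "https" and dst.scheme != "https"
    same_origin = (src.scheme, src.netloc) == (dst.scheme, dst.netloc)

    if policy == "no-referrer-when-downgrade":
        return None if downgrade else full
    if policy == "strict-origin-when-cross-origin":
        if same_origin:
            return full
        return None if downgrade else origin
    raise ValueError(f"policy not covered by this sketch: {policy}")


if __name__ == "__main__":
    page = "https://a.example/post?id=1#top"
    for policy in ("no-referrer-when-downgrade", "strict-origin-when-cross-origin"):
        print(policy, referrer_for(policy, page, "https://b.example/"),
              referrer_for(policy, page, "http://b.example/"))
```

Under both policies, an https page linking to an http address yields no referrer at all. That is the blank referrer found in the access logs.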

Bottom line: It seems like the Referer thing is slowly fading away.

Introduction

Having switched from ADSL to FTTH (fiber to the home), I was delighted to discover that the same script that set up the pppoe connection for ADSL also works with the new fiber connection. As the title of this post implies, I opted out of the external router that the ISP provided, and my Linux desktop box does the pppoe stuff instead. All I use is a simple ONT (“fiber bridge”), which relays Ethernet packets between the fiber and an Ethernet jack.

This post is a spin-off from another post of mine, which discusses the transition to fiber in general.

Why am I making things difficult, you may ask? Actually, if you’re reading this, there’s a good chance that you want to do the same, but anyhow: The reason for opting out of an external router is the possibility to run a sniffer on the pppoe negotiation if something goes wrong. That way I can tell the difference between rejected credentials and an ISP that doesn’t talk with me at all. This will hopefully help bring the link back quicker if and when it goes down.

But then it turned out that even though the old setting works, the performance is quite bad: It was all nice when the data rate was limited to 15 Mb/s, but 1000 Mb/s is a different story.

So here’s my own little cookbook to pppoe for FTTH on a Linux desktop.

The “before”

The commands I used for ADSL were:

/usr/sbin/pppd pty /usr/local/etc/ADSL-pppoe linkname ADSL-$$ user "myusername@013net" remotename "10.0.0.138 RELAY_PPP1" defaultroute netmask 255.0.0.0 mtu 1452 mru 1452 noauth lcp-echo-interval 60 lcp-echo-failure 3 nobsdcomp usepeerdns

such that /usr/local/etc/ADSL-pppoe reads:

#!/bin/bash

/usr/sbin/pppoe -p /var/run/pppoe-adsl.pid.pppoe -I eth1 -T 80 -U -m 1412

And of course, replace myusername@013net with your own username and assign the password in /etc/ppp/pap-secrets. Hopefully needless to say, the ADSL modem was connected to eth1.

This ran nicely for years with pppd version 2.4.5 and PPPoE version 3.10, which are both very old. But never mind the versions of the software. pppoe and pppd are so established that I doubt any significant changes have been made over the last 15 years or so.

Surprisingly enough, I got only 288 Mb/s download and 100 Mb/s upload on Netvision’s own speed test. The download speed should have been 1000 Mb/s (and the upload speed is as expected).

I also noted that pppoe ran at 75% CPU during the speed test, which made me suspect that it’s the bottleneck. Spoiler: It indeed was.

I tried a newer pppd (version 2.4.7) and pppoe (version 3.11) but that made no difference. As one would expect.

Superfluous options

Note that pppd gets unnecessary options that set the MTU and MRU to 1452 bytes. I suspected that these were the reason for pppoe working hard, so I tried without them. But there was no difference. They are redundant nevertheless.

Then there’s the remotename "10.0.0.138 RELAY_PPP1" part, and frankly, I don’t know why it’s there. Probably a leftover from the ADSL days.

Another thing is pppoe’s “-m 1412” flag. It causes pppoe to mangle TCP packets with the SYN flag set, so that their MSS option is set to 1412 bytes rather than what was originally requested.

A quick reminder: The MSS option is the maximal TCP payload (the segment’s data, excluding the IP and TCP headers) that the sender of the option is willing to receive from the TCP stack on the other side. It tells the other side not to create TCP segments larger than this, so that arriving packets don’t need to be fragmented on the way.

It is actually a good idea to mangle the MSS on outgoing TCP packets, as explained further below. But the 1412-byte value is archaic, probably copied from the pppoe man page, or everyone just copies from each other. 1452 is a more sensible figure. But it doesn’t matter all that much, because I’m about to scrap the pppoe command altogether. Read on.
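The arithmetic behind these figures is short, and it also explains the 1492-byte MTU of a PPPoE link. Here is a sketch that assumes IPv4 and TCP headers without options:

```python
# Header sizes in bytes, assuming IPv4 and TCP without options.
ETHERNET_MTU = 1500   # standard Ethernet payload size
PPPOE_HEADER = 6      # PPPoE session header: ver/type, code, session ID, length
PPP_PROTOCOL = 2      # PPP protocol field carried inside the PPPoE payload
IPV4_HEADER = 20
TCP_HEADER = 20

# MTU left for IP packets on the PPPoE link.
pppoe_mtu = ETHERNET_MTU - PPPOE_HEADER - PPP_PROTOCOL
# MSS = MTU minus the IP and TCP headers.
ethernet_mss = ETHERNET_MTU - IPV4_HEADER - TCP_HEADER
pppoe_mss = pppoe_mtu - IPV4_HEADER - TCP_HEADER

print(f"PPPoE MTU: {pppoe_mtu}")
print(f"MSS over plain Ethernet: {ethernet_mss}")
print(f"MSS over PPPoE: {pppoe_mss}")
```

This gives 1492 for the PPPoE MTU, 1460 for plain Ethernet and 1452 over PPPoE. With 1412, the MSS is 40 bytes lower than it needs to be, which is harmless but wasteful.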

Opening the bottleneck

The solution is simple: Use pppoe in the kernel.

There’s a whole list of kernel modules that need to be available (or compiled into the kernel), but any sane distribution kernel will have them included. I suppose CONFIG_PPPOE is the kernel option to check out.
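A quick way to check this is to look for CONFIG_PPP and CONFIG_PPPOE in the running kernel’s configuration. Here is a minimal Python sketch with function names made up for illustration.

It assumes the configuration is available at /boot/config-<release>, which is common on many distributions. The fallback is /proc/config.gz, which exists only if the kernel was built with CONFIG_IKCONFIG_PROC.

```python
import gzip
import platform
from typing import Dict, Optional

REQUIRED = ("CONFIG_PPP", "CONFIG_PPPOE")


def parse_kernel_config(text: str) -> Dict[str, str]:
    """Map CONFIG_* names to values; '# CONFIG_X is not set' becomes 'n'."""
    options = {}
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("CONFIG_") and "=" in line:
            name, value = line.split("=", 1)
            options[name] = value
        elif line.startswith("# CONFIG_") and line.endswith(" is not set"):
            options[line[2:-len(" is not set")]] = "n"
    return options


def pppoe_available(options: Dict[str, str]) -> bool:
    """True if PPP and PPPoE are built in ('y') or built as modules ('m')."""
    return all(options.get(name) in ("y", "m") for name in REQUIRED)


def read_running_config() -> Optional[str]:
    """Try the usual locations; both paths are conventions, not guarantees."""
    try:
        with open(f"/boot/config-{platform.release()}") as f:
            return f.read()
    except OSError:
        pass
    try:
        with gzip.open("/proc/config.gz", "rt") as f:
            return f.read()
    except OSError:
        return None


if __name__ == "__main__":
    text = read_running_config()
    if text is None:
        print("Kernel config not found in the assumed locations")
    else:
        print("PPPoE support in kernel:", pppoe_available(parse_kernel_config(text)))
```

A “y” or “m” for both options is what matters here. Whether the modules actually load is a different check.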

The second thing is that pppd should have the rp-pppoe.so plugin available. Once again, I don’t think you’ll find a distribution package for pppd without it.

With these at hand, I changed the pppd command to:

/usr/sbin/pppd plugin rp-pppoe.so eth1 linkname ADSL-$$ user "myusername@013net" defaultroute netmask 255.0.0.0 noauth lcp-echo-interval 60 lcp-echo-failure 3 nobsdcomp usepeerdns

That’s exactly the same as above, but instead of the “pty” option that calls an external script, I use the plugin to talk with eth1 directly. No pppoe executable to eat CPU, and the transmission speed goes easily up to >900 Mb/s without any dramatic CPU consumption visible (“top” reports 8% system CPU at worst, and that’s global to all CPUs).
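When pppd is started from a script rather than typed into a shell, it can help to keep the arguments as a list, so that nothing needs quoting. Here is a minimal Python sketch that builds the kernel-PPPoE command above. The function name is hypothetical. The shell’s $$ in the linkname is replaced by the Python process’s own PID, on the assumption that any unique suffix will do.

```python
import os
import shlex
from typing import List


def pppd_argv(iface: str, user: str) -> List[str]:
    """argv for pppd with the in-kernel PPPoE plugin, mirroring the command above."""
    return [
        "/usr/sbin/pppd",
        "plugin", "rp-pppoe.so", iface,       # kernel PPPoE instead of "pty"
        "linkname", f"ADSL-{os.getpid()}",    # stands in for the shell's $$
        "user", user,                         # password is in /etc/ppp/pap-secrets
        "defaultroute", "netmask", "255.0.0.0", "noauth",
        "lcp-echo-interval", "60", "lcp-echo-failure", "3",
        "nobsdcomp", "usepeerdns",
    ]


if __name__ == "__main__":
    argv = pppd_argv("eth1", "myusername@013net")
    print(shlex.join(argv))  # shell-quoted for display only
    # To actually start it (as root): subprocess.run(argv, check=True)
```

Passing a list to subprocess bypasses the shell entirely. As a result, a username containing odd characters can’t be misparsed.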

I also removed the options for setting MTU and MRU in the pppd command. ppp0 now presents an MTU of 1492, which I suppose is correct. I mean, why fiddle with this? And I ditched the “remotename” option too.

Once again, the ONT (“fiber bridge”) was connected to eth1.

Samples of log output

This is the comparison between pppd’s output with pppoe as an executable and with the kernel’s pppoe module:

First, the old way, with pppoe executable:

Using interface ppp0

Connect: ppp0 <--> /dev/pts/13

PAP authentication succeeded

local IP address 109.186.24.16

remote IP address 212.143.8.104

primary DNS address 194.90.0.1

secondary DNS address 212.143.0.1

And with pppoe inside the kernel:

Plugin rp-pppoe.so loaded.

RP-PPPoE plugin version 3.8p compiled against pppd 2.4.5

PPP session is 3865

Connected to 00:1a:f0:87:12:34 via interface eth1

Using interface ppp0

Connect: ppp0 <--> eth1

PAP authentication succeeded

peer from calling number 00:1A:F0:87:12:34 authorized

local IP address 109.186.4.18

remote IP address 212.143.8.104

primary DNS address 194.90.0.1

secondary DNS address 212.143.0.1
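A script may need the negotiated addresses, say, to update a firewall rule. They can be picked out of pppd’s messages. Here is a minimal Python sketch that assumes the messages look exactly as shown above, optionally preceded by a syslog prefix. The key names are made up for illustration.

```python
import re
from typing import Dict, Iterable

# Message texts as they appear in pppd's output above. search() tolerates an
# optional syslog prefix such as "Jan  1 00:00:00 host pppd[1234]: ".
PATTERNS = {
    "session": re.compile(r"PPP session is (\d+)"),
    "local_ip": re.compile(r"local IP address (\S+)"),
    "remote_ip": re.compile(r"remote IP address (\S+)"),
    "dns1": re.compile(r"primary DNS address (\S+)"),
    "dns2": re.compile(r"secondary DNS address (\S+)"),
}


def parse_pppd_output(lines: Iterable[str]) -> Dict[str, str]:
    """Collect session ID and addresses from pppd's log lines."""
    info = {}
    for line in lines:
        for key, pattern in PATTERNS.items():
            m = pattern.search(line)
            if m:
                info[key] = m.group(1)
    return info


if __name__ == "__main__":
    sample = [
        "PPP session is 3865",
        "local IP address 109.186.4.18",
        "remote IP address 212.143.8.104",
        "primary DNS address 194.90.0.1",
        "secondary DNS address 212.143.0.1",
    ]
    print(parse_pppd_output(sample))
```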

The MAC address that is mentioned seems to be owned by Alcatel-Lucent. It is neither my own host’s nor the ONT’s (i.e. the “fiber adapter”). It appears to belong to the link partner over the fiber connection.

And by the way, if the ISP credentials are incorrect, the “Connect X <--> Y” row is followed by “LCP: timeout sending Config-Requests” after about 30 seconds, instead of “PAP authentication succeeded”.
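The “about 30 seconds” fits pppd’s defaults. The man page gives lcp-restart (the retransmission interval for Configure-Requests) a default of 3 seconds. It gives lcp-max-configure (the number of Configure-Requests sent before giving up) a default of 10.

Here is a small sketch of that schedule. It assumes neither option is overridden, and the commands above don’t set them.

```python
# pppd defaults, as documented in its man page (assumed not overridden).
LCP_RESTART_S = 3        # lcp-restart: seconds between Configure-Request retries
LCP_MAX_CONFIGURE = 10   # lcp-max-configure: Configure-Requests before giving up

# The first request goes out at t=0, then one every LCP_RESTART_S seconds.
send_times = [i * LCP_RESTART_S for i in range(LCP_MAX_CONFIGURE)]
# pppd gives up when the last request's restart timer expires.
give_up_at = send_times[-1] + LCP_RESTART_S

print("Configure-Request sent at (s):", send_times)
print("LCP timeout after", give_up_at, "seconds")
```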

Clamping MSS

The pppoe user-space utility had this nice “-m” option that caused all TCP packets with a SYN to be mangled, so that their MSS field was set as required for the pppoe link. But now I’m not using it anymore. How will the MSS field be correct now?

First of all, this is not an issue for packets that are created on the same computer that runs pppd. ppp0’s MTU is checked by the TCP stack, and the MSS is set correctly to 1452.

But forwarded packets come from a source that doesn’t know about ppp0’s reduced MTU. That host sets the MSS according to the NIC that it sees. It can’t know that this MSS may be too large for the pppoe link in the middle.

The solution is to add a rule in the firewall that mangles these packets:

iptables -A FORWARD -o ppp0 -t mangle -p tcp --tcp-flags SYN,RST SYN -j TCPMSS --clamp-mss-to-pmtu

This is more or less copied from iptables’ man page. I added the -o part, because this is relevant only for packets going out to ppp0. No point mangling all forwarded packets.
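To make it concrete what this rule does to a packet, here is a simplified Python sketch that operates on a raw TCP header. It walks the TCP options of a SYN (or SYN-ACK, which also matches “--tcp-flags SYN,RST SYN”) and lowers the MSS option if it exceeds a limit. With --clamp-mss-to-pmtu, that limit is roughly the path MTU minus 40 bytes of IPv4 and TCP headers, i.e. 1452 for ppp0’s MTU of 1492.

This is an illustration, not the kernel’s code. Unlike the real TCPMSS target, it does not update the TCP checksum. make_syn is a hypothetical helper that builds a test header.

```python
import struct

MSS_KIND = 2                     # TCP option kind for Maximum Segment Size
SYN, RST, ACK = 0x02, 0x04, 0x10


def clamp_mss(tcp_header: bytes, max_mss: int) -> bytes:
    """Lower the MSS option of a SYN segment to max_mss, if it is larger.

    Matches like '--tcp-flags SYN,RST SYN' (SYN set, RST clear).
    NOTE: the TCP checksum is not updated in this sketch.
    """
    hdr = bytearray(tcp_header)
    if (hdr[13] & (SYN | RST)) != SYN:
        return bytes(hdr)                 # not a SYN: leave untouched
    header_len = (hdr[12] >> 4) * 4       # data offset field, in bytes
    i = 20                                # options follow the fixed header
    while i < header_len:
        kind = hdr[i]
        if kind == 0:                     # End of Option List
            break
        if kind == 1:                     # No-Operation (1-byte padding)
            i += 1
            continue
        if i + 1 >= header_len or hdr[i + 1] < 2:
            break                         # truncated or malformed option
        length = hdr[i + 1]
        if kind == MSS_KIND and length == 4 and i + 4 <= header_len:
            (mss,) = struct.unpack_from("!H", hdr, i + 2)
            if mss > max_mss:
                struct.pack_into("!H", hdr, i + 2, max_mss)
            break
        i += length
    return bytes(hdr)


def make_syn(mss: int, flags: int = SYN) -> bytes:
    """Hypothetical test helper: 20-byte TCP header plus one MSS option."""
    fixed = struct.pack("!HHIIBBHHH", 40000, 80, 0, 0, 6 << 4, flags, 64240, 0, 0)
    return fixed + struct.pack("!BBH", MSS_KIND, 4, mss)


if __name__ == "__main__":
    ppp0_mtu = 1492
    clamped = clamp_mss(make_syn(1460), ppp0_mtu - 40)
    print("MSS after clamping:", struct.unpack_from("!H", clamped, 22)[0])
```

The option walk is needed because the MSS option isn’t guaranteed to be the first option. NOP padding or other options may precede it.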

A wireshark dump

This is what wireshark shows on the Ethernet card that is connected to the ONT during a successful connection to the ISP. It would most likely have looked the same on an ADSL link.

No. Time Source Destination Protocol Length Info

3 0.142500324 Dell_11:22:33 Broadcast PPPoED 32 Active Discovery Initiation (PADI)

4 0.144309286 Alcatel-_87:12:34 Dell_11:22:33 PPPoED 60 Active Discovery Offer (PADO) AC-Name='203'

5 0.144360515 Dell_11:22:33 Alcatel-_87:12:34 PPPoED 52 Active Discovery Request (PADR)

6 0.146062649 Alcatel-_87:12:34 Dell_11:22:33 PPPoED 60 Active Discovery Session-confirmation (PADS)

7 0.147037263 Dell_11:22:33 Alcatel-_87:12:34 PPP LCP 36 Configuration Request

8 0.192272315 Alcatel-_87:12:34 Dell_11:22:33 PPP LCP 60 Configuration Request

9 0.192290554 Alcatel-_87:12:34 Dell_11:22:33 PPP LCP 60 Configuration Ack

10 0.192335094 Dell_11:22:33 Alcatel-_87:12:34 PPP LCP 40 Configuration Ack

11 0.192516908 Dell_11:22:33 Alcatel-_87:12:34 PPP LCP 30 Echo Request

12 0.192660752 Dell_11:22:33 Alcatel-_87:12:34 PPP PAP 50 Authenticate-Request (Peer-ID='myusername@013net', Password='mypassword')

13 0.201978697 Alcatel-_87:12:34 Dell_11:22:33 PPP LCP 60 Echo Reply

14 0.309272346 Alcatel-_87:12:34 Dell_11:22:33 PPP PAP 60 Authenticate-Ack (Message='')

15 0.309286268 Alcatel-_87:12:34 Dell_11:22:33 PPP IPCP 60 Configuration Request

16 0.309289064 Alcatel-_87:12:34 Dell_11:22:33 PPP IPV6CP 60 Configuration Request

17 0.309398416 Dell_11:22:33 Alcatel-_87:12:34 PPP IPCP 44 Configuration Request

18 0.309429731 Dell_11:22:33 Alcatel-_87:12:34 PPP IPCP 32 Configuration Ack

19 0.309441755 Dell_11:22:33 Alcatel-_87:12:34 PPP LCP 42 Protocol Reject

20 0.315313539 Alcatel-_87:12:34 Dell_11:22:33 PPP IPCP 60 Configuration Nak

21 0.315365821 Dell_11:22:33 Alcatel-_87:12:34 PPP IPCP 44 Configuration Request

22 0.321070570 Alcatel-_87:12:34 Dell_11:22:33 PPP IPCP 60 Configuration Ack

These “Configuration Request” and “Configuration Ack” packets contain a lot of data, of course: This is where the local and remote IP addresses are given, as well as the addresses of the DNS servers.
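For the curious, the IPCP options are simple type-length-value records. Type 3 is IP-Address (RFC 1332), and types 129 and 131 are the primary and secondary DNS (RFC 1877). Each carries a 4-byte IPv4 address.

The exchange above most likely follows the usual pattern. The client asks for 0.0.0.0, the peer answers with a Configuration Nak carrying the real values, and the client requests again with those.

Here is a minimal Python sketch that decodes such a packet. It assumes the bytes start at the IPCP Code field, i.e. after the PPP protocol number 0x8021. build_ipcp is a hypothetical helper for making test input.

```python
import ipaddress
import struct
from typing import Dict, Union

CODES = {1: "Configure-Request", 2: "Configure-Ack",
         3: "Configure-Nak", 4: "Configure-Reject"}
# Option 3 is from RFC 1332; options 129 and 131 are from RFC 1877.
ADDRESS_OPTIONS = {3: "IP-Address", 129: "Primary-DNS", 131: "Secondary-DNS"}


def parse_ipcp(packet: bytes) -> Dict[str, object]:
    """Decode an IPCP packet, starting at its Code byte."""
    code, ident, length = struct.unpack_from("!BBH", packet, 0)
    end = min(length, len(packet))
    options: Dict[str, Union[str, bytes]] = {}
    i = 4                                     # options follow the 4-byte header
    while i + 2 <= end:
        opt_type, opt_len = packet[i], packet[i + 1]
        if opt_len < 2 or i + opt_len > end:
            break                             # malformed option list
        value = bytes(packet[i + 2:i + opt_len])
        if opt_type in ADDRESS_OPTIONS and opt_len == 6:
            options[ADDRESS_OPTIONS[opt_type]] = str(ipaddress.IPv4Address(value))
        else:
            options[f"option-{opt_type}"] = value  # not decoded in this sketch
        i += opt_len
    return {"code": CODES.get(code, str(code)), "id": ident, "options": options}


def build_ipcp(code: int, ident: int, addresses: Dict[int, str]) -> bytes:
    """Hypothetical helper: build an IPCP packet with 4-byte address options."""
    body = b"".join(struct.pack("!BB", t, 6) + ipaddress.IPv4Address(a).packed
                    for t, a in addresses.items())
    return struct.pack("!BBH", code, ident, 4 + len(body)) + body


if __name__ == "__main__":
    pkt = build_ipcp(2, 1, {3: "109.186.4.18", 129: "194.90.0.1", 131: "212.143.0.1"})
    print(parse_ipcp(pkt))
```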

Some random notes

- On a typical LAN connection over Ethernet, MSS is set to 1460. The typical value for a pppoe connection is 1452, 8 bytes lower.

- Add “nodetach” to pppd’s command for a (debug) foreground session.

- Add “dump” to pppd’s command to see all options in effect (from option file and command line combined).