Just a few notes about getting the sound working.

Pulseaudio

Pulseaudio belongs to the Linux Windowization era, meaning there are plenty of instructions of what-to-do-if but very little info about how the machinery works. Because it never fails, right? Who needs that info?

My notes about problems with sound from Firefox are in a different post.

Important: Any user needing to access Pulseaudio sound must belong to the “audio” group, since the device files in /dev/snd/ need to be opened.

Anyhow, if this is what you get:

$ aplay -D hw:0,0 file.wav

aplay: main:654: audio open error: Device or resource busy

Then just go

$ killall -9 pulseaudio

or

$ pulseaudio -k

(which is a pleasing action for some reason) and the pulseaudio daemon will (unfortunately…!) restart as a result of some other will-always-work hocus-pocus daemon, whose identity I may still need to find (rtkit?).

To prevent pulseaudio from restarting all the time, edit /etc/pulse/client.conf and change “autospawn = yes” to “autospawn = no” (possibly uncomment the line if necessary). Note that in the absence of the daemon, the volume control applet goes away.
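The edit described above can also be scripted. This is only a minimal sketch, assuming the file contains an autospawn line (commented out or not); the function name disable_autospawn is made up for this example and is not part of PulseAudio. Trying it on a copy of the file first is probably wise.

```shell
# disable_autospawn FILE: rewrite any "autospawn = ..." line (commented out
# or not) into "autospawn = no". Hypothetical helper, not a PulseAudio tool.
disable_autospawn() {
    conf="$1"
    tmp="$(mktemp)" || return 1
    # Drop leading ';', '#' and blanks, then force the value to "no"
    if sed -E 's/^[;#[:space:]]*autospawn[[:space:]]*=.*$/autospawn = no/' "$conf" > "$tmp"; then
        cat "$tmp" > "$conf"   # overwrite in place, keeping owner and permissions
    fi
    rm -f "$tmp"
}
```

Usage would be something like "disable_autospawn /etc/pulse/client.conf" as root. Lines that don't mention autospawn are left untouched.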

To start it again, go

$ pulseaudio -D

Update: There’s the pacmd command-line utility, which supplies pretty useful info about what’s going on internally. If it says “No PulseAudio daemon running, or not running as session daemon” just killall -9 pulseaudio. That seems to be the solution for everything.

Using aplay and lsof, I managed to figure out that hw:0,1 (Card 0, Device 1) is in fact /dev/snd/pcmC0D1p. To check who’s using my default sound device, go

$ lsof /dev/snd/pcmC0D0p

Pulseaudio may be in the list, because it’s running the device. When running aplay, it takes the file. Sometimes nobody does. I’m not sure what’s going on here.
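The mapping between an ALSA hw:CARD,DEVICE name and its playback device file can be written down as a tiny helper. A sketch, assuming the naming pattern observed above holds in general; alsa_pcm_node is a made-up name.

```shell
# alsa_pcm_node hw:CARD,DEVICE -> /dev/snd/pcmC<CARD>D<DEVICE>p
# (the trailing "p" stands for playback)
alsa_pcm_node() {
    spec="${1#hw:}"        # "hw:0,1" -> "0,1"
    card="${spec%%,*}"     # part before the comma
    dev="${spec#*,}"       # part after the comma
    printf '/dev/snd/pcmC%sD%sp\n' "$card" "$dev"
}

alsa_pcm_node hw:0,1    # prints /dev/snd/pcmC0D1p
```

With this, checking who holds the default device becomes lsof "$(alsa_pcm_node hw:0,0)".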

And as silly as this comment is: To change the output sink, just use Gnome’s Preferences > Sound and set it up there. Also try “pavucontrol” from command line for a slightly different GUI tool.

ALSA

For (too much) information about the audio hardware, run alsa-info at command prompt.

The devices on my system:

$ aplay -l

**** List of PLAYBACK Hardware Devices ****

card 0: Intel [HDA Intel], device 0: ALC888 Analog [ALC888 Analog]

Subdevices: 0/1

Subdevice #0: subdevice #0

card 0: Intel [HDA Intel], device 1: ALC888 Digital [ALC888 Digital]

Subdevices: 1/1

Subdevice #0: subdevice #0

card 1: HDMI [HDA ATI HDMI], device 3: ATI HDMI [ATI HDMI]

Subdevices: 1/1

Subdevice #0: subdevice #0

Jack

The idea about Jack is to allow the user to route sound sources to sinks, so there is better control of what goes where. This makes sense in particular when MIDI devices are involved, since picking the MIDI player can make a significant change.

To work correctly, Jack relies on the audio group, which the user of the Jack application must belong to. Prepare for a relogin when fiddling with this.

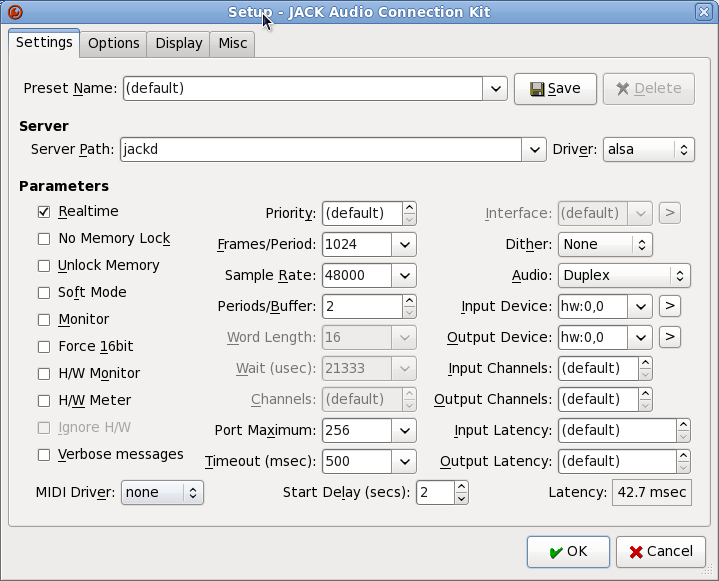

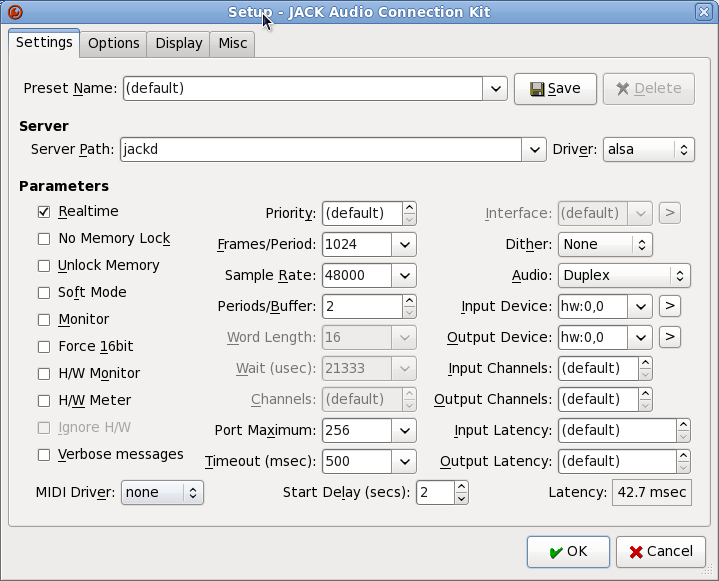

Applications requiring Jack must have the Jack server running before being executed. QJackCtl is the preferred tool for that. Settings: Input/output device is hw:0,0 and sample rate 48000. Then press “Start” to kick off the server.

Jack needs to be exclusive on the hardware device, so if another application is using hw:0,0 jackd will not start, resulting in annoying periodic popup messages by QJackCtl. To find out who’s to blame, see notes about Pulseaudio above.

Also, if it manages to start, no other sound application (including console alerts) will be able to play any sound. Did I say annoying? Or hiJack?

This is what happens when jackd is running, making all other sounds die out.

$ lsof /dev/snd/pcmC0D0p

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

jackd 14825 eli mem CHR 116,7 14591 /dev/snd/pcmC0D0p

jackd 14825 eli 10u CHR 116,7 0t0 14591 /dev/snd/pcmC0D0p

And finally, this is the setup screen that worked for me:

Audacity

Can have its audio devices set to “default”. hw:0,0 has been used in the past to solve noisy sound problems, but it looks like killing pulseaudio is much better (and more fun).

LMMS

The GUI looks a bit like those cars with ultraviolet lights underneath. More like a toy, and not a professional tool. But judging from its variety of sound sources (some of which are outright stupid: Commodore 64 emulation? Come on) and its by-programmers-for-programmers feel, I gave it a go. So it’s indeed mostly a nice toy: A fantastic variety of sounds, fun editing, but if you want to insert a few bars in the middle of an edited song, there’s no way to just make some space. No bulk operations, as needed when you really want to do something serious. So as of v0.48 it’s nice to play with, but not really a workhorse. The real-life production scenarios are not handled yet (in particular, moving and copying large portions). The undo operation is also only partially supported, so a simple mouse click can easily destroy some work irrecoverably. Having said all this, I hope its developers will stick to it, and I will take all my criticism back in a year or so. It looks like they are on the right path.

I was surprised to find out that the output format is binary, but that’s just compressed XML. If hand editing is desired, it’s possible to save to .mmp rather than .mmpz.

Ah, and using ZynAddSubFX as a sound bank, I ran into a bug causing notes to be stuck playing indefinitely during playback and conversion to WAV. This happens at random. Just some note refuses to release until reloading the entire project. Making WAVs is impossible with this bug, of course. But it looks like I know how to fix this.

Rosegarden

It’s maybe a wonderful application, but I have to admit that its dependency on Jack made it far less attractive compared with LMMS. Having all pulseaudio dependent applications die out is not fun. The alternative would be to connect all other applications to Jack, which I would maybe consider had I been a real sound guy.

… or when Paste does the same. Everything in the targeted track is deleted, and replaced with the snippet, which we wanted spliced in or overwritten. But not instead of everything!

Well, this is not a bug, but a feature: This happens when there are “in” and “out” markers (or even worse: only one of them) in the editing timeline. When these are present, Cinelerra interprets these as the points to which the snippet should go. If the “in” point happened to be at the beginning of the track, all goes away.

Simple solution: Remove “in” and “out” points from the timeline (are they there because of previous rendering?)

Using mplayer, I was annoyed that mplayer remembered my brightness, contrast, hue and saturation settings, which I had tweaked with the keyboard controls the last time I used mplayer. I tried to find where it keeps that info, but didn’t find it anywhere.

Then it turned out, that mplayer changes these settings on the X display’s own attributes. Going “xvinfo” on command prompt soon revealed that my tweakings were remembered by the display itself.

I suppose there’s a reason why mplayer changes the display’s parameters rather than keeping this business to itself. Anyhow, it looks like I’m the only one who gets annoyed by this. Most complaints on the web are about these settings getting lost when a new clip is played. And sincerely, I don’t get it. Why would anyone want all videos played with a color tint from the moment one accidentally shifted the hue? Isn’t the whole point of a command-line application that it runs exactly the same every time one executes it, regardless of what happened in the past?

Solution: xvattr. The Fedora guys must have thought its 23 kbyte of disk space are too much for the useless things it can do (why would anyone want to reset the settings with a command-line utility when you can do that with the Movie Player’s GUI?), so it has to be installed:

# yum install xvattr

and here’s my little script for clearing the relevant settings.

#!/bin/bash

{ xvattr -a XV_BRIGHTNESS -v 0 ;

xvattr -a XV_CONTRAST -v 0 ;

xvattr -a XV_SATURATION -v 0 ;

xvattr -a XV_HUE -v 0 ; } > /dev/null

I chose to call this script “xvclear”, but that doesn’t matter much.

If anyone knows how to tell mplayer to reset these automagically, please comment below.

There’s a bug in the kernel, which causes the Big TTY Mutex to be held for some 30 seconds every time certain TTY devices are closed. During these 30 seconds, no TTY operation is possible (including pseudo terminals), which practically means that most, if not all communication with the keyboard is stalled. Since GUI applications don’t expect simple TTY I/O to take time, they perform these operations in the main GUI loop, which causes the GUI to freeze as well. In particular, this is evident on my Fedora 12: I can type in my password only after waiting a few minutes for something to get in order.

The kernel bug is being discussed as these lines are written, and it would be reasonable to expect that a patch will be out within a week or two. But first we need to find out exactly what went wrong.

In the meanwhile, it may be possible to work around the problem by disabling the application which causes these long closes. In my case, it is modem-manager which probes serial ports for cellular modems or whatever, which I don’t have.

It might be worth checking /var/log/messages for entries like this:

Oct 27 18:16:35 ocho modem-manager: (ttyS2) opening serial device...

Oct 27 18:16:35 ocho modem-manager: (ttyS3) closing serial device...

Oct 27 18:17:05 ocho modem-manager: (ttyS3) opening serial device...

Oct 27 18:17:09 ocho modem-manager: (ttyS1) closing serial device...

Oct 27 18:17:39 ocho modem-manager: (ttyS2) closing serial device...

See the 30-second gap between closing a serial device and the next entry? That’s the time the kernel holds the close() call until timeout. It’s not clear whether the 30 seconds delay is correct behavior, but it is clear that a change in the locking scheme in 2.6.36 causes all other TTY operations in the system to wait.
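Spotting these gaps by eye gets tedious on a long log, so here is a rough sketch that prints, for each “closing serial device” entry, how many seconds passed until the next modem-manager entry. It assumes the syslog line layout shown above (time of day in the third field, device name in the sixth) and ignores wraparound at midnight. The function name tty_close_gaps is made up.

```shell
# tty_close_gaps: read syslog lines on stdin; for every modem-manager
# "closing serial device" entry, print the device and the number of seconds
# until the following modem-manager entry.
tty_close_gaps() {
    awk '
    /modem-manager/ {
        split($3, t, ":")                       # HH:MM:SS
        now = t[1] * 3600 + t[2] * 60 + t[3]
        if (closing != "") print closing, now - closed_at
        closing = ""
        if ($0 ~ /closing serial device/) {
            closing = $6                        # e.g. "(ttyS3)"
            closed_at = now
        }
    }'
}

printf '%s\n' \
    'Oct 27 18:16:35 ocho modem-manager: (ttyS3) closing serial device...' \
    'Oct 27 18:17:05 ocho modem-manager: (ttyS3) opening serial device...' \
    | tty_close_gaps    # prints (ttyS3) 30
```

On a real system this would be fed with something like grep modem-manager /var/log/messages | tty_close_gaps.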

The immediate solution could be to turn off the NetworkManager service (which may remove some desirable features from your system), or configure it not to probe serial devices. I haven’t gotten into this further, since I need this on for debugging purposes.

If your issue is with the modem-manager, please leave a comment below. I’d like to contact you to acquire some information about your hardware. One of the possibilities is that the problem occurs due to a hardware issue.

As my dentist always says: It will be over in no time.

The bad news is that Linux has been behaving pretty badly when the disk is under heavy load. The good news is that all you need is a kernel upgrade.

First, let’s run a simple experiment:

$ dd if=/dev/zero of=junkfile bs=1k count=20M

Don’t expect anything dramatic to happen right away. It takes half a minute or so. In the meanwhile, on another command prompt, let’s keep track of the memory info:

$ watch cat /proc/meminfo

What will soon happen, is that MemFree goes low and Cached goes high. This is normal behavior: As the dd command floods the disk queue with blocks to be written, unused memory is allocated as temporary buffers for the data just to be written or just written. Which is a sensible thing to do, given that the memory is unused anyhow, so why not remember what we just wrote, in case someone asks to read it?

So far so good. The trouble begins when MemFree gets really low, say around 50000 kB, and the heavy disk load continues. For reasons beyond me, this makes the system stall, and then recover when the disk load is stopped. For example, our watch program stops updating. Have a look at the time stamp at the upper right, and see that it freezes for several seconds.
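For following just the MemFree figure rather than the whole of /proc/meminfo, something like this could do. A small sketch; memfree_kb is a made-up name, and the optional file argument is there only so it can be tried on a saved copy.

```shell
# memfree_kb [FILE]: print the MemFree value in kB.
# FILE defaults to /proc/meminfo.
memfree_kb() {
    awk '/^MemFree:/ { print $2 }' "${1:-/proc/meminfo}"
}
```

Running it in a loop with a timestamp, e.g. while sleep 1 ; do echo "$(date +%T) $(memfree_kb)" ; done, makes the stalls visible as holes in the sequence of timestamps.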

If the memory goes low on your system, but the system doesn’t get stuck, never mind. If it does, you may need the patch for vmscan.c, or just upgrade to the current stable kernel (2.6.36 when these lines were written).

A simple way to check if your kernel has this fixed or not, is to look for the should_reclaim_stall() function in mm/vmscan.c in your kernel source. If the function is there, you’re fine. If not, and you’re running on some 2.6.32 or something like that, it’s very likely that you need the fix.
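The check for the function boils down to a grep. A sketch, assuming the kernel source tree is unpacked somewhere on disk; kernel_has_reclaim_fix is a made-up name, and the path in the usage line below is just an example.

```shell
# kernel_has_reclaim_fix SRCDIR: succeeds if mm/vmscan.c under SRCDIR
# mentions should_reclaim_stall, fails otherwise (or if the file is missing).
kernel_has_reclaim_fix() {
    grep -q 'should_reclaim_stall' "$1/mm/vmscan.c" 2>/dev/null
}
```

For example: kernel_has_reclaim_fix /usr/src/linux && echo "fix is in" || echo "probably needs the patch".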

This is just a few command lines I use every now and then. Just so I have them when I need them.

Convert a lot of Flash Video files to DIVX, audio rate 128 kb/sec mp3:

for i in *.flv ; do ffmpeg -i "$i" -ab 128k -b 1500k -vcodec mpeg4 -vtag DIVX "${i%.*}.avi" ; done

FFMPEG is good with the video output from my Canon 500D camera. So to convert to DIVX:

$ ffmpeg -i MVI_6739.MOV -acodec pcm_s16le -b 5000k -vcodec mpeg4 -vtag XVID was_4gb.avi

Convert a movie with super-wide screen to 720p, with some black stripes around, so that there’s place for the subtitles. Note that centering vertically would require (oh-ih)/2, not divided by four. Also set the bitrate to 2000k, so the quality remains good.

$ ffmpeg -i movie.mp4 -vf 'scale=1280:720:force_original_aspect_ratio=decrease,pad=1280:720:(ow-iw)/2:(oh-ih)/4,setsar=1' -b 2000k movie.720p.mp4
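To see what the two pad expressions amount to, here is the same arithmetic in plain shell. It is only an illustration with integer division: iw and ih are the frame size after scaling, ow and oh the padded output size, and the function name pad_offsets is made up. The 1280x536 frame is roughly what a 2.39:1 picture scales down to.

```shell
# pad_offsets IW IH [OW OH]: offsets of the picture inside the padded frame.
# y_quarter is what the command above uses, y_centered is the (oh-ih)/2 variant.
pad_offsets() {
    iw="$1"; ih="$2"; ow="${3:-1280}"; oh="${4:-720}"
    echo "x=$(( (ow - iw) / 2 )) y_quarter=$(( (oh - ih) / 4 )) y_centered=$(( (oh - ih) / 2 ))"
}

pad_offsets 1280 536    # prints x=0 y_quarter=46 y_centered=92
```

So with the divide-by-four version, the picture sits 46 pixels from the top, leaving a 138 pixel stripe at the bottom for the subtitles.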

Or better still, loop through all files and use MP3 encoding for audio:

for i in *.MOV ; do ffmpeg -i "$i" -ab 192k -acodec libmp3lame -b 5000k -vcodec mpeg4 -vtag DIVX "divx_${i%.*}.avi" ; done

Or to MJPEG, which is the only format I know to work 100% smooth with Cinelerra:

$ ffmpeg -i MVI_6739.MOV -acodec pcm_s16le -b 50000k -vcodec mjpeg -vtag MJPG mjpeg.avi

$ ffmpeg -i MVI_6739.MOV -acodec pcm_s16le -vcodec mjpeg -q:v 0 -vtag MJPG mjpeg.avi

(the -q:v 0 enforces high quality MJPEG output. Setting the bitrate depends on the frame size)

The same, only for all MOV files in the current directory

for i in *.MOV ; do ffmpeg -i "$i" -acodec pcm_s16le -b 50000k -vcodec mjpeg -vtag MJPG "mjpeg_${i%%.MOV}.avi" ; done

Don’t: Use ffmpeg version 2.8.10 instead, which also detects frame rotation. With videos from my LG G4 phone, there’s a problem with detecting the frame rate (it appears as 90000 fps for some reason). So I guess it’s 30 fps, and this does the trick (at least for a short clip):

$ mencoder ~/Desktop/20180118_115559.mp4 -oac pcm -ovc lavc -lavcopts vcodec=mjpeg -ffourcc MJPG -fps 30 -o mencoder.avi

And make thumbnails of all MOVs in the current directory (so that I know where I can find what):

$ for i in *.MOV ; do ffmpeg -i "$i" -ss 10 -r 1/10 -s 320x180 "../snapshots/${i%%.MOV}_%04d.jpg" ; done

This is more or less one frame every 10 seconds, and taken down to 25% of the size.

The other way around: A 10 fps AVI video clip from images (Blender output):

$ ffmpeg -r 10 -f image2 -i %04d.png -b 1000k -vcodec mpeg4 -vtag XVID clip.avi

Using mencoder to create a slow motion version of a video. Note that in this example, the input frame rate was 30 fps (and I wanted to keep it, hence the -ofps 30) and input audio rate was 44100, which I also wanted to keep. Without the -ofps and -srate arguments, I would get 10 fps and some weird sound rate, which could possibly mess up video players and video editing software.

See more below on playing with video / audio rates.

I only tested this with an MJPG video.

$ mencoder -speed 1/3 -ofps 30 -srate 44100 -vf harddup MVI_7596.avi -ovc copy -oac pcm -o slowmo_MVI_7596.avi

Or convert a 30 fps video to 25 fps (making the voice sound unnaturally dark, but other sounds are OK):

$ mencoder -speed 25/30 -ofps 25 -srate 44100 -vf harddup MVI_7613.avi -ovc copy -oac pcm -o weird_MVI_7613.avi

Fix brightness, contrast and saturation on a MJPEG video, resulting in an MJPEG video

$ mencoder -vf harddup,eq2=1.0:1.2:0.2:1.2 mjpeg_MVI_7608.avi -oac copy -ovc lavc -lavcopts vcodec=mjpeg -ffourcc MJPG -o ../fixed/mjpeg_MVI_7608.avi

Playing a video with an external mono soundtrack, listening in stereo (very good when working only on audio track, so there’s no need to render the video all the time):

$ mplayer -audiofile sound.wav -af channels=2:2:1:0:1:1 rendered_video.avi

Dumping keyframes from an MPEG video stream (don’t ask me why this is necessary)

mplayer clip.avi -benchmark -nosound -noaspect -noframedrop -ao null -vo png:z=6 -vf framestep=I

Piping a video from ffmpeg to mplayer (instead of using ffplay, but still using ffmpeg’s capabilities)

ffmpeg -i clip.mkv -f matroska - | mplayer -

Creating an HD MP4 video. The result isn’t very impressive, despite the 10Mbit/s rate. Also, mp3 is used rather than AAC, because libfaac isn’t supported on my computer, and choosing -acodec aac led to a warning about using an experimental codec. I suppose this should be done in a dual pass, but since I needed MP4 merely as a backup, so be it.

ffmpeg -i clip_mjpeg.avi -threads 16 -qmin 10 -qmax 51 -i_qfactor 0.71 -qcomp 0.6 -qdiff 4 -trellis 0 -vcodec libx264 -acodec libmp3lame -aspect 16:9 -b 10M -ab 128k -y clip.mp4

Getting the length of a video (the -pretty flag makes it display it in human-readable format, as opposed to number of seconds):

ffprobe -v error -show_entries format=duration -of default=noprint_wrappers=1 -pretty clip.mp4

Note that ffprobe supports a wide range of output formats, including JSON, CSV etc. Surprisingly enough, this can be done with exiftool too:

exiftool -T -Duration clip.mp4

But there’s a problem with these two commands: They output the duration of the file according to the metadata. If the file has been truncated by a partial download or something, this is noticed only when the file is played. So this gives the actual length:

ffmpeg -i clip.mp4 -c copy -f null - 2>&1 | perl -e 'local $/; $a=<>; @x=($a =~ /time=([^ ]+)/g); print "$x[-1]\n";'

The trick is to extract the last timestamp that is given during an encoding of the data into null. Using the copy encoder (-c copy) is somewhat inaccurate but fast (the copy encoder counts in non-video frames at the end, if such exist). The Perl one-liner script just looks for the last occurrence of a time stamp. This is a bit of a clumsy solution, but I haven’t seen anything more elegant around.
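For those who prefer to stay away from Perl, the same extraction can be sketched with grep, tail and cut. This is an alternative to the one-liner above, not a replacement by the tool’s authors; it assumes a grep that supports -o (GNU and BSD grep do), and last_timestamp is a made-up name.

```shell
# last_timestamp: read ffmpeg's progress output on stdin and print the value
# of the last "time=" field found in it.
last_timestamp() {
    grep -o 'time=[^ ]*' | tail -n 1 | cut -d= -f2
}

# Canned progress output, with \r between updates as ffmpeg prints them
printf 'frame=   10 time=00:00:01.00 bitrate=N/A\rframe=   25 time=00:00:02.50 bitrate=N/A\n' \
    | last_timestamp    # prints 00:00:02.50
```

The real thing would be ffmpeg -i clip.mp4 -c copy -f null - 2>&1 | last_timestamp.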

Selecting specific audio/video streams

This is good for videos with alternative audio in particular. Use -map with the stream numbers that appear when the command is invoked (or with ffprobe). Both the video and audio must be explicitly given. For example:

ffmpeg -i video.mkv -map 0:2 -map 0:0 -f matroska - | mplayer -

Note that once -map is used, streams that are not explicitly mentioned are ignored. So this is the command I used with ffmpeg v6.0 to re-encode an AV1 video stream into MPEG4, select the Japanese audio and keep all subtitles and attachments (fonts in this case):

ffmpeg -i in.mkv -map 0:a:m:language:jpn -map 0:s -map 0:v -map 0:t -b 5000k -vcodec mpeg4 out.mkv

Note that the audio stream was selected according to its declared language, and not its number. Both ways are possible, the former is usually more robust.

All data was just copied to the output file (except for the video stream, of course). Playing this result with vlc, the subtitles appeared nicely with fonts that were given as attachments inside the mkv file itself. I didn’t manage to burn the subtitles on the video with something like

-filter_complex "[0:v][0:s:m:language:eng]overlay[v]" -map "[v]"

possibly because these subtitles rely on the attached fonts (and maybe because these ass/ssa subtitles are on the wild side with animations and stuff).

Concatenating videos

Stitching several video clips can be handy. First, prepare a list of files to handle, say as list.txt with the following format

file 'file1.avi'

file 'file2.avi'

(possibly with absolute paths). If the files all have the same format, re-encoding may not be necessary, so just go

ffmpeg -f concat -i list.txt -c copy concat.avi
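Writing list.txt by hand gets old quickly, so here is a sketch for generating it from a list of file names. It assumes the names contain no single quotes (those would need escaping), and make_concat_list is a made-up name.

```shell
# make_concat_list FILE...: print one "file '...'" line per argument,
# in the format expected in list.txt.
make_concat_list() {
    for f in "$@"; do
        printf "file '%s'\n" "$f"
    done
}

make_concat_list file1.avi file2.avi
```

Typical use would be make_concat_list *.avi > list.txt, which lists the files in the shell’s glob order.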

Or, for re-encoding into AVI XVID with low-end mp3 sound, go:

ffmpeg -f concat -i list.txt -ab 128k -acodec libmp3lame -b 5000k -vcodec mpeg4 -vtag XVID recoded.avi

I had errors regarding non monotonically increasing dts to muxer. As a result, the video played only halfway. Fixed with this:

ffmpeg -i recoded.avi -c copy recoded-fixed.avi

It might be better or worse when outputting to an .mp4 file instead of .avi. This is a messy business, so just experiment.

It’s possible to put URLs instead of file names in list.txt, so it goes something like (also see notes on m3u8 below):

file 'https://the-server.net/seg-6773.ts'

file 'https://the-server.net/seg-6774.ts'

file 'https://the-server.net/seg-6775.ts'

[ ... ]

This is consumed by a command like

$ ffmpeg -safe 0 -protocol_whitelist tls,file,http,https,tcp -f concat -re -i list.txt -c copy try.mp4

The -re flag keeps the download rate to match the frame rate. Required if the URLs are predicted by some script, so they’re not present in the server when starting.

The -protocol_whitelist flag is explained on this page, which also lists the different protocols supported. Without it, a “Protocol ‘https’ not on whitelist ‘file,crypto’!” error prevents the processing.

Typically there is no problem delaying the fetching with ffmpeg even hours after they have been announced in playlists. The video is just files on a plain HTTP server.
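When the segment names follow a simple running number, as in the example above, the URL list can be produced by a short loop. A sketch only: the seg-NNNN.ts naming is taken from the example and will differ between servers, and segment_list is a made-up name.

```shell
# segment_list BASE FIRST LAST: print "file" lines for segments
# BASE/seg-FIRST.ts through BASE/seg-LAST.ts.
segment_list() {
    base="$1"; n="$2"; last="$3"
    while [ "$n" -le "$last" ]; do
        printf "file '%s/seg-%s.ts'\n" "$base" "$n"
        n=$((n + 1))
    done
}

segment_list https://the-server.net 6773 6775
```

Redirect the output into list.txt and feed it to the ffmpeg command above.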

Audio encoding…

Convert from mpc to mp3, output bitrate 192k:

ffmpeg -i infile.mpc -ab 192k outfile.mp3

Extract audio track from video, to 48 kHz sample rate

ffmpeg -i video.mp4 -ar 48000 sound.wav

Changing audio / video speed

Based upon this page: Slowing down audio by 15% without changing the pitch (a.k.a. “change tempo”):

ffmpeg -i toofast.wav -filter:a "atempo=0.85" slower.wav

Now suppose I’ve recorded a video with this sound. To bring it back to the original speed, go:

ffmpeg -i rawshot.mp4 -filter_complex "[0:v]setpts=0.85*PTS[v];[0:a]atempo=1.17647[a]" -map "[v]" -map "[a]" speedup.mp4

Note that this speeds up the video by 1/0.85 ≈ 1.17647.
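The pair of numbers in the filter string follows from a single slowdown factor: setpts takes the factor as is, and atempo takes its reciprocal. A sketch that builds the string, with the made-up name speedup_filter:

```shell
# speedup_filter FACTOR: print a -filter_complex string that undoes a
# slowdown by FACTOR (e.g. 0.85) on both the video and the audio.
speedup_filter() {
    awk -v s="$1" 'BEGIN {
        printf "[0:v]setpts=%s*PTS[v];[0:a]atempo=%.5f[a]\n", s, 1 / s
    }'
}

speedup_filter 0.85    # prints [0:v]setpts=0.85*PTS[v];[0:a]atempo=1.17647[a]
```

The output goes straight into the command, as in ffmpeg -i rawshot.mp4 -filter_complex "$(speedup_filter 0.85)" -map "[v]" -map "[a]" speedup.mp4.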

The atempo filter is limited between 0.5 and 2. For a wider range, insert the filter multiple times, e.g. with “atempo=0.5,atempo=0.5”.
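Splitting an out-of-range factor into a chain of atempo filters can be automated. A minimal sketch, assuming the 0.5 to 2 limit mentioned above; atempo_chain is a made-up name.

```shell
# atempo_chain FACTOR: print a comma-separated chain of atempo filters whose
# product is FACTOR, with each element kept within [0.5, 2].
atempo_chain() {
    awk -v f="$1" 'BEGIN {
        out = ""
        while (f < 0.5) { out = out "atempo=0.5,"; f /= 0.5 }
        while (f > 2.0) { out = out "atempo=2.0,"; f /= 2.0 }
        printf "%satempo=%g\n", out, f
    }'
}

atempo_chain 0.25    # prints atempo=0.5,atempo=0.5
atempo_chain 0.85    # prints atempo=0.85
```

The result can be used as ffmpeg -i in.wav -filter:a "$(atempo_chain 0.25)" out.wav.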

Choosing format with yt-dlp

Downloading from Youtube and similar sites is done with yt-dlp. In order to select which video or audio channel to grab, when there is a variety to choose from, go

$ yt-dlp -f- 'https://thesite.com/video'

and then replace the dash after “f” with the ID that is listed. In order to download both video and audio, put a plus character (“+”) between the two IDs.

To choose two specific format IDs and download English subtitles along with the video and include them in the .mp4 file:

$ yt-dlp -f136+140 --embed-subs --sub-langs en 'https://thesite.com/video'

Wildcards are allowed with --sub-langs. Use --list-subs for a list of subtitle sets available.

If the video requires a cookie context, it’s possible to use Firefox’ Export Cookies add-on (git repo here) and refer to the cookie file with something like this:

$ yt-dlp --cookies /path/to/cookies.txt [ ... ]

There’s probably a similar add-on for Chrome as well.

Grabbing fragmented Flash (f4f, f4m)

Note: yt-dlp is by far the better option for these things.

First, grab the utility:

$ git clone https://github.com/K-S-V/Scripts.git

I used commit ID 3cc8ca9de346089b673b803cd6233e8c0bca3871 which was the most recent one, and works well on my old Fedora 12 after a “yum install php-bcmath”.

The trick is to obtain the manifest file, which is fetched by the browser before the f4f fragments

php AdobeHDS.php --manifest 'http://some.long.url/manifest.f4m'

That downloads all fragments into the current directory, and concatenates it all into an .flv file. Possibly convert it into DIVX to a smaller image, so that my silly TV set AVI player manages it (small image and low bitrate, or it fails).

$ ffmpeg -i thelongname.flv -ab 128k -b 1500k -vcodec mpeg4 -vtag DIVX -s 640x480 -aspect 4:3 view.avi

Grabbing m3u8

Once again, check out yt-dlp (formerly youtube-dl) first.

Note to self: The getts script in the misc/utils repo feeds ffmpeg with a spoon with TS segments — useful when it’s required to be patient with servers that refuse requests every now and then.

Requires a fairly recent ffmpeg version. Something like

$ ffmpeg -version

ffmpeg version 1.2.6-7:1.2.6-1~trusty1

built on Apr 26 2014 18:52:58 with gcc 4.8 (Ubuntu 4.8.2-19ubuntu1)

[...]

Give ffmpeg the URL to the m3u8 manifest (obtained by sniffing, for example), and let ffmpeg do the rest (this converts directly to AVI as above)

$ ffmpeg -i 'http://the.host.com/the_long_path.m3u8' -strict -2 -ab 128k -b 1500k -vcodec mpeg4 -vtag DIVX myvid.avi

The “-strict -2” flag is a response to ffmpeg complaining that the AAC decoder is experimental, so I have to insist.

If cookies and other custom headers should be used on the HTTP request, they can be passed with the -headers flag. Note however that this flag must come before the -i argument (better put the -headers flag first). Also note that all headers must be given in a single argument with each header line terminated with \r\n. This is easily done in bash:

ffmpeg -headers 'Cookie: this=that'$'\r\n''Referer: http://whatever.com'$'\r\n' -i ...
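With more than a couple of headers, the quoting above becomes hard to read. Here is a sketch of a helper that joins header lines with \r\n. It leaves the result in a variable named HEADERS (a made-up name, like build_headers itself) rather than printing it, because command substitution would strip the final newline.

```shell
# build_headers LINE...: join the given header lines, each terminated
# with \r\n, and leave the result in the HEADERS variable.
build_headers() {
    crlf="$(printf '\r\n_')"; crlf="${crlf%_}"   # the "_" protects the trailing \n
    HEADERS=""
    for h in "$@"; do
        HEADERS="${HEADERS}${h}${crlf}"
    done
}

build_headers 'Cookie: this=that' 'Referer: http://whatever.com'
```

After this, the command becomes ffmpeg -headers "$HEADERS" -i ... with the rest of the arguments as before.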

Also note that a script may generate a list of URLs (possibly obtained from m3u8 playlists, and also possibly “guess” them in advance, following the typically very simple naming scheme) and then use the concat feature — see above.

Using VLC

I had a really tricky video with crazy ASS subtitles, which only VLC managed to show correctly (version 3.0.7.1, by the way). But even VLC stopped showing the subtitles when I skipped forward over a part with intense subtitle trickery. So I used VLC to create an mp4 video with “hardcoded” subtitles, and then played it with whatever.

To make a long story short, the command I used was

$ vlc orig.mkv --sub-file subs.ass --sout='#transcode{vcodec=h264,width=1280,height=720,acodec=mp4a,ab=192,soverlay}:std{access=file,mux=mp4,dst="out.mp4"}' vlc://quit

This requires some explanations. But first, VLC’s own help:

$ vlc --longhelp --advanced --help-verbose | less

As for the parameters in the command above: What really matters is vcodec, acodec and soverlay, which appear in the help file as --sout-transcode-vcodec, --sout-transcode-acodec and --sout-transcode-soverlay. But they aren’t really explained there. Their meaning is pretty obvious, except for soverlay, which is required for burning the subtitles into the video frame. But what are the options for the codecs? Go figure. I have no idea how to query VLC for these. It appears that no such option exists.

The part after “std” defines the output file. The “mux” part selects the container, and “dst” is the file name. Same problem with getting a list of supported muxes. I would look for hints in VLC’s help output, for example, look for demuxes to get the codes for muxes.

I added width=1280 and height=720 to scale the video, and ab=192 to set the audio data rate, but these are specific to this session.

Last: The vlc://quit part. This tells VLC to quit after finishing the encoding, which it won’t do otherwise. It’s also possible to add “-I dummy” as the first argument in order to prevent VLC’s GUI from opening (and hence stealing focus on the desktop, which is annoying when running the command in a loop). But then there’s no information about how the encoding progresses.

To select e.g. Japanese language for the audio track, add “--sout-transcode-alang jpn”.

In short, VLC is really a video player with encoding capabilities. It was never intended for use from the command line it seems, but in times of need it saves the day.

To be continued…

This is how to make an encrypted DVD, which is automatically mounted by Fedora 12 (and others, I suppose) when the DVD is inserted (prompting for the passphrase, of course). The truth is that I don’t use this automatic feature, because only seeing the suggestion to save my passphrase as an option makes me prefer going good old command line.

A word of caution: A lot of disk-related operations are done here as root. A slightest mistake, and you may very well trash your entire hard disk. If you don’t understand what the operations below mean, don’t do them. If you feel tired, do it later.

Looks like I’m going to make a script of this sooner or later.

Why lazy man? Mainly for two reasons: The real way to do this is to generate an ISO image, and then encrypt it, so it doesn’t get larger than necessary. The second reason is that having an almost-full sized ISO image anyhow, regardless of how much data I put in it, it’s pretty lazy not to fill it with random data before applying encrypted information. By using /dev/zero instead of /dev/urandom (or /dev/frandom if you want it faster) it’s possible to know how much of the DVD contains data. Also, the fact that it’s all random makes it impossible for an adversary to know for sure what region contains encrypted data, and where it’s just random. But frankly, all these extra safety measures are ridiculous given the fact that a human knows the passphrase, which is by far the weakest link.

Now to some action. First, generate an empty image file. I chose to make it slightly smaller than the maximum allowed. This should work with count=4480 as well, but I don’t want to push it (so I get 4.6 marketing-GB instead of the well-known 4.7).

$ dd if=/dev/zero of=disk.img bs=1M count=4400
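The arithmetic behind the 4.6 versus 4.7 figures: dd counts in blocks of 1 MiB (1048576 bytes), while the number printed on the DVD box is in units of 10^9 bytes. A sketch of the conversion, with the made-up name mib_to_gb:

```shell
# mib_to_gb COUNT: size of COUNT blocks of 1 MiB, in "marketing" GB
# (10^9 bytes), with two decimals.
mib_to_gb() {
    awk -v m="$1" 'BEGIN { printf "%.2f\n", m * 1048576 / 1000000000 }'
}

mib_to_gb 4400    # prints 4.61
mib_to_gb 4480    # prints 4.70
```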

As I said, you may prefer /dev/frandom over /dev/zero. Now it’s time to become root, and go:

# losetup /dev/loop1 disk.img && cryptsetup luksFormat /dev/loop1 && cryptsetup luksOpen /dev/loop1 mybackupdisk && genisoimage -R -J -joliet-long -graft-points -V backup -o /dev/mapper/mybackupdisk directory-to-backup

You will be prompted to agree to erase /dev/loop1, and then for the passphrase three times: Twice for creating the encrypted device, and once for opening it.

I do this in a single line for one important reason: If losetup fails, the show must stop. One of the possible reasons is that /dev/loop1 (which could be any /dev/loopN, as long as it’s the same one along the line) is busy doing something completely different. If we ignore a failure to open a certain loop device, there is a good chance to erase something we didn’t intend to.

When that’s done, close the encrypted device and free /dev/loop1.

# cryptsetup luksClose /dev/mapper/mybackupdisk && losetup -d /dev/loop1

Now burn the image to a DVD. When inserting it, a GUI popup may appear and ask for the password. You can use that, or click Cancel in order to do this manually (and more safely, if you ask me). My DVD is at /dev/sr0, so I go:

# cryptsetup luksOpen /dev/sr0 mydvd

Enter passphrase for /dev/sr0:

# mount /dev/mapper/mydvd backmnt/

mount: block device /dev/mapper/mydvd is write-protected, mounting read-only

And finally, in order to eject the disk one can use the GUI option (if available) or do it manually:

# umount backmnt/

# cryptsetup luksClose /dev/mapper/mydvd

If you use the GUI eject, check /dev/mapper/ to verify that the encryption is indeed closed. On my system it was done properly, but I prefer to see that the door is locked.
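Checking that the door is locked can be a one-liner. A sketch, assuming that an open mapping always shows up as an entry under /dev/mapper/; luks_mapping_closed is a made-up name, and the optional second argument exists only for trying it on another directory.

```shell
# luks_mapping_closed NAME [DIR]: succeeds if there is no entry called NAME
# in DIR (default /dev/mapper), i.e. the mapping appears to be closed.
luks_mapping_closed() {
    [ ! -e "${2:-/dev/mapper}/$1" ]
}
```

For example: luks_mapping_closed mydvd && echo "closed" || echo "still open!"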

Many posts in this blog are just things I wanted written down in case I needed them again. This post is about very small things that don’t deserve a post of their own. To be constantly updated.

Upload to web with Picasa 2.7

Didn’t work at first. Couldn’t sign in. Try later. Right. The fix, thanks to this tip:

# ln -s /usr/lib/libssl.so.10 /opt/picasa/lib/libssl.so

# ln -s /usr/lib/libcrypto.so.10 /opt/picasa/lib/libcrypto.so

and it works like a charm. With Picasa 3.0, it’s the wininet.dll.so that needs to be copied from somewhere. Take the 32 bit version (as opposed to 64 bit).

I really banged my head on this one: I was sure I had set up all registers correctly, and still I got complete garbage at the output. Or, as some investigation showed, everything worked OK, only the PLL didn’t seem to do anything: The VCO was stuck at its lowest possible frequency (which depended on whether I picked the one for higher or lower frequencies).

At first I thought that there was something wrong with my reference clock. But it was OK.

Only after a while did I realize that the PLL needs to be recalibrated after the registers are set. The CDCE62002 wasn’t intended to be programmed after powerup, like the CDCE906. The by-design use is to program the EEPROM once, and then power it up. Doing it this way, the correct values go into the RAM from EEPROM, after which calibration takes place with the correct parameters.

Solution: Power down the device by clearing bit 7 in register #2, write the desired values in registers #0 and #1, and then power up again by setting bit 7 (and bit 8, regardless) in register #2. This way the device wakes up as if the registers were loaded from EEPROM, and runs its calibration routine correctly.
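To make the two writes to register #2 concrete, here is a small sketch that composes the 32-bit SPI frames. It assumes the frame carries the 4-bit register address in its lowest bits and the 28-bit value above it, which is how the Verilog module further down concatenates them. Check the datasheet before relying on this layout. The helper name spi_word is made up for the illustration.

```shell
#!/bin/sh
# spi_word (hypothetical helper): build one 32-bit SPI frame.
#   $1 = 28-bit register value, $2 = 4-bit register address
# Assumed layout: address in bits 3:0, value in bits 31:4.
spi_word() {
    printf '0x%08x\n' $(( (($1 & 0xfffffff) << 4) | ($2 & 0xf) ))
}

spi_word 0x0000100 2    # register #2, bit 7 cleared, bit 8 set: power down
# registers #0 and #1 would be written here with the desired settings
spi_word 0x0000180 2    # register #2, bits 7 and 8 set: power up again
```

This prints 0x00001002 and 0x00001802. The two values written are the same word2 and word3 constants used by the module.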

What I still don’t understand is why I have to do this twice. The VCO seems to go to its highest frequency now, unless I repeat the ritual mentioned above 100 ms after the first time. If I do this within microseconds it’s no good.

I’ve written a Verilog module to handle this. Basically, send_data should be asserted during one clock cycle, and the parameter inputs should be held steady for some 256 SPI clock cycles (about 512 cycles of clk, since the SPI clock runs at half the module’s clock) afterwards. In my own use of this module, these inputs are tied to constant values.

This is not an example of best Verilog coding techniques, but since I didn’t care about either slice count or timing here, I went for the quickest solution, even if it’s a bit dirty. And it works.

Note that the module’s clock frequency should not exceed 40 MHz, since the maximal SPI clock allowed by spec is 20 MHz. And again, for this to really work, send_data has to be asserted twice, with some 100 ms or so between assertions. I’ll check with TI about this.
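The numbers involved are easy to work out. The sketch below assumes what the module's code shows: spi_clk toggles on every clk cycle (so the SPI clock is clk / 2) and the 8-bit out_pointer advances once per SPI clock cycle. The function names are made up, and the counter width is only a suggestion for whoever builds the logic that asserts send_data twice.

```shell
#!/bin/sh
# Timing figures for driving the module, derived from its clock frequency.

# SPI clock frequency for a given clk frequency (Hz)
spi_clk_hz() { echo $(( $1 / 2 )); }

# Number of clk cycles in a gap: $1 = clk frequency (Hz), $2 = gap (ms)
gap_cycles() { echo $(( $1 / 1000 * $2 )); }

# Bits needed for a counter that can hold the value $1
counter_bits() {
    n=$1; bits=0
    while [ "$n" -gt 0 ]; do n=$(( n / 2 )); bits=$(( bits + 1 )); done
    echo "$bits"
}

clk_hz=40000000
cycles=$(gap_cycles "$clk_hz" 100)
echo "SPI clock:     $(spi_clk_hz "$clk_hz") Hz"
echo "one burst:     $(( 256 * 2 )) clk cycles at most"
echo "100 ms gap:    $cycles clk cycles"
echo "counter width: $(counter_bits "$cycles") bits"
```

At 40 MHz this gives a 20 MHz SPI clock, 4000000 clk cycles for the 100 ms gap, and a 22-bit counter to time it.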

module cdce62002
  (
   input clk, // Maximum 40 MHz
   input reset, // Active high
   output busy,
   input send_data,
   output reg spi_clk, spi_le, spi_mosi,
   input spi_miso, // Never used

   // The names below match those used in pages 22-24 of the datasheet
   input INBUFSELX,
   input INBUFSELY,
   input REFSEL,
   input AUXSEL,
   input ACDCSEL,
   input TERMSEL,
   input [3:0] REFDIVIDE,
   input [1:0] LOCKW,
   input [3:0] OUT0DIVRSEL,
   input [3:0] OUT1DIVRSEL,
   input HIPERFORMANCE,
   input OUTBUFSEL0X,
   input OUTBUFSEL0Y,
   input OUTBUFSEL1X,
   input OUTBUFSEL1Y,
   input SELVCO,
   input [7:0] SELINDIV,
   input [1:0] SELPRESC,
   input [7:0] SELFBDIV,
   input [2:0] SELBPDIV,
   input [3:0] LFRCSEL
   );

   reg [7:0] out_pointer;
   reg active;
   wire [255:0] data_out;
   wire [255:0] le_out;
   wire [27:0] word0, word1, word2, word3;
   wire [27:0] ones = 28'hfff_ffff;

   // synthesis attribute IOB of spi_clk is true;
   // synthesis attribute IOB of spi_le is true;
   // synthesis attribute IOB of spi_mosi is true;
   // synthesis attribute init of out_pointer is 0 ;
   // synthesis attribute init of active is 0 ;
   // synthesis attribute init of spi_le is 1;

   assign busy = (out_pointer != 0);

   // "active" is necessary because we don't rely on getting a proper
   // reset signal, and out_pointer is subject to munching by the
   // synthesizer, which may result in nasty things during wakeup

   always @(posedge clk or posedge reset)
     if (reset)
       begin
          out_pointer <= 0;
          active <= 0;
       end
     else if (send_data)
       begin
          out_pointer <= 1;
          active <= 1;
       end
     else if ((spi_clk) && busy)
       out_pointer <= out_pointer + 1;

   always @(posedge clk)
     begin
        if (spi_clk)
          begin
             spi_mosi <= data_out[out_pointer];
             spi_le <= !(le_out[out_pointer] && active);
          end
        spi_clk <= !spi_clk;
     end

   assign data_out = { word3, 4'd2, 2'd0, // To register #2 again.
                       64'd0, // Dwell a bit in power down
                       word1, 4'd1, 2'd0,
                       word0, 4'd0, 2'd0,
                       word2, 4'd2, 4'd0
                       };

   assign le_out = { ones[27:0], ones[3:0], 2'd0,
                     64'd0, // Dwell a bit in power down
                     ones[27:0], ones[3:0], 2'd0,
                     ones[27:0], ones[3:0], 2'd0,
                     ones[27:0], ones[3:0], 4'd0 };

   assign word0[0] = INBUFSELX;
   assign word0[1] = INBUFSELY;
   assign word0[2] = REFSEL;
   assign word0[3] = AUXSEL;
   assign word0[4] = ACDCSEL;
   assign word0[5] = TERMSEL;
   assign word0[9:6] = REFDIVIDE;
   assign word0[10] = 0; // TI trashed external feedback
   assign word0[12:11] = 0; // TI's test bits
   assign word0[14:13] = LOCKW;
   assign word0[18:15] = OUT0DIVRSEL;
   assign word0[22:19] = OUT1DIVRSEL;
   assign word0[23] = HIPERFORMANCE;
   assign word0[24] = OUTBUFSEL0X;
   assign word0[25] = OUTBUFSEL0Y;
   assign word0[26] = OUTBUFSEL1X;
   assign word0[27] = OUTBUFSEL1Y;

   assign word1[0] = SELVCO;
   assign word1[8:1] = SELINDIV;
   assign word1[10:9] = SELPRESC;
   assign word1[18:11] = SELFBDIV;
   assign word1[21:19] = SELBPDIV;
   assign word1[25:22] = LFRCSEL;
   assign word1[27:26] = 2'b10; // Read only bits

   // word2 and word3 are both sent to register #2 in order to
   // restart the PLL calibration after registers are set.

   assign word2 = 28'h000_0100; // Power down
   assign word3 = 28'h000_0180; // Exit powerdown

endmodule

So I compiled the kernel I downloaded from kernel.org like I’ve always done, but the system wouldn’t boot, and it had good reasons not to: My root filesystem is both encrypted and RAID-5’ed, which requires, at least, a password to be entered. That job has to be done by some script which runs before my root filesystem is mounted. So obviously, a clever environment is necessary at that stage.

Before trying to fiddle with the existing image, I figured there must be a script creating that image. And indeed there is. It’s called dracut and seems to be what Fedora uses for its distribution kernels.

So, go as root (or an internal ldconfig call will fail, not that I know what effect that has)

# dracut initramfs-2.6.35.4-ELI1.img 2.6.35.4-ELI1

This created a 92 MB compressed image file. Viewing the image (uncompressed and unpacked into files), it turns out that 308 MB out of the 324 MB this image occupies are kernel modules. Nice, but way too much. It also makes the stage between leaving GRUB and being prompted for the password take something like two minutes (!), during which a blank screen is shown. But eventually the system booted up and ran normally.
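Figures like these can be obtained with du on the unpacked image. The following is a sketch, assuming the image has already been unpacked into a directory (with cpio, as shown in the Dissection part of this post) and that the modules sit under lib/modules in it. The function name modules_share is made up.

```shell
#!/bin/sh
# modules_share (hypothetical helper): report how much of an unpacked
# initramfs tree ($1) is taken up by kernel modules under lib/modules.
modules_share() {
    root=$1
    if [ ! -d "$root/lib/modules" ]; then
        echo "no lib/modules directory under $root" >&2
        return 1
    fi
    total_kb=$(du -sk "$root" | cut -f1)
    mod_kb=$(du -sk "$root/lib/modules" | cut -f1)
    if [ "$total_kb" -eq 0 ]; then
        echo "modules: 0 MB of 0 MB"
        return 0
    fi
    echo "modules: $(( mod_kb / 1024 )) MB of $(( total_kb / 1024 )) MB ($(( 100 * mod_kb / total_kb ))%)"
}
```

With the numbers above, 308 MB out of 324 MB means about 95% of the image is kernel modules.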

So this initramfs is definitely sufficient, but it includes too much junk. Solution: use the -H (host-only) flag, so that only the modules necessary for this particular computer are included. This may be dangerous in case of a hardware change, but it reduced the image size to 16 MB, which is slightly larger than the distribution initramfs (12 MB). I couldn’t care less about that, in particular since the RAM is freed when the real root file system is mounted.

I ran dracut again while the target kernel was running. I don’t know if this has any significance.

# dracut -H initramfs-2.6.35.4-ELI1.img 2.6.35.4-ELI1

Dissection

And as a final note, I’d just mention, that if you want to know what’s inside that image, there’s always

$ lsinitrd initramfs.img

or open the image: Get yourself to a directory which you don’t care about filling with junk, and go:

$ zcat initramfs-2.6.35.4-ELI1.img | cpio -i -d -H newc --no-absolute-filenames

To build an image, run this from the root of the directory to be packed:

$ find . -print0 | cpio --null -ov --format=newc | gzip -9 > ../initramfs.img

(note the verbose flag, so all files are printed out)

This may not be necessary, as recent versions of dracut support injecting custom files and other tweaks.
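The unpack and repack commands above can be wrapped into two small functions, which makes it easy to try a round trip on a scratch directory before touching a real image. This is a sketch assuming GNU cpio and a gzip-compressed image. The function names are made up, and the output file should be kept outside the directory being packed, or find will pick it up as well.

```shell
#!/bin/sh
# unpack_initramfs (hypothetical helper): $1 = image file, $2 = target dir
unpack_initramfs() {
    mkdir -p "$2" &&
    gzip -dc "$1" |
        ( cd "$2" && cpio -i -d -H newc --no-absolute-filenames --quiet )
}

# pack_initramfs (hypothetical helper): $1 = directory to pack, $2 = image file
pack_initramfs() {
    ( cd "$1" && find . -print0 |
          cpio --null -o --format=newc --quiet | gzip -9 ) > "$2"
}
```

For example, unpack_initramfs initramfs-2.6.35.4-ELI1.img /tmp/initramfs-tree unpacks the image, and pack_initramfs /tmp/initramfs-tree /tmp/new-initramfs.img packs it again. The verbose flag is left out here, so nothing is printed.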