What’s this?

This is a note for myself, in case I need a quick replacement for my ADSL connection on the desktop computer (Fedora 12, an oldie). It may seem paradoxical that I’ll read this in order to access the internet (…), but this is probably where I would look first. With my cellphone, which is also the temporary access point, that is.

In short, there’s a lot of stuff here particular to my own computer.

I’m using the small TP-LINK dongle (TL-WN725N), which is usually doing nothing in particular.

Notes to self

- This post is also on your local blog (copy-paste commands…)

- wlan1 is the Access Point dongle (maybe use it instead…?)

- Put the phone in the corner next to the door (that’s where I get a 4G connection) once the connection is established…

- … but not before that, so you won’t run back and forth

Setting up the interface

# service ADSL off

# /etc/sysconfig/network-scripts/firewall-wlan0

# ifconfig wlan0 up

# wpa_supplicant -B -Dwext -iwlan0 -c/etc/wpa_supplicant/wpa_supplicant.conf

ioctl[SIOCSIWAP]: Operation not permitted

# iwlist wlan0 scan

wlan0 Scan completed :

Cell 01 - Address: 00:34:DA:3D:F8:F5

ESSID:"MYPHONE"

Protocol:IEEE 802.11bgn

Mode:Master

Frequency:2.462 GHz (Channel 11)

Encryption key:on

Bit Rates:108 Mb/s

Extra:rsn_ie =30140100000fac040100000fac040100000fac020c00

IE: IEEE 802.11i/WPA2 Version 1

Group Cipher : CCMP

Pairwise Ciphers (1) : CCMP

Authentication Suites (1) : PSK

IE: Unknown: DD5C0050F204104A0001101044000102103B00010310470010A7FED45DE0455F5DB64A55553EB96669102100012010230001201024000120104200012010540008000000000000000010110001201008000221481049000600372A000120

Quality:0 Signal level:0 Noise level:0

# iwconfig wlan0 essid MYPHONE

# dhclient wlan0 &

Note that wpa_supplicant complained, and it was still fine. Use the -d or -dd flags for some debugging info.

It seems like the iwconfig command is redundant, as wpa_supplicant handles this, thanks to the “scan_ssid=1” attribute in the config entry (?). The DHCP client isn’t redundant, because without it the routing table isn’t set up correctly (the default route should go through wlan0).
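Before going on to the DHCP stage, it can be handy to check that the phone’s SSID really shows up in the scan. The following is a minimal Python sketch, assuming that the output of “iwlist wlan0 scan” has been captured into a string. The function name find_essids and the shortened sample text are made up for illustration.

```python
import re

def find_essids(scan_output: str) -> list:
    """Return the ESSIDs that appear in the text printed by "iwlist <interface> scan"."""
    return re.findall(r'ESSID:"([^"]*)"', scan_output)

# A shortened copy of the scan output shown above
sample = '''wlan0 Scan completed :
Cell 01 - Address: 00:34:DA:3D:F8:F5
ESSID:"MYPHONE"
Mode:Master
Frequency:2.462 GHz (Channel 11)
'''

print("MYPHONE" in find_essids(sample))
```

If the SSID isn’t in the list, there’s no point in waiting for wpa_supplicant to associate.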

Shutting down

WPA supplicant config file

The WPA supplicant scans on wlan0 and looks for SSIDs that match an entry in its config file. If one is found, it authenticates with that entry’s passphrase. It looks like it handles the association as well.

/etc/wpa_supplicant/wpa_supplicant.conf should read:

ctrl_interface=/var/run/wpa_supplicant

ctrl_interface_group=wheel

network={

ssid="MYPHONE"

scan_ssid=1

key_mgmt=WPA-PSK

psk="MYPASSWORD"

}

(it’s already this way)

I needed to update a lot of releases, each designated by a tag, with a single fix, which took the form of a commit. This commit was marked with the tag “this”.

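#!/bin/bash

# Stop at the first failure, so a failed cherry-pick doesn't go unnoticed
set -e

# -F makes grep treat "2.0" literally (the dot won't match any character)
for i in $(git tag | grep -F 2.0) ; do

  git checkout "$i"

  git cherry-pick this

  # Move the tag to the commit that was just created
  git tag -d "$i"

  git tag "$i"

done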

This bash script checks out every tag that matches “2.0”, cherry-picks the fix on top of it, and then moves the tag to the resulting commit. It’s recommended to try it on a cloned repo before going for the real thing, or things can get sad.

These are messy, random notes that I took while setting up my little Raspberry Pi 3. Odds are that by the time you read this, I’ve replaced it with a mini-PC. So if you ask a question, my answer will probably be “I don’t remember”.

Even though the Pi is cool, it didn’t supply what I really wanted from it, which is simultaneous output on SDTV and HDMI. It also turns out that it’s unable to handle a large portion of the video streams and apps out there on the web, seemingly because of the lack of processing power vs. the resolution of these streams (running Kodi, which I suppose is the best optimized application for the Pi). So as a catch-all media center attached to a TV set, it’s rather useless.

Starting

- Used the 2016-11-25-raspbian-jessie.zip image

- Raspbian: To get remote access over ssh, do “service ssh start” and login as “pi” with password “raspberry”. Best to remove these before really working. To make ssh permanent, go

# systemctl enable ssh

- Cheap USB charger from Ebay didn’t hold the system up, and a reboot occurred every time the system attempted to boot up. The original LG G4 charger is strong enough, though.

- Kodi installed cleanly with

# apt-get update

# apt-get install --install-suggests kodi

# apt-get install --install-suggests vlc

- … but it seems like vlc doesn’t use video acceleration, and I tried a lot to make it work. It didn’t. So it’s quite useless.

- Enabling Composite output: Use a four-lead 3.5mm plug (a stereo plug doesn’t work). The Samsung screen refused to work, but Radiance detected the signal OK.

I used an old Canon Powershot’s video cable, but attached to the RED plug for video, and not the yellow.

In /boot/config.txt, uncomment

sdtv_mode=2

However, composite video is disabled when an HDMI monitor is detected, and Q&A’s on the web seem to suggest that simultaneous output is not possible. Following this page, I tried setting (so that the HDMI output matches)

hdmi_group=1

hdmi_mode=21

and got 576i (PAL) on the HDMI output but the signals on the composite output were dead (checked with a scope).

- Added “eli” as a user:

# adduser --gid 500 --uid 1010 eli

- Add “eli” as a sudoer: Add the file /etc/sudoers.d/010_eli-nopasswd saying

eli ALL=(ALL) NOPASSWD: ALL

- Manually edit /etc/group, find all the places it says “pi” and add “eli”, so both users belong to the same groups. Compare “id” outputs.

- Add ssh keys for password-less access (use ssh-copy-id)

- Change the timezone

$ sudo raspi-config

pick “4 Internationalisation Options” and change the timezone to Jerusalem

- Set “eli” as the default login: One possibility would have been to change the config script (/usr/bin/raspi-config) as suggested on this page. Or change /etc/lightdm/lightdm.conf so it says

autologin-user=eli

as for console login, the key line in raspi-config is

ln -fs /etc/systemd/system/autologin@.service /etc/systemd/system/getty.target.wants/getty@tty1.service

so the change is to edit /etc/systemd/system/autologin@.service so it says

ExecStart=-/sbin/agetty --autologin eli --noclear %I $TERM

- Turn off screensaver / blanking: First check the current situation (from an ssh session, hence the display is given explicitly)

$ xset -display :0 q

Keyboard Control:

auto repeat: on key click percent: 0 LED mask: 00000000

XKB indicators:

00: Caps Lock: off 01: Num Lock: off 02: Scroll Lock: off

03: Compose: off 04: Kana: off 05: Sleep: off

06: Suspend: off 07: Mute: off 08: Misc: off

09: Mail: off 10: Charging: off 11: Shift Lock: off

12: Group 2: off 13: Mouse Keys: off

auto repeat delay: 500 repeat rate: 33

auto repeating keys: 00ffffffdffffbbf

fadfffefffedffff

9fffffffffffffff

fff7ffffffffffff

bell percent: 50 bell pitch: 400 bell duration: 100

Pointer Control:

acceleration: 20/10 threshold: 10

Screen Saver:

prefer blanking: yes allow exposures: yes

timeout: 600 cycle: 600

Colors:

default colormap: 0x20 BlackPixel: 0x0 WhitePixel: 0xffffff

Font Path:

/usr/share/fonts/X11/100dpi/:unscaled,/usr/share/fonts/X11/75dpi/:unscaled,/usr/share/fonts/X11/Type1,/usr/share/fonts/X11/100dpi,/usr/share/fonts/X11/75dpi,built-ins

DPMS (Energy Star):

Standby: 600 Suspend: 600 Off: 600

DPMS is Enabled

Monitor is On

So turn it off, according to this thread. Edit /etc/kbd/config to say (in different places of the file)

BLANK_TIME=0

POWERDOWN_TIME=0

and then append these lines to ~/.config/lxsession/LXDE-pi/autostart (this is a per-user thing):

@xset s noblank

@xset s off

@xset -dpms

More jots

Kodi setup

- Change setting level to Advanced

- System > Settings > Enable TV

- System > Settings > System > Power savings, set Shutdown function to Minimise (actually, it didn’t help regarding the blackout of the screen on exit)

- Enable and Configure PVR IPTV Simple Client

- On exit, use Ctrl-Alt-F1 and then Ctrl-Alt-F7 to get back from the blank screen it leaves (/bin/chvt should do this as well?)

Video issues

I wanted to get a simultaneous SDTV / HDMI output. Everyone says it’s impossible, but I wanted to give it a try. I mean, it’s the drivers that say no-no, but one can find a combination of registers that gets it working. The alternative is an external HDMI splitter, and then an HDMI to CVBS converter. Spoiler: I gave up in the end. Not saying it’s impossible, only that it’s not worth the bother. So:

Broadcom implements the OpenMAX API, which seems to have a limited set of GPGPU capabilities. For example, see firmware/opt/vc/src/hello_pi/hello_fft/ in Raspberry’s official git repo. The QPU is documented in VideoCoreIV-AG100-R.pdf, and there’s an open source assembler for it, vc4asm. Possibly this one is better, mentioned on this page. Also look at this blog.

This page details the VideoCore interface for Raspberry.

A utility for switching between HDMI/SDTV outputs (in hindsight, I would go for the official tvservice instead, but this is what I did):

$ git clone https://github.com/adammw/rpi-output-swapper.git

But that didn’t work:

eli@raspberrypi:~/rpi-output-swapper $ make

cc -Wall -DHAVE_LIBBCM_HOST -DUSE_EXTERNAL_LIBBCM_HOST -DUSE_VCHIQ_ARM -I/opt/vc/include/ -I/opt/vc/include/interface/vcos/pthreads -I./ -g -c video_swap.c -o video_swap.o -Wno-deprecated-declarations

cc -o video_swap.bin -Wl,--whole-archive video_swap.o -L/opt/vc/lib/ -lbcm_host -lvcos -lvchiq_arm -Wl,--no-whole-archive -rdynamic

rm video_swap.o

eli@raspberrypi:~/rpi-output-swapper $ sudo ./video_swap.bin --status

failed to connect to tvservice

which comes from this part in tvservice_init():

if ( vc_vchi_tv_init( vchi_instance, &vchi_connections, 1) != 0) {

fprintf(stderr, "failed to connect to tvservice\n");

exit(-4);

}

which is implemented in userland/interface/vmcs_host/vc_vchi_tvservice.c, header file vc_tvservice.h in same directory (Raspberry’s official git repo).

After a lot of back and forth, I compared with the official repo’s tvservice utility and discovered that it doesn’t check vc_vchi_tv_init()’s return value. So I ditched the check in video_swap as well, and it worked. But the results on the screen were so messy that I didn’t want to pursue this direction.

In what follows, some things I found out while trying to solve the problem: The program opens /dev/vchiq on bcm_host_init(), and performs a lot of ioctl()’s on it. The rest of tvservice_init() until the error message causes no system calls at all!

/dev/vchiq had major/minor 248/0 on my system. According to /proc/devices, it belongs to the vchiq module (not a big surprise…). The drivers are at drivers/misc/vc04_services/interface/vchiq_arm/, seemingly with vchiq_arm.c as the top-level file, and are enabled with CONFIG_BCM2708_VCHIQ.

There’s a utility, vcgencmd , for setting a lot of different things, log levels among them, but I didn’t manage to figure out where the log messages go to.

Motivation

Somewhere at the bottom of the report of ISE’s xst synthesizer, it says what the maximal frequency is, along with an outline of the slowest path. This is a rather nice feature, in particular when attempting to optimize a specific module. There is no such figure given after a regular Vivado synthesis, possibly because the guys at Xilinx thought this “maximal frequency” could be misleading. If so, they had two good reasons for that:

- There is no such thing as a “maximal frequency”: The tools pay attention to the timing constraints and do their best accordingly. Put shortly, you might not get frequency X unless you ask for it.

- In a typical design, there are many clocks with different frequencies. The slowest path might belong to a clock that’s slow anyhow.

And still, it’s sometimes useful to get an idea of where things stand before the Via Dolorosa of a full implementation.

How to do it in Vivado

First and foremost: Set the timing constraints according to your expectations. Or at least, in a way that makes it clear which clock is important, and which can be slow. Then synthesize the design.

After the synthesis has completed successfully, open the synthesized design (clicking “Open Synthesized Design” on the left bar or with the Tcl command “open_run synth_1”).

In the Tcl window, issue the command

report_timing_summary -file mytiming.rpt

which writes a full post-synthesis timing report into mytiming.rpt. Just “report_timing_summary” prints it out to the console.

There’s also a “Report Timing Summary” option under “Synthesized Design” on the left bar, but I find it difficult to navigate my way to getting information in the GUI representation of the report.

Reading the report

RULE #1: The synthesis report is no more than a rough estimation. The routing delays are guesses. It might report timing failures that the implementation will manage to fix, and it might say all is fine where the implementation will fail colossally (in particular when the FPGA’s logic usage goes close to 100%).

Now to action: The first thing to look at is the clock summary and Intra Clock Table, and get to know how Vivado has named which clock. For example,

------------------------------------------------------------------------------------------------

| Clock Summary

| -------------

------------------------------------------------------------------------------------------------

Clock                Waveform(ns)      Period(ns)  Frequency(MHz)

-----                ------------      ----------  --------------

clk_fpga_1           {0.000 5.000}     10.000      100.000

gclk                 {0.000 4.000}     8.000       125.000

audio_mclk_OBUF      {0.000 41.667}    83.333      12.000

clk_fb               {0.000 20.000}    40.000      25.000

vga_clk_ins/clk_fb   {0.000 20.000}    40.000      25.000

vga_clk_ins/clkout0  {0.000 1.538}     3.077       325.000

vga_clk_ins/clkout1  {0.000 7.692}     15.385      65.000

vga_clk_ins/clkout2  {0.000 7.692}     15.385      65.000

------------------------------------------------------------------------------------------------

| Intra Clock Table

| -----------------

------------------------------------------------------------------------------------------------

Clock WNS(ns) TNS(ns) TNS Failing Endpoints TNS Total Endpoints WHS(ns) THS(ns) THS Failing Endpoints THS Total Endpoints WPWS(ns) TPWS(ns) TPWS Failing Endpoints TPWS Total Endpoints

----- ------- ------- --------------------- ------------------- ------- ------- --------------------- ------------------- -------- -------- ---------------------- --------------------

clk_fpga_1 3.791 0.000 0 12474 0.135 0.000 0 12474 3.750 0.000 0 5021

gclk 6.751 0.000 0 2

audio_mclk_OBUF 76.667 0.000 0 1

clk_fb 12.633 0.000 0 2

vga_clk_ins/clk_fb 38.751 0.000 0 2

vga_clk_ins/clkout0 1.410 0.000 0 10

vga_clk_ins/clkout1 10.747 0.000 0 215 -0.029 -0.229 8 215 6.712 0.000 0 195

vga_clk_ins/clkout2 3.990 0.000 0 415 0.135 0.000 0 415 7.192 0.000 0 211

If the clock frequencies listed in the Clock Summary (which are derived from the timing constraints) don’t help in matching a clock with its name, the TNS Total Endpoints figure of each clock in the Intra Clock Table can help tell which clock is which. Once the name of the clock of interest is nailed down, search for it in the file, and find something like this:

Max Delay Paths

--------------------------------------------------------------------------------------

Slack (MET) : 3.791ns (required time - arrival time)

Source: xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_offset_limit_1/C

(rising edge-triggered cell FDRE clocked by clk_fpga_1 {rise@0.000ns fall@5.000ns period=10.000ns})

Destination: xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_end_offset_0/D

(rising edge-triggered cell FDRE clocked by clk_fpga_1 {rise@0.000ns fall@5.000ns period=10.000ns})

Path Group: clk_fpga_1

Path Type: Setup (Max at Slow Process Corner)

Requirement: 10.000ns (clk_fpga_1 rise@10.000ns - clk_fpga_1 rise@0.000ns)

Data Path Delay: 6.077ns (logic 2.346ns (38.605%) route 3.731ns (61.395%))

Logic Levels: 8 (CARRY4=3 LUT3=1 LUT4=1 LUT6=3)

Clock Path Skew: -0.040ns (DCD - SCD + CPR)

Destination Clock Delay (DCD): 0.851ns = ( 10.851 - 10.000 )

Source Clock Delay (SCD): 0.901ns

Clock Pessimism Removal (CPR): 0.010ns

Clock Uncertainty: 0.154ns ((TSJ^2 + TIJ^2)^1/2 + DJ) / 2 + PE

Total System Jitter (TSJ): 0.071ns

Total Input Jitter (TIJ): 0.300ns

Discrete Jitter (DJ): 0.000ns

Phase Error (PE): 0.000ns

Location Delay type Incr(ns) Path(ns) Netlist Resource(s)

------------------------------------------------------------------- -------------------

(clock clk_fpga_1 rise edge)

0.000 0.000 r

PS7 0.000 0.000 r xillybus_ins/system_i/vivado_system_i/processing_system7_0/inst/PS7_i/FCLKCLK[1]

net (fo=1, unplaced) 0.000 0.000 xillybus_ins/system_i/vivado_system_i/processing_system7_0/inst/n_707_PS7_i

BUFG (Prop_bufg_I_O) 0.101 0.101 r xillybus_ins/system_i/vivado_system_i/processing_system7_0/inst/buffer_fclk_clk_1.FCLK_CLK_1_BUFG/O

net (fo=5023, unplaced) 0.800 0.901 xillybus_ins/xillybus_core_ins/bus_clk_w

r xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_offset_limit_1/C

------------------------------------------------------------------- -------------------

FDRE (Prop_fdre_C_Q) 0.496 1.397 f xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_offset_limit_1/Q

net (fo=5, unplaced) 0.834 2.231 xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_offset_limit[1]

LUT4 (Prop_lut4_I0_O) 0.289 2.520 r xillybus_ins/xillybus_core_ins/unitw_1_ins/Mcompar_n0037_lutdi/O

net (fo=1, unplaced) 0.000 2.520 xillybus_ins/xillybus_core_ins/unitw_1_ins/Mcompar_n0037_lutdi

CARRY4 (Prop_carry4_DI[0]_CO[3])

0.553 3.073 r xillybus_ins/xillybus_core_ins/unitw_1_ins/Mcompar_n0037_cy[0]_CARRY4/CO[3]

net (fo=1, unplaced) 0.000 3.073 xillybus_ins/xillybus_core_ins/unitw_1_ins/Mcompar_n0037_cy[3]

CARRY4 (Prop_carry4_CI_CO[3])

0.114 3.187 r xillybus_ins/xillybus_core_ins/unitw_1_ins/Mcompar_n0037_cy[4]_CARRY4/CO[3]

net (fo=3, unplaced) 0.936 4.123 xillybus_ins/xillybus_core_ins/unitw_1_ins/Mcompar_n0037_cy[7]

LUT6 (Prop_lut6_I4_O) 0.124 4.247 f xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_wr_request_condition/O

net (fo=7, unplaced) 0.480 4.727 xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_wr_request_condition

LUT3 (Prop_lut3_I2_O) 0.124 4.851 r xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_flush_condition_unitw_1_wr_request_condition_AND_179_o3_lut/O

net (fo=1, unplaced) 0.000 4.851 xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_flush_condition_unitw_1_wr_request_condition_AND_179_o3_lut

CARRY4 (Prop_carry4_S[2]_CO[3])

0.398 5.249 f xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_flush_condition_unitw_1_wr_request_condition_AND_179_o2_cy_CARRY4/CO[3]

net (fo=21, unplaced) 0.979 6.228 xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_flush_condition_unitw_1_wr_request_condition_AND_179_o

LUT6 (Prop_lut6_I5_O) 0.124 6.352 r xillybus_ins/xillybus_core_ins/unitw_1_ins/_n03401/O

net (fo=15, unplaced) 0.502 6.854 xillybus_ins/xillybus_core_ins/unitw_1_ins/_n0340

LUT6 (Prop_lut6_I5_O) 0.124 6.978 r xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_end_offset_0_rstpot/O

net (fo=1, unplaced) 0.000 6.978 xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_end_offset_0_rstpot

FDRE r xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_end_offset_0/D

------------------------------------------------------------------- -------------------

(clock clk_fpga_1 rise edge)

10.000 10.000 r

PS7 0.000 10.000 r xillybus_ins/system_i/vivado_system_i/processing_system7_0/inst/PS7_i/FCLKCLK[1]

net (fo=1, unplaced) 0.000 10.000 xillybus_ins/system_i/vivado_system_i/processing_system7_0/inst/n_707_PS7_i

BUFG (Prop_bufg_I_O) 0.091 10.091 r xillybus_ins/system_i/vivado_system_i/processing_system7_0/inst/buffer_fclk_clk_1.FCLK_CLK_1_BUFG/O

net (fo=5023, unplaced) 0.760 10.851 xillybus_ins/xillybus_core_ins/bus_clk_w

r xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_end_offset_0/C

clock pessimism 0.010 10.861

clock uncertainty -0.154 10.707

FDRE (Setup_fdre_C_D) 0.062 10.769 xillybus_ins/xillybus_core_ins/unitw_1_ins/unitw_1_end_offset_0

-------------------------------------------------------------------

required time 10.769

arrival time -6.978

-------------------------------------------------------------------

slack 3.791

This is a rather messy piece of text, but only a handful of its lines matter here: the section title (Max Delay Paths), Slack, Path Group, Requirement and Data Path Delay.

Before drawing any conclusions, make sure it’s the right part you’re looking at:

- It’s the Max Delay Paths section. The minimal paths section is useful for spotting hold time violations, and has no effect on the maximal frequency.

- It’s the right clock. In the example above, it’s clk_fpga_1. The Requirement line states not only the constraint given for this clock (10 ns = 100 MHz), but also that it goes from one rising edge of clk_fpga_1 to another.

Once that’s done, let’s see what we’ve got: The requirement was 10 ns, and the slack 3.791 ns (note that it’s positive), which means that we could have asked for a clock period 3.791 ns shorter and it would still be OK. So it could have been 10 – 3.791 = 6.209 ns, which is some 161 MHz.

So the short answer to the “maximal clock” question for clk_fpga_1 is 161 MHz. But remember that this figure might change if the constraints change.
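The arithmetic above, as a minimal Python sketch. The function name max_frequency_mhz is made up for illustration, and the result is only as good as the post-synthesis estimate it’s based on.

```python
def max_frequency_mhz(requirement_ns: float, slack_ns: float) -> float:
    """Estimate the maximal clock frequency from the Requirement and Slack lines.

    A negative slack (a failed constraint) gives a frequency below the
    one that was requested.
    """
    min_period_ns = requirement_ns - slack_ns
    if min_period_ns <= 0:
        raise ValueError("the slack must be smaller than the requirement")
    return 1000.0 / min_period_ns

# The figures of clk_fpga_1 in the report above
print(round(max_frequency_mhz(10.0, 3.791), 2))  # 161.06
```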

And a final note: The Data Path Delay tells us something about what made this worst path slow or fast: how much of the delay went on logic, and how much on the (estimated) routing delays. So does the detailed delay report that follows. For a more detailed report, consider using the “-max_paths” and “-nworst” flags when requesting the timing report, so a few worst-case paths are listed. This can help solve timing problems.
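To see how the figures in the report relate to each other, the following sketch redoes its arithmetic in Python, with the numbers copied from the report above. The variable names are my own and not Vivado’s.

```python
import math

# All figures are in ns, copied from the timing report above.
tsj, tij, dj, pe = 0.071, 0.300, 0.000, 0.000

# Clock Uncertainty: ((TSJ^2 + TIJ^2)^1/2 + DJ) / 2 + PE
uncertainty = round((math.sqrt(tsj**2 + tij**2) + dj) / 2 + pe, 3)

capture_edge = 10.851   # the clock edge as it arrives at the destination flip-flop
pessimism = 0.010       # the "clock pessimism" line
setup_term = 0.062      # the "FDRE (Setup_fdre_C_D)" line
arrival = 6.978         # the "arrival time" line

required = capture_edge + pessimism - uncertainty + setup_term
slack = required - arrival

# Breakdown of the Data Path Delay into logic and routing
logic, route = 2.346, 3.731
logic_share = 100 * logic / (logic + route)

print(f"uncertainty={uncertainty:.3f} required={required:.3f} slack={slack:.3f}")
print(f"logic share of the data path: {logic_share:.3f}%")
```

This reproduces the report’s 0.154 ns uncertainty, 10.769 ns required time and 3.791 ns slack, and shows that about 61% of the data path delay is estimated routing.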

It’s quite well-known that in order to call, from plain C, a function that was compiled as part of a C++ source file, an extern “C” declaration is needed.

So if this appears on a C++ source file:

void my_puts(const char *str) {

...

}

and there’s an attempt to call my_puts() in a plain C file, this will fail with a normal compiler as well as HLS.

In HLS, specifically, the function call in the C file will yield an error like

ERROR: [SYNCHK 200-71] myproject/example/src/main.c:20: function 'my_puts' has no function body.

The thing is, that just adding an extern “C” in the .cpp file will not be enough. The “no function body” error will not go away. What’s required is setting the namespace to hls as well. Something like this:

namespace hls {

extern "C" {

void my_puts(const char *str) {

...

}

}

}

The inspiration for this solution came from the source files of the HLS suite itself. I don’t know if this is really a good idea, only that it works.

If you’re into Linux, and you ever find yourself in a place you’d like to return to with Waze (in the middle of some road, or some not-so-well-mapped village, a campus etc.), just take a photo with your cellphone. Assuming that it stores the GPS info.

Alternatively, the “My GPS Coordinates” Android app can be useful to obtain, SMS, and share the coordinates. But I’ll stick to the photo method.

Use exiftool to extract the coordinates from the image. The -c flag makes sure the coordinates are in plain format:

$ exiftool -c "%.6f degrees" 20160328_160309.jpg

ExifTool Version Number : 8.00

File Name : 20160328_160309.jpg

Directory : .

File Size : 5.2 MB

File Modification Date/Time : 2016:06:14 15:43:43+03:00

File Type : JPEG

MIME Type : image/jpeg

[ ... ]

GPS Altitude : 0 m Above Sea Level

GPS Date/Time : 2016:03:28 13:02:58Z

GPS Latitude : 32.777351 degrees N

GPS Longitude : 35.024139 degrees E

GPS Position : 32.777351 degrees N, 35.024139 degrees E

Image Size : 5312x2988

Shutter Speed : 1/50

[ ... ]

Aha! Now a manual edit of the GPS Position values turns them into a link. The link is

https://maps.google.com/?ll=32.777351,35.024139

I’m lucky enough to live in the North-East part of the world. Had it been south or west, just put negative numbers.

Or, create a Waze link, which can be tapped on the phone to get me to that place:

http://waze.to/?ll=32.777351,35.024139&navigate=yes

This opens the web browser, which in turn opens Waze, which started telling me what to do to get there…

The following link can also be used to open Waze directly, however it has to be part of a link on a page like this:

waze://?ll=32.777351,35.024139

As plain text in a mail message, SMS or in Keep, it didn’t work on my LG G4 Android, because the “waze:” prefix didn’t turn it into a link in these apps. It’s still useful within a website (or an HTMLed web message?)
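The manual edit can be automated. The following is a minimal Python sketch, assuming that the GPS Latitude and GPS Longitude values are given exactly in the format that exiftool printed above. The function names are made up for illustration. South and west coordinates are turned into negative numbers.

```python
def signed(value: str) -> float:
    """Convert a string like '32.777351 degrees N' into a signed decimal number."""
    number, _, hemisphere = value.split()
    sign = -1.0 if hemisphere in ("S", "W") else 1.0
    return sign * float(number)

def links(latitude: str, longitude: str) -> dict:
    """Build the Google Maps and Waze links for a pair of exiftool coordinates."""
    ll = f"{signed(latitude):.6f},{signed(longitude):.6f}"
    return {
        "google": f"https://maps.google.com/?ll={ll}",
        "waze": f"http://waze.to/?ll={ll}&navigate=yes",
    }

for name, url in links("32.777351 degrees N", "35.024139 degrees E").items():
    print(name, url)
```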

Single-source utilities

This is the Makefile I use for compiling a lot of simple utility programs, one .c file per utility:

CC= gcc

FLAGS= -Wall -O3 -g -fno-strict-aliasing

ALL= broadclient broadserver multicastclient multicastserver

all: $(ALL)

clean:

	rm -f $(ALL)

	rm -f `find . -name "*~"`

%: %.c Makefile

	$(CC) $< -o $@ $(FLAGS)

The ALL variable contains the list of output files, each having a corresponding *.c file. The list above is just an example.

The last implicit rule (%: %.c) tells Make how to create an extension-less executable file from a *.c file. It’s almost redundant, since Make attempts to compile the corresponding C file anyhow, if it sees a target file with no extension (try “make --debug=v”). If the rule is removed, and the CFLAGS variable is set to the current value of FLAGS, it will work the same, except that the Makefile itself won’t be a dependency.

IMPORTANT: Put the dynamic library flags (e.g. -lm, not shown in the example above) last in the command line, or “undefined reference” errors may occur on some compilation platforms (Debian in particular). See my other post.

Multiple-source utilities

CC= gcc

ALL= util1 util2

OBJECTS = common.o

HEADERFILES = common.h

LIBFLAGS=-fno-strict-aliasing

FLAGS= -Wall -O3 -g -fno-strict-aliasing

all: $(ALL)

clean:

	rm -f *.o $(ALL)

	rm -f `find . -name "*~"`

%.o: %.c $(HEADERFILES)

	$(CC) -c $(FLAGS) -o $@ $<

$(ALL) : %: %.o Makefile $(OBJECTS)

	$(CC) $< $(OBJECTS) -o $@ $(LIBFLAGS)

Note that in this case LIBFLAGS is used only for linking the final executables.

Strict aliasing?

It might stand out that the -fno-strict-aliasing flag is the only one with a long name, so there’s clearly something special about it.

Strict aliasing means (in broad strokes) that the compiler has the right to assume that if there are two pointers of different types, they point at different memory regions (and not just not having the same address). In other words, dereferences of pointers (as in *p) of different types are treated as independent non-pointer variables. Reordering and elimination of operations is allowed accordingly.

For example, a struct within a struct. If you have a pointer to the outer struct as well as one to the inner struct, you’re on slippery ice.

The actual definition is finer than that, and some of it is further explained on this page (or just Google for “strict aliasing”). Regardless, Linus Torvalds explains why this flag is used in the Linux kernel here.

The thing is that unless you’re really aware of the detailed rules, there’s a chance that you’ll write code that works on one version of gcc and fails on another. The difference might be where and how this or another compiler decided to optimize the code. Such optimization may involve reordering of operations with mutual dependency or optimizing away things that have no impact unless some pointers are related.

This is true in particular for code that plays with pointer casting. So if the code is written in the spirit of “a pointer is just a pointer, what could go wrong”, -fno-strict-aliasing flag is your friend.

General

As part of a larger project, I was required to set up a PCIe link between a host and some FPGAs through a fiber link, in order to ensure medical-grade electrical isolation of a high-bandwidth video data link + allow for control over the same link.

These are a few jots on carrying a 1x Gen2 PCI Express link over a plain SFP+ fiber optics interface. PCIe is, after all, just one GTX lane going in each direction, so it’s quite natural to carry each Gigabit Transceiver lane on an optical link.

When a general-purpose host computer is used, at least one PCIe switch is required in order to ensure that the optical link is based upon a steady, non-spread spectrum clock. If an FPGA is used as a single endpoint at the other side of the link, it can be connected directly to the SFP+ adapter, with the condition that the FPGA’s PCIe block is set to asynchronous clock mode.

Since my project involved more than one endpoint on the far end (an FPGA and USB 3.0 chip), I went for the solution of one PCIe switch on each end. Avago’s PEX 8606, to be specific.

All in all, there are two issues that really require attention:

- Clocking: Making sure that the clocks on both sides are within the required range (and it doesn’t hurt if they’re clean from jitter)

- Handling the receiver detect issue, detailed below

How each signal is handled

- Tx/Rx lanes: Passed through with fiber. The differential pair is simply connected to the SFP+ respective data input and output.

- PERST: Signaled by turning off laser on the upstream side and issuing PERST to everything on the downstream side on (a debounced) LOS (Loss of Signal).

- Clock: Not required. Keep both clocks clean, and within 250 ppm.

- PRSNT: Generated locally, if this is at all relevant

- All other PCIe signals are not mandatory

Some insights

- It’s as easy (or difficult) as setting up a PCIe switch on both sides. The optical link itself is not adding any particular difficulty.

- Dual clock mode on the PCIe switches is mandatory (hence only certain devices are suitable). The isolated clock goes to a specific lane (pair?), and not all configurations are possible (e.g. not all 1x on PEX8606).

- According to PCIe spec 4.2.6.2, the LTSSM goes to Polling if a receiver has been detected (that is, a load is sensed), but Polling returns to Detect if there is no proper training sequence received from the other end. So apparently there is no problem with a fiber optic transceiver, even though it presents itself as a false load in the absence of a link partner at the other side of the fiber: The LTSSM will just keep looping between Detect and Polling until such partner appears.

- The SFP+ RD pins are transmitters on the PCIe wire pair, and the TD are receivers. Don’t get confused.

- AC coupling: All lane wires must have a 100 nF capacitor in series. External connectors (e.g. PCIe fingers) must have a capacitor on the PET side (but must not have one on the ingoing signal).

- Turn off ASPM wherever possible. Most BIOSes and many Linux kernels volunteer doing that automatically, but it’s worth making sure ASPM is never turned on in any usage scenario. A lot of errors are related to the L0s state (which is invoked by ASPM) in both switches and endpoints.

- Not directly related, but it’s often said that the PERST# signal remains asserted 100 ms after the host’s power is stable. The reference for this is section 2.2 of the PCI Express Card Electromechanical Specification (“PERST# Signal”): “On power up, the deassertion of PERST# is delayed 100 ms (TPVPERL) from the power rails achieving specified operating limits.”

PEX 86xx notes

- PEX_NT_RESETn is an output signal (but shouldn’t be used anyhow)

- It seems like the PLX device cares about nothing that happened before the reset: A lousy voltage ramp-up or the absence of clock. All is forgotten and forgiven.

- A fairly new chipset and BIOS are required on the motherboard, say from year 2012 and on, or the switch isn’t handled properly by the host.

- On a Gigabyte Technology Co., Ltd. G31M-ES2L/G31M-ES2L, BIOS FH 04/30/2010, the motherboard’s BIOS stopped the clock shortly after powering up (it gave up, probably). That probably left the PEX clockless, leading to completely weird behavior.

- There’s a difference between the lane numbering and the port numbering (the latter is used in the function numbers of the “virtual” endpoints created with respect to each port). For example, on 8606 running a 2x-1x-1x-1x-1x configuration, lanes 0-1, 4, 5, 6 and 7 are mapped to ports 0, 1, 5, 7 and 9 respectively. Port 4 is lane 1 in an all-1x configuration (with other ports mapped the same).

- The PEX doesn’t detect an SFP+ transceiver as a receiver on the respective PET lane, which prevents bringup of the fiber lane, unless the SerDes X Mask Receiver Not Detected bit is enabled in the relevant register (e.g. bit 16 at address 0x204). The lane still produces its receiver detection pattern, but ignores the fact it didn’t feel any receiver at the other end. See below.

- In dual-clock mode, the switch works even if the main REFCLK is idle, given that the respective lane is unused (needless to say, the other clock must work).

- Read the errata of the device before picking one. It’s available on PLX’s site, on the same page from which the Data Book is downloaded.

- Connect an EEPROM on custom board designs, and be prepared to use it. It’s a lifesaver.
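As for the Mask Receiver Not Detected bit mentioned in the list above, setting it boils down to a read-modify-write of the register. The sketch below only shows the bit arithmetic, using the example address and bit number given above (0x204, bit 16). How the register is actually read and written (EEPROM, I2C, memory-mapped access) is outside its scope, and the names are made up for illustration. The correct bit for a given SerDes should be taken from the Data Book.

```python
RECEIVER_DETECT_REG = 0x204    # register address, per the example above
MASK_RX_NOT_DETECTED_BIT = 16  # bit number, per the example above


def mask_receiver_not_detected(reg_value, bit=MASK_RX_NOT_DETECTED_BIT):
    """Return the 32-bit register value with the mask bit set.

    All other bits are left as they were.
    """
    return (reg_value | (1 << bit)) & 0xFFFFFFFF


old = 0x00000000  # hypothetical value read back from the register
new = mask_receiver_not_detected(old)
print(f"write 0x{new:08x} to register 0x{RECEIVER_DETECT_REG:03x}")
```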

Why receiver detect is an issue

Before attempting to train a lane, the PCIe spec requires the transmitter to check if there is any receiver on the other side. The spec requires that the receiver should have a single-ended impedance of 40-60 Ohm on each of the P/N wires at DC (and a differential impedance of 80-120 Ohms, but that’s not relevant). The transmitter’s single-ended impedance isn’t specified, only the differential impedance must be 80-120. The coupling capacitor may range between 75-200 nF, and is always on the transmitter’s side (this is relevant only when there’s a plug connection between Tx and Rx).

The transmitter performs a receiver detect by creating an upward common mode pulse of up to 600 mV on both lane wires, and measuring the voltage on these. This pulse lasts for 100 us or so. As the time constant for 50 Ohms combined with 100 nF is 5 us, a charging capacitor’s voltage pattern is expected. Note that the common mode impedance of the transmitter is not defined by the spec, but the transmitter’s designer knows it. Either way, if a flat pulse is observed on the lane wires, there’s no receiver sensed.
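The 5 us figure is just R times C. Here is a small sketch of that arithmetic, using a plain single-pole RC model. This is a simplification: it ignores the transmitter’s own common mode impedance, which the spec doesn’t define. With the extremes allowed by the spec (40-60 Ohm, 75-200 nF), the same formula gives a time constant between 3 us and 12 us, still well within the 100 us pulse.

```python
import math

R_TERMINATION = 50.0  # ohms, receiver's single-ended termination
C_COUPLING = 100e-9   # farads, AC coupling capacitor


def time_constant(r_ohms, c_farads):
    """RC time constant in seconds."""
    return r_ohms * c_farads


def charged_fraction(t_seconds, tau_seconds):
    """Fraction of the final voltage the capacitor has reached after t."""
    return 1.0 - math.exp(-t_seconds / tau_seconds)


tau = time_constant(R_TERMINATION, C_COUPLING)
print(f"tau = {tau * 1e6:.1f} us")
for t_us in (5, 10, 25, 100):
    fraction = charged_fraction(t_us * 1e-6, tau)
    print(f"{t_us:>3} us into the pulse: {fraction:.3f} of final voltage")
```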

Now to SFP+ modules: The SFP+ specification requires a nominal 100 Ohm differential impedance on its receivers, but “does not require any common mode termination at the receiver. If common mode terminations are provided, it may reduce common mode voltage and EMI” (SFF-8431, section 3.4). Also, it requires DC-blocking capacitors on both transmitter and receiver lane wires, so there’s some extra capacitance on the PCIe-to-SFP+ direction (where the SFP+ is the PCIe receiver) which is not expected. But the latter issue is negligible compared with the possible absence of common mode termination.

As the common-mode termination on the receiver is optional, some modules may be detected by the PCIe transmitter, and some may not.

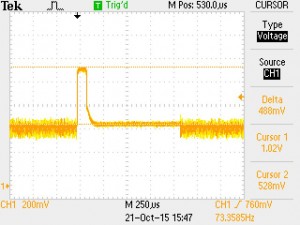

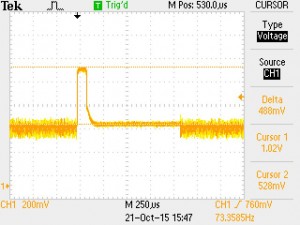

This is what one of the PCIe lane’s wires looks like when the PEX8606 switch is set to ignore the absence of a receiver (with the SerDes X Mask Receiver Not Detected bit): It still runs the receiver detect test (the large pulse), but then goes to link training even though no load was detected (that’s the noisy part after the pulse). In the shown case, the training kept failing (no response on the other side), so it goes back and forth between detection and training.

This capture was done with a plain digital oscilloscope (~ 200 MHz bandwidth).

These are a few jots I wrote down as I wrote some code that generates component.xml files automatically. The XML convention of this file is the IP-XACT format, a specification by the SPIRIT Consortium which can be downloaded for free from IEEE. The “spirit:” prefixes all over the XML file indicate that the keywords are defined in the IP-XACT spec.

Block design files (*.bd), which are the only essential source Vivado needs for defining a block design, also follow the IP-XACT convention. However, they serve a different purpose and have a different format.

An IP-XACT file can be opened directly in Vivado (File -> Open IP-XACT File… or the ipx::open_ipxact_file Tcl command on earlier Vivados) and there are plenty of Tcl commands (try “help ipx::” at Tcl prompt, yes, with two colons).

Structure

Everything is under the <spirit:component> entry.

- Vendor, library version etc

- busInterfaces: Each busInterface groups ports (to be listed later on) into interfaces such as AXI, AXI Streaming etc. Each interface must be one of those known to Vivado, and it seems like it’s not possible to add a custom interface in a sensible way.

- model: views and ports, see below

- fileSets: Each fileset lists the files that are relevant for one particular view. The pairing is done by matching the view’s fileSetRef attribute with the fileset’s name attribute.

- description: This is some text that is displayed to the user. It can be long

- parameters

- vendorExtensions (Xilinx taxonomy, basic stuff, note the supportedFamilies entry)

The “model” entry has two subentries:

- views: Different ways to consume the files of the IP: Synthesis in Verilog, synthesis in VHDL, synthesis in any language, files for describing GUI etc. It seems like Vivado is looking at the envIdentifier attribute in particular, and the fileSetRef for linking with a fileset.

A view doesn’t have to contain all files required, but several views are used together for a given scenario. For example, when synthesizing in Verilog, the fileset linked to the view identified with “verilogSource:vivado.xilinx.com:synthesis” (typically named “xilinx_verilogsynthesis”) will probably contain the Verilog files. But if there’s also a fileset linked with a view identified with “:vivado.xilinx.com:synthesis” (typically named “xilinx_anylanguagesynthesis”), its files will be used as well. The latter fileset may contain netlists (ngc, edif), which the language-specific fileset may not.

- ports: Lists the top-level module’s ports. Input ports may have a defaultValue attribute, which defines the value in case nothing is connected to it. All ports appearing in the busInterfaces section must appear here, in which case Vivado includes them in a group. If a port doesn’t belong to any bus interface, it’s exposed as a wire on the block.
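The pairing between views and filesets can be sketched with a few lines of Python. Several things here are assumptions made for the sake of illustration: the XML snippet is hand-written and stripped down (not a complete component.xml), the namespace URI is the one I believe goes with IP-XACT 1685-2009, and the “attributes” are taken to be child elements, with fileSetRef wrapping a localName element. The fileset name “verilog_files” and the file path are made up.

```python
import xml.etree.ElementTree as ET

# Namespace assumed for the "spirit:" prefix (IP-XACT 1685-2009).
SPIRIT = "http://www.spiritconsortium.org/XMLSchema/SPIRIT/1685-2009"
NS = {"spirit": SPIRIT}

# Hand-written, minimal example. Names and paths are hypothetical.
SAMPLE = f"""\
<spirit:component xmlns:spirit="{SPIRIT}">
  <spirit:model>
    <spirit:views>
      <spirit:view>
        <spirit:name>xilinx_verilogsynthesis</spirit:name>
        <spirit:envIdentifier>verilogSource:vivado.xilinx.com:synthesis</spirit:envIdentifier>
        <spirit:modelName>mymodule</spirit:modelName>
        <spirit:fileSetRef>
          <spirit:localName>verilog_files</spirit:localName>
        </spirit:fileSetRef>
      </spirit:view>
    </spirit:views>
  </spirit:model>
  <spirit:fileSets>
    <spirit:fileSet>
      <spirit:name>verilog_files</spirit:name>
      <spirit:file>
        <spirit:name>src/mymodule.v</spirit:name>
        <spirit:fileType>verilogSource</spirit:fileType>
      </spirit:file>
    </spirit:fileSet>
  </spirit:fileSets>
</spirit:component>
"""


def files_per_view(xml_text):
    """Map each view's envIdentifier to the files of its linked fileset."""
    root = ET.fromstring(xml_text)
    # Collect the file names of each fileset, keyed by the fileset's name
    filesets = {}
    for fs in root.findall("spirit:fileSets/spirit:fileSet", NS):
        name = fs.findtext("spirit:name", namespaces=NS)
        filesets[name] = [f.findtext("spirit:name", namespaces=NS)
                          for f in fs.findall("spirit:file", NS)]
    # Follow each view's fileSetRef to the fileset with the same name
    result = {}
    for view in root.findall("spirit:model/spirit:views/spirit:view", NS):
        env = view.findtext("spirit:envIdentifier", namespaces=NS)
        ref = view.findtext("spirit:fileSetRef/spirit:localName", namespaces=NS)
        result[env] = filesets.get(ref, [])
    return result


print(files_per_view(SAMPLE))
```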

Notes

- In the files listed in a fileset, each one is given a fileType attribute. This attribute has to be one listed in the IP-XACT standard section C.8.2 (e.g. verilogSource, vhdlSource, tclSource etc.). Other strings will be rejected by Vivado. For xci, ngc, edif etc, Vivado expects a userFileType attribute instead. One of fileType or userFileType must be present.

- When instantiating the IP in a block design, Vivado expects the top-level module to have the name given in the modelName attribute in the relevant view. This is typically the name of one of the modules of the fileset.

- The entries in vendorExtensions -> taxonomies determine where the IP will appear in Vivado’s IP Catalog, when it’s listed by groups. The path is given as a directory path, with slashes (hence the leading slash, marking “root”). It’s fine to invent a name for a new root entry, in which case a new group is generated in the IP Catalog. Vivado accepts taxonomies it doesn’t know of.

- Sub-cores’ XCI files may go into a Verilog/VHDL Synthesis fileset, but the last file in the fileset must be in Verilog/VHDL.

So the situation is like this: An email I attempted to send got rejected by the recipient’s mail server because my ISP (Netvision) has a poor spam reputation. And it so happens that I have a shell account (with root, possibly) on a server with an excellent reputation. So how do I use this advantage?

On my Thunderbird oldie, save the message with “Save As…” from the “Sent” folder into an .eml file. Or from “Unsent Mail” folder, if it’s a fresh message which I haven’t even tried to send the normal way (using the “Send Later” feature).

Copy this .eml file to the server with good mail reputation.

On that server, go

$ sendmail -v -t < test.eml

"eli@picky.server.com" <eli@picky.server.com>... Connecting to [127.0.0.1] via relay...

220 theserver.org ESMTP Sendmail 8.14.4/8.14.4; Sat, 18 Jun 2016 11:05:26 +0300

>>> EHLO theserver.org

250-theserver.org Hello localhost.localdomain [127.0.0.1], pleased to meet you

250-ENHANCEDSTATUSCODES

250-PIPELINING

250-8BITMIME

250-SIZE

250-DSN

250-ETRN

250-DELIVERBY

250 HELP

>>> MAIL From:<eli@theserver.org> SIZE=864

250 2.1.0 <eli@theserver.org>... Sender ok

>>> RCPT To:<eli@picky.server.com>

>>> DATA

250 2.1.5 <eli@picky.server.com>... Recipient ok

354 Enter mail, end with "." on a line by itself

>>> .

250 2.0.0 u5I85QQq030607 Message accepted for delivery

"eli@picky.server.com" <eli@picky.server.com>... Sent (u5I85QQq030607 Message accepted for delivery)

Closing connection to [127.0.0.1]

>>> QUIT

221 2.0.0 theserver.org closing connection

The -v flag causes all the verbose output, and the -t flag makes sendmail take the recipients from the message’s headers (To:, Cc: and Bcc:). If there’s a Bcc: header, it’s removed before sending.
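To get a feel for what -t does with the headers, here’s a rough Python imitation using the standard email module. This is only an illustration of the behavior described above, not how sendmail is implemented. The sample message is made up (the Bcc address in particular is hypothetical), and so is the function name.

```python
from email import message_from_string
from email.utils import getaddresses

# Made-up message for illustration; the Bcc address is hypothetical.
SAMPLE_EML = """\
From: Eli <eli@theserver.org>
To: "eli@picky.server.com" <eli@picky.server.com>
Bcc: hidden@example.net
Subject: test

Hello.
"""


def recipients_and_outgoing(eml_text):
    """Return (list of recipient addresses, message text without Bcc)."""
    msg = message_from_string(eml_text)
    fields = []
    for header in ("To", "Cc", "Bcc"):
        fields.extend(msg.get_all(header, []))
    recipients = [addr for _name, addr in getaddresses(fields)]
    del msg["Bcc"]  # drops every Bcc header; no error if there is none
    return recipients, msg.as_string()


rcpts, outgoing = recipients_and_outgoing(SAMPLE_EML)
print(rcpts)
print(outgoing)
```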

IMPORTANT: If the processing involves several connections, they will be shown one after the other. In particular, the first leg might be to the local mail server, and only then the relaying out. In that case, the EHLO of the first connection is set by the sendmail executable we’re running, not the sendmail server.

Note that the “MAIL From:” (envelope sender) is the actual user on the Linux machine (user name@the machine’s domain name). The -f flag can be used to change this:

# sendmail -v -f 'eli@billauer.co.il' -t < test.eml
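If this needs to be done from a script, the same command line can be wrapped in Python. This is a minimal sketch, assuming the sendmail executable is in the PATH. The function names are made up, and only the flags shown above (-v, -f, -t) are used.

```python
import subprocess


def sendmail_argv(envelope_sender=None, verbose=True):
    """Build the sendmail command line; -f is added only if a sender is given."""
    argv = ["sendmail"]
    if verbose:
        argv.append("-v")
    if envelope_sender:
        argv += ["-f", envelope_sender]
    argv.append("-t")
    return argv


def send_eml(path, envelope_sender=None):
    """Feed an .eml file to sendmail's stdin and return its exit status."""
    with open(path, "rb") as eml:
        return subprocess.run(sendmail_argv(envelope_sender), stdin=eml).returncode


print(" ".join(sendmail_argv("eli@billauer.co.il")))
```

Calling send_eml("test.eml", "eli@billauer.co.il") would then be the equivalent of the command above.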

To be sure it went fine, look in /var/log/maillog. A successful transmission leaves an entry like this:

Jun 18 11:06:17 theserver sendmail[30611]: u5I85QQq030607: to=<eli@picky.server.com>, ctladdr=<eli@theserver.org> (500/123), delay=00:00:51, xdelay=00:00:48, mailer=esmtp, pri=120985, relay=picky.server.com. [108.86.85.180], dsn=2.0.0, stat=Sent (OK id=1bEBGd-0007kL-DB)

Note the mail ID, which was given by sendmail (u5I85QQq030607 in this example). Finding all related log messages is done simply with e.g. (as root)

# grep u5I85QQq030607 /var/log/maillog

Jun 18 11:05:26 theserver sendmail[30607]: u5I85QQq030607: from=<eli@theserver.org>, size=985,, nrcpts=1, msgid=<57650054.90002@picky.server.com>, proto=ESMTP, daemon=MTA, relay=localhost.localdomain [127.0.0.1]

Jun 18 11:05:29 theserver sendmail[30604]: u5I85NZV030604: to="eli@picky.server.com" <eli@picky.server.com>, ctladdr=eli (500/123), delay=00:00:06, xdelay=00:00:03, mailer=relay, pri=30864, relay=[127.0.0.1] [127.0.0.1], dsn=2.0.0, stat=Sent (u5I85QQq030607 Message accepted for delivery)

Jun 18 11:06:17 theserver sendmail[30611]: u5I85QQq030607: to=<eli@picky.server.com>, ctladdr=<eli@theserver.org> (500/123), delay=00:00:51, xdelay=00:00:48, mailer=esmtp, pri=120985, relay=picky.server.com. [208.76.85.180], dsn=2.0.0, stat=Sent (OK id=1bEBGd-0007kL-DB)

(the successful finale is the last message)
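The same check can be scripted. Below is a minimal sketch that picks the delivery lines for a given mail ID and pulls out the mailer, dsn and stat fields. The sample log is an abbreviated version of the entries above, plus one invented line for another message (marked as hypothetical) to show that unrelated entries are skipped. The regular expression is tailored to these lines and isn’t a general maillog parser.

```python
import re

# Abbreviated from the log entries above. The last line is hypothetical.
SAMPLE_LOG = """\
Jun 18 11:05:26 theserver sendmail[30607]: u5I85QQq030607: from=<eli@theserver.org>, nrcpts=1, relay=localhost.localdomain [127.0.0.1]
Jun 18 11:05:29 theserver sendmail[30604]: u5I85NZV030604: to=<eli@picky.server.com>, mailer=relay, dsn=2.0.0, stat=Sent (u5I85QQq030607 Message accepted for delivery)
Jun 18 11:06:17 theserver sendmail[30611]: u5I85QQq030607: to=<eli@picky.server.com>, mailer=esmtp, dsn=2.0.0, stat=Sent (OK id=1bEBGd-0007kL-DB)
Jun 18 11:07:00 theserver sendmail[30700]: u5I86AAa030700: to=<someone@example.com>, mailer=esmtp, dsn=4.0.0, stat=Deferred
"""

DELIVERY = re.compile(
    r"mailer=(?P<mailer>[^,]+),.*dsn=(?P<dsn>[\d.]+), stat=(?P<stat>.*)$"
)


def delivery_attempts(log_text, mail_id):
    """Return (mailer, dsn, stat) for each delivery line mentioning mail_id."""
    attempts = []
    for line in log_text.splitlines():
        if mail_id not in line:
            continue  # same filtering as the grep above
        match = DELIVERY.search(line)
        if match:  # "from=" lines have no mailer/dsn/stat and are skipped
            attempts.append((match["mailer"], match["dsn"], match["stat"]))
    return attempts


for mailer, dsn, stat in delivery_attempts(SAMPLE_LOG, "u5I85QQq030607"):
    print(f"{mailer:6} dsn={dsn} {stat}")
```

The entry to look for is the one with mailer=esmtp: a dsn beginning with 2 and a stat beginning with “Sent” means that the remote server accepted the message.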