PCI Express from a Xilinx/Altera FPGA to a Linux machine: Making it easy

Posted Under: FPGA, Intel FPGA (Altera), Linux kernel, PCI Express

Update: The project is up and running and available for a wide range of FPGAs. See the project’s home page for details.

Over the years I’ve worked on FPGA projects, I’ve always been frustrated by how difficult it is to communicate with a PC, or with an embedded processor running a decent operating system, for that matter. It’s simply amazing that even though the FPGA’s design is implemented on a PC and the FPGA is programmed from the PC, it’s so complicated to move application data between the PC and the FPGA. A way to push data from the PC to the FPGA and pull other data back would be useful not only for testing; it could also be the actual application.

So wouldn’t it be wonderful to have a standard FIFO connection on the FPGA, with the data written to the FIFO showing up in a convenient, readable way on the PC? Maybe several FIFOs? Maybe with configurable word widths? Well, that’s exactly the kind of framework I’m developing these days.

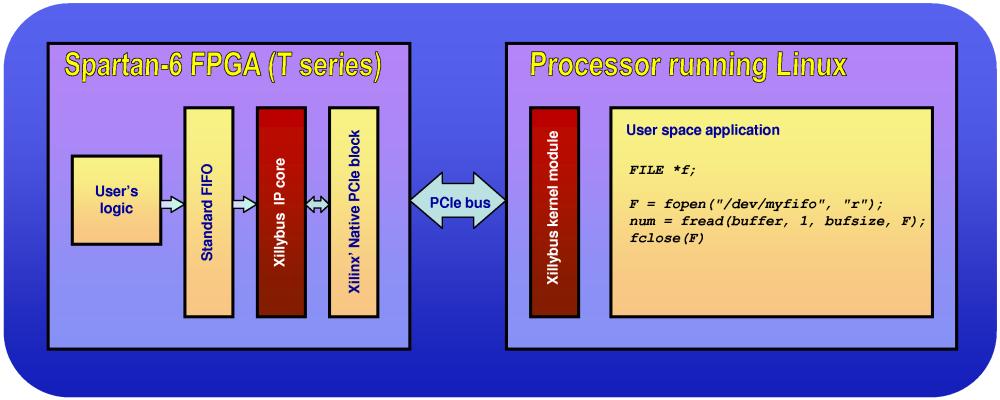

The usage idea is simple: A configurable core on the FPGA, which exposes standard FIFO interface lines to its user. On the other side we have a Linux-running PC or embedded processor, where there is a /dev device file for each FIFO in the FPGA. When a byte is written to the FIFO in the FPGA, it’s soon readable from the device file. Data is streamed naturally and seamlessly from the FIFO on the FPGA to a simple file descriptor in a userspace Linux application. No hassle with the I/O. Just simple FPGA design on one side, and a simple application on the Linux machine.
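As a sketch of what “a simple application on the Linux machine” can look like, the C function below reads from such a device file descriptor. A read() on a stream may return fewer bytes than requested, so the loop keeps reading until the buffer is full or end-of-file is reported. The name `read_full` is illustrative only, not part of any Xillybus API.

```c
#include <errno.h>
#include <stddef.h>
#include <unistd.h>

/*
 * Read up to `len` bytes from `fd` into `buf`. A single read() on a
 * stream may return fewer bytes than requested, so keep reading until
 * the buffer is full or end-of-file is reached. Returns the number of
 * bytes read, or -1 on error (errno is set by read()).
 */
ssize_t read_full(int fd, void *buf, size_t len)
{
    unsigned char *p = buf;
    size_t done = 0;

    while (done < len) {
        ssize_t n = read(fd, p + done, len - done);
        if (n < 0) {
            if (errno == EINTR)
                continue;      /* interrupted by a signal: just retry */
            return -1;
        }
        if (n == 0)
            break;             /* end-of-file: the stream was closed */
        done += (size_t)n;
    }
    return (ssize_t)done;
}
```

For example, after `int fd = open("/dev/xillybus_read_32", O_RDONLY);` (a hypothetical device name; `open()` also needs `<fcntl.h>`), a call to `read_full(fd, buf, sizeof buf)` on a blocking descriptor would wait until `sizeof buf` bytes have arrived from the FPGA’s FIFO or the stream reaches end-of-file.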

Ah, and the same goes for the reverse direction, of course.
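For the reverse direction, the application writes to a device file instead. write() may likewise accept only part of the buffer, so a minimal sketch (assuming a blocking file descriptor; `write_all` is a hypothetical helper name) loops until every byte has been handed to the driver:

```c
#include <errno.h>
#include <stddef.h>
#include <unistd.h>

/*
 * Write all `len` bytes of `buf` to `fd`. write() may accept fewer
 * bytes than requested, so keep writing the remainder.
 * Returns 0 on success, -1 on error (errno is set by write()).
 */
int write_all(int fd, const void *buf, size_t len)
{
    const unsigned char *p = buf;

    while (len > 0) {
        ssize_t n = write(fd, p, len);
        if (n < 0) {
            if (errno == EINTR)
                continue;      /* interrupted by a signal: just retry */
            return -1;
        }
        p += n;                /* skip past what was accepted */
        len -= (size_t)n;
    }
    return 0;
}
```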

The transport is a PCI Express connection. With certain Spartan-6 and Virtex-5/6 devices, this boils down to connecting seven pins from the FPGA to the processor’s PCI Express port, or to a PCIe switch (for a single lane: a transmit pair, a receive pair, the reference clock pair and the reset signal). Well, not exactly: a clock cleaner is most likely necessary. But it’s seven FPGA pins anyhow, with reference designs to copy from. It’s quite difficult to get this wrong.

No kernel programming will be necessary either. All that is needed is to compile a certain kernel module against the headers of the running Linux kernel. In many environments, this merely consists of typing “make” at the shell prompt, plus copying a file or two.

So all in all, the package consists of a healthy chunk of Verilog code, which does the magic of turning plain FIFO interfaces into TLPs (Transaction Layer Packets) on the PCIe bus, and a kernel module on the Linux machine’s side, which talks with the hardware and presents the data so it can be read with simple file access.

If you think an IP core like this could be useful, by all means have a look at the project’s home page.

Reader Comments

Hello! How is this going?

According to schedule, I would say. I have the FPGA to CPU part set up and working (data streaming from FPGA, read from a regular file within Linux). I’m now working on the other direction.

Stay tuned…

Hello,

I have been reading your article about how to transfer data from an FPGA board to a computer, and I’m quite interested in your research.

I would like to use an FPGA board to send computed results to another computer over a PCIe bus.

I’m working with a Stratix IV, but I think your research may be quite helpful for my project.

Thank you.

Unfortunately, I’m a Xilinx guy, and the project I’m working on is designed for Spartan-6T at this time. This is not a research project, but rather an implementation of an IP core.

So if you’re ready to consider using a Xilinx device, you may be up and running as soon as July or so. Porting to Altera is currently not planned.

Thank you for your answer. I see that your project is going well.

I know that Xilinx and Stratix boards are totally different, but since both use the PCIe protocol with the same layers, I wanted to know whether you’re using a Nios II soft core to stream your data directly to your Linux computer, or whether you’re doing this only with a PCIe hard IP and some transceivers in PIPE mode.

Also, did you write the Linux driver yourself?

Since I would like to read the data coming from my FPGA, it would be great if I could read it directly from a file in my /dev directory.

I’m using a core provided by Xilinx to interface with a PCIe hardware core on Spartan-6T. I haven’t looked into how Altera does the wrapping, but I suppose porting is more than possible (given sufficient motivation…).

And yes, I’m doing the Linux part as well. As the diagram above implies, I use a classic /dev file to access data from the FPGA. In fact, it’s possible to read the data with simple shell-script commands such as cat, hexdump and dd.

I’ve seen many topics discussing Xilinx Spartan boards, but you’re the first one to explain things this well. Thank you for that.

I guess I will try to implement a PCIe HIP (hard IP) with a Nios II core, the Altera counterpart of what you did the Xilinx way.

As for the Linux driver, I think I’ll be able to write my own with your advice. Thank you.

I’m still very interested in your project, by the way.

Hi, I am working on a project to interface an Altera FPGA (Cyclone IV GX) with a Linux machine over PCI Express, to transfer data from the FPGA to the PC. I saw your explanation here using a Xilinx FPGA and the diagram you included, and yes, the block diagram is basically the same whether an Altera or a Xilinx FPGA is used. Would you please share the Linux driver code as well as the FPGA Verilog code? I would appreciate it very much.

The Linux driver’s sources are available for download at the site. The Verilog source code is not published, as the IP core is licensed for a fee.

Is the Linux driver’s source usable for Xilinx FPGAs only? The name Xillybus sounds as if it targets Xilinx only. So is the Xillybus Linux driver usable with Altera FPGAs as well? Your advice is very much needed. Thanks.

The Linux driver is tailored for communicating with the Xillybus IP core within the FPGA. It can help somewhat in understanding what a PCIe driver looks like, but that’s basically as relevant as it gets for a non-Xillybus project.

Porting Xillybus to Altera is somewhere in the long-term plans. Nothing to rely on at present.

Does the Xillybus IP have both PCIe initiator and target functionality in the FPGA? What PCIe throughput is achieved on Spartan-6 with the Linux/Windows driver, assuming the user interface does not apply any back pressure or flow control?

I am looking at an application that needs 100 MB/s in each direction.

Thanks

I’m not 100% sure I understood your question, so I’ll answer as best I can.

Throughput: The upstream direction (from the FPGA) offers 200 MB/s. Downstream gives slightly less than 100 MB/s for a single stream, but two independent streams can run in parallel and reach a total of nearly 200 MB/s.

All this holds for an x1 link, as offered by Spartan-6T. Virtex-6 goes higher.

Flow control: The FPGA logic interfaces with a FIFO’s empty or full lines. The rest of the flow control is handled transparently by Xillybus’ logic and drivers, largely by sensing the FIFO’s control signals on the other side.
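The full/empty handshake can be illustrated with a small software model of a FIFO. This C sketch is only a behavioral analogy for the hardware FIFO, not Xillybus’ logic; the depth of 16 words and all names are arbitrary assumptions. The producer may write only while the FIFO is not full, and the consumer may read only while it is not empty, which is what makes back pressure automatic.

```c
#include <stdbool.h>
#include <stdint.h>

#define FIFO_DEPTH 16u  /* arbitrary depth, for illustration only */

/* Software model of a FIFO with full/empty flags. */
struct fifo {
    uint32_t data[FIFO_DEPTH];
    unsigned head;   /* index of the next word to read */
    unsigned tail;   /* index of the next free slot */
    unsigned count;  /* number of words currently stored */
};

bool fifo_empty(const struct fifo *f) { return f->count == 0; }
bool fifo_full(const struct fifo *f)  { return f->count == FIFO_DEPTH; }

/* Producer side: a word may be written only while !full. */
bool fifo_write(struct fifo *f, uint32_t word)
{
    if (fifo_full(f))
        return false;  /* back pressure: the producer has to wait */
    f->data[f->tail] = word;
    f->tail = (f->tail + 1) % FIFO_DEPTH;
    f->count++;
    return true;
}

/* Consumer side: a word may be read only while !empty. */
bool fifo_read(struct fifo *f, uint32_t *word)
{
    if (fifo_empty(f))
        return false;  /* nothing to fetch yet */
    *word = f->data[f->head];
    f->head = (f->head + 1) % FIFO_DEPTH;
    f->count--;
    return true;
}
```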

Hi, I am working on a project where I need to send data from the PC to an FPGA (Virtex-6) over PCIe on Linux. I need to know where the data is stored in the FPGA, so that I can retrieve it, process it and send it to the MAC layer.

In the FPGA, you’ll fetch the data from a standard FIFO. This is explained in Xillybus’ site.

I am new to FPGAs and PCIe. Without using Xillybus, can’t we know the exact location where PCIe stores the data in the FPGA? And when PCIe is connected to Linux, do we need a kernel module to transfer the data from the PC?

Thanks Eli for the response on Xillybus throughput. Below are my definitions of initiator and target.

Initiator (or master): The FPGA has DMA-type functionality and can transfer data from the FPGA to the host without requiring Memory Read packets from the host.

Target: This is more like a slave, where the host initiates all write/read transactions and the FPGA just responds to Memory Write and Memory Read packets.

What packet size and transfer size did you use for throughput calculations?

Regarding where the data can be “found” when you don’t use Xillybus: The data isn’t stored anywhere. It arrives as packets which you need to handle one by one with a state machine you develop. On the PC side, yes, you need to develop or adopt some kind of driver.
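To give an idea of where such a state machine starts, the C sketch below classifies a TLP from its first header doubleword, using the field positions defined by the PCI Express Base Specification (Fmt in bits 31:29, Type in bits 28:24, Length in bits 9:0). In an FPGA this decoding is done in HDL; C is used here only for illustration, and the sketch assumes the doubleword has already been assembled with header byte 0 in bits 31:24. It recognizes only memory reads/writes and completions, and the type and function names are hypothetical.

```c
#include <stdint.h>

enum tlp_kind { TLP_MRD, TLP_MWR, TLP_CPL, TLP_CPLD, TLP_OTHER };

struct tlp_dw0 {
    enum tlp_kind kind;
    unsigned fmt;        /* Fmt[2:0], bits 31:29 */
    unsigned type;       /* Type[4:0], bits 28:24 */
    int four_dw_header;  /* Fmt bit 0: 4-DW header (64-bit address) */
    unsigned length_dw;  /* Length[9:0], bits 9:0, in DWs; 0 encodes 1024 */
};

/* Classify a TLP from its first header DW (header byte 0 in bits 31:24). */
struct tlp_dw0 tlp_decode_dw0(uint32_t dw0)
{
    struct tlp_dw0 h;
    h.fmt = (dw0 >> 29) & 0x7u;
    h.type = (dw0 >> 24) & 0x1fu;
    h.four_dw_header = (h.fmt & 0x1u) != 0;
    h.length_dw = dw0 & 0x3ffu;
    if (h.length_dw == 0)
        h.length_dw = 1024;  /* only meaningful for TLPs that carry or request data */

    int with_data = (h.fmt & 0x2u) != 0;   /* Fmt bit 1: payload follows the header */
    if (h.fmt & 0x4u)
        h.kind = TLP_OTHER;                /* Fmt 100b: TLP prefix, not a header */
    else if (h.type == 0x00)
        h.kind = with_data ? TLP_MWR : TLP_MRD;
    else if (h.type == 0x0a)
        h.kind = with_data ? TLP_CPLD : TLP_CPL;
    else
        h.kind = TLP_OTHER;                /* I/O, config, messages, locked reads... */
    return h;
}
```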

As for the throughput: All data transfers are initiated by the FPGA (“Initiator”). Packet size and transfer size have no exact meaning when working with Xillybus, because the system presents a stream interface. So basically, I just ran a program that reads from or writes to a file descriptor as fast as possible (typically a few GBs), and divided the amount of data by the elapsed time.
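The measurement described above amounts to timing a read loop. A minimal sketch, assuming a Linux system with `clock_gettime(CLOCK_MONOTONIC)` and taking 1 MB as 10^6 bytes (which definition was used for the figures above isn’t stated); `measure_read_mbps` is a hypothetical name:

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <stddef.h>
#include <time.h>
#include <unistd.h>

/*
 * Read from `fd` until `total` bytes have arrived or end-of-file, and
 * return the throughput in MB/s (1 MB = 10^6 bytes). The byte count
 * actually read is stored in *got. Returns -1.0 on a read error.
 */
double measure_read_mbps(int fd, size_t total, size_t *got)
{
    static unsigned char buf[1 << 16];  /* 64 KiB per read() call */
    struct timespec t0, t1;
    size_t done = 0;

    clock_gettime(CLOCK_MONOTONIC, &t0);
    while (done < total) {
        size_t want = total - done < sizeof buf ? total - done : sizeof buf;
        ssize_t n = read(fd, buf, want);
        if (n < 0) {
            if (errno == EINTR)
                continue;               /* interrupted: retry */
            *got = done;
            return -1.0;
        }
        if (n == 0)
            break;                      /* end-of-file */
        done += (size_t)n;
    }
    clock_gettime(CLOCK_MONOTONIC, &t1);

    double secs = (double)(t1.tv_sec - t0.tv_sec)
                + (double)(t1.tv_nsec - t0.tv_nsec) / 1e9;
    *got = done;
    return secs > 0.0 ? (double)done / secs / 1e6 : 0.0;
}
```

Timing the downstream direction works the same way with write() in place of read().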

Hi there!

First of all, I’m not an FPGA guy. I’m a Linux device driver developer.

I’m working on a project in which some of the communication (I2C, SPI and MDIO) will be performed by the FPGA. The CPU will access these buses via PCI Express.

What has to be done in the FPGA and in the PCIe Linux device driver to allow the kernel to see the communication interfaces?

If we take SPI as an example, the FPGA logic will need to serialize/deserialize the data and then connect it to a standard FIFO. This is not completely trivial if you’re not experienced with FPGA design, but this logic has to be there one way or another.
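The deserializing half can be pictured with a behavioral model. The C sketch below assumes SPI mode 0 (data sampled on the rising edge of SCLK) and MSB-first bit order, which are common defaults but device-specific; each group of eight samples becomes one byte, which the FPGA logic would then write into the FIFO. The function name is hypothetical, and the real logic would of course be written in HDL.

```c
#include <stddef.h>
#include <stdint.h>

/*
 * Behavioral model of an SPI deserializer (mode 0, MSB first):
 * bits[i] is the MISO level sampled on the i-th SCLK rising edge.
 * Every 8 samples form one byte, which is what FPGA logic would push
 * into the FIFO. Returns the number of complete bytes stored in `out`;
 * a trailing partial byte is discarded.
 */
size_t spi_deserialize(const uint8_t *bits, size_t nbits, uint8_t *out)
{
    uint8_t shift = 0;
    size_t nbytes = 0;

    for (size_t i = 0; i < nbits; i++) {
        /* Shift left: the first bit received ends up as the MSB. */
        shift = (uint8_t)((shift << 1) | (bits[i] & 1u));
        if ((i & 7u) == 7u)
            out[nbytes++] = shift;  /* byte complete: "write" it to the FIFO */
    }
    return nbytes;
}
```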

As for the Linux side, there is no work at all. Xillybus gives you the data directly through a device file interface.

Questions & Comments

Since the comment sections of similar posts tend to turn into a Q&A session, I’ve moved that to a separate place. So if you’d like to discuss something with me, please post questions and comments here instead. No registration is required.

This comment section is closed.